Consolidated SD-WAN Design Guide

- Last updated

- Save as PDF

Introduction to SD-WAN Design

![]() For supported software information, click here.

For supported software information, click here.

The Versa Networks SD-WAN solution is a highly robust and flexible platform that offers capabilities to address various SD-WAN use cases.

This series of SD-WAN design articles addresses the most common SD-WAN use cases, and they describe the Versa Networks recommendations and best practices for SD-WAN deployments. The objective is to help achieve a standardized approach to designing Versa Networks SD-WAN solutions. Note that these articles are not meant to cover detailed operational best practices nor to act as a service management manual.

These articles are targeted at network architects, engineers, administrators, and other technical audiences interested in designing, implementing, and deploying Versa Networks SD-WAN solutions. These articles assume that you are familiar with the basics of Versa Networks products and that you have a working knowledge of the Versa Network SD-WAN architecture as well as the wider Versa Networks ecosystem.

These articles are intended to be only a generic guide so that you can use them to explore use cases for your specific deployment. The articles do not cover every use case that the Versa Networks secure SD-WAN solution supports.

The designs and best practices described in these articles are based on the Release 20.2.2 software.

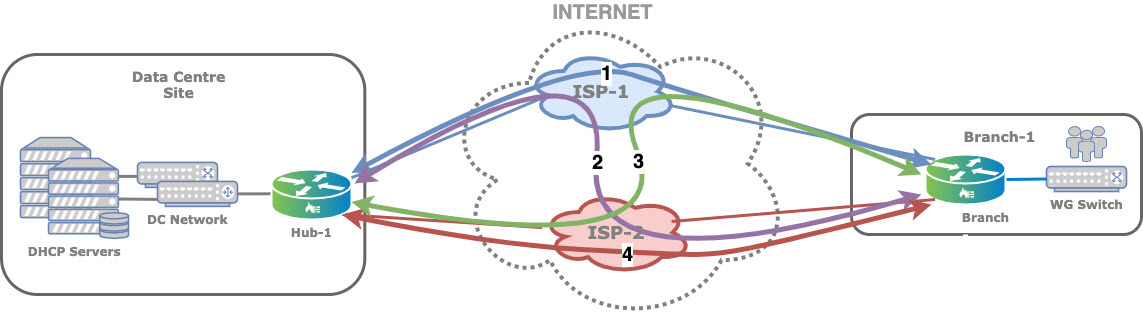

Reference Network Architecture

The designs in these SD-WAN articles are based on the reference network architecture shown in the following figure. The figure shows a high-level blueprint of a typical network topology built using the Versa Networks SD-WAN solution. This topology has one headend, one data center, and three remote branches. The headend consists of a Versa Analytics cluster, a Versa Director, and two Versa Controller nodes. Other components of the topology are a legacy network and a cloud proxy. There are three transport networks in the figure, MPLS, Internet, and LTE, and they are provided by one or more service providers. The network orchestration provided by the headend is reachable through Versa Networks Controller nodes, which are connected to all transport networks. Versa Director and Versa Analytics are hosted in the headend.

SD-WAN Design Guide Articles

This SD-WAN design guide includes the following articles:

- SD-WAN Headend Design Guidelines—Provides architectural and deployment guidelines for the Versa Networks Versa Analytics cluster, a Versa Director, and a Versa Controller headend components.

- Branch Deployment Options—Describes the most common transport-based branch deployment scenarios.

- LTE Transport Modes—In many scenarios, LTE is deployed as a backup path because its data costs are higher. This article discusses the LTE hot standby and cold standby mode.

- SD-WAN Overlay Networks—Discusses the IP addressing scheme for the overlay network and whether to have data traffic to follow the encrypted overlay,

- SD-WAN Topologies—Discusses the SD-WAN overlay topologies, which are full mesh, hub and spoke, regional mesh, and multi-VRF (also called multitenancy).

- VOS Edge Device Direct Internet Access—Describes the breaking out of traffic to the internet at the branch, which is called direct internet access, or DIA.

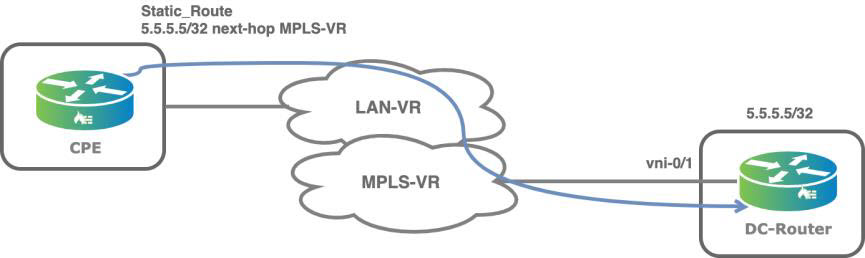

- SD-WAN Gateway Use Cases—Describes the three main use cases for VOS edge devices that act as SD-WAN gateways, which are connecting to sites on an MPS Layer 2 VPN network, connecting to sites over disjointed underlay networks, and acting as a gateway for internet-bound traffic.

- SD-WAN Traffic Optimization—Discusses how to use traffic steering, traffic conditioning, and SD-WAN path policies to optimize SD-WAN traffic flow.

- VOS Edge Routing Protocols—Describes common use cases for static and dynamic routing protocols.

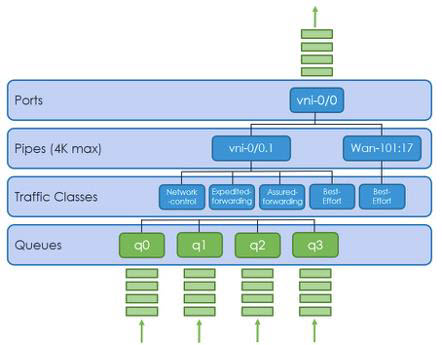

- QoS—Discusses how to configure quality of service (QoS), also known as class of service, or CoS, to ensure that the network prioritizes business critical traffic over less important traffic and treat this traffic with higher priority. You can also use QoS for other tasks, such as policing, shaping, and remarking the QoS bits in the IPv4/IPv6 and VLAN headers.

Supported Software Information

Releases 20.2 and later support all content described in this article.

SD-WAN Headend Design Guidelines

![]() For supported software information, click here.

For supported software information, click here.

This article provides architectural and deployment guidelines for the Versa Networks headend components. The headend consists of a Versa Analytics cluster, a Versa Director, and a Versa Controller.

Design Considerations for Headend Architecture

A typical Versa SD-WAN headend architecture is fully resilient, providing geographical redundancy. When you deploy the headend in a public cloud, you deploy it in different availability zones. For these deployments, the infrastructure must provide the capability for interconnectivity among the headend components.

The SD-WAN headend topology has the following components:

- Northbound segment, for management traffic

- Southbound segment, for control traffic

- Service VNF router to interconnect data centers

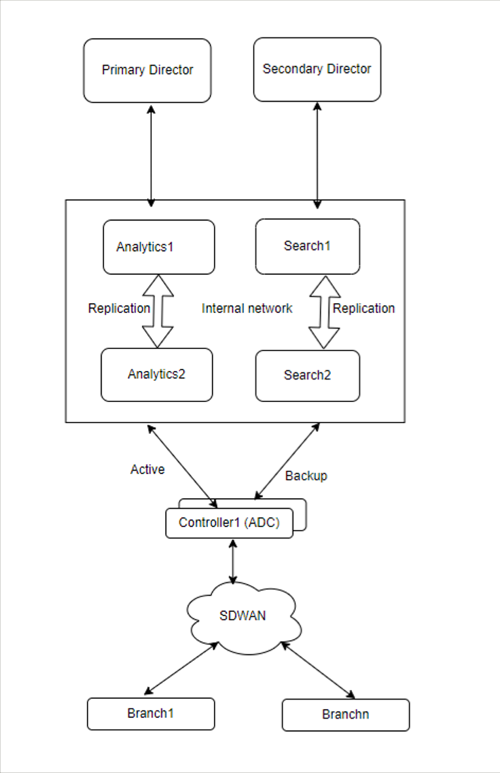

The following figure illustrates the headend components, which shows a topology that has headend components in two geographically separated data centers.

Administrators and other OSS systems use the northbound network to access the Versa Director, Analytics GUI, and REST APIs. The northbound network is also used to maintain synchronization between Versa Directors in a high availability cluster. Controller nodes require the northbound network to connect to Versa Director over the out-of-band (eth0) interface so that the Versa Director can provision the Controller nodes.

The southbound network, or control segment, connects to the SD-WAN overlay through the Controller nodes. Because the Director and Analytics nodes are hosts and not routers, the topology requires an additional router to provide a redundant connection to both Controller nodes. A dynamic routing protocol (either OSPF or BGP) must be enabled on this router to provide accurate overlay IP prefix reachability status, and BFD must be enabled towards the service VNF device in the data center. This additional router can be an existing router in the data center network, or it can be a VOS edge device managed by Versa Director.

The solution assumes reachability between the data center networks over the Director northbound operations support system (OSS) network. If the control network service router experiences an outage, the Director node can be accessible using the northbound network, and the administrator can gracefully fail over to the standby Director node.

Note: It is not mandatory to always have the Controller nodes connect to all transport domains. Technically, the SD-WAN network can function if an SD-WAN branch device is connected to the Controller node over just one underlay. However, from a resiliency point of view, it is recommended that the Controller node connect directly to all the transport domains that you use.

Note: The Director northbound OSS network must be separate from the control network to avoid the possibility that a split-brain state arises between the Director nodes. In a split-brain scenario, both Director nodes are up but network reachability between them is lost. The results is that both Director nodes assume that they are the active nodes.

If internet access is enabled to the Versa Director then you must secure the northbound traffic with appropriate security. Where users are able to access Versa Director directly from the internet such as the cloud-hosted Versa Directors, you must use a third dedicated interface into a DMZ. This allows separation between the link used for Director Sync and the user internet access to the Director.

The following figure shows a simple single data center deployment that is not geographically dispersed and in which service VNF routers are not required. This topology uses flat Layer 2 LANs for both the northbound and control networks, and it uses VRRP in the control LAN for gateway redundancy. However, this design is not suitable for creating Layer 3 security zones at the headend. If security is required, you must configure firewalls in bridge mode.

Headend Deployment Options

You can deploy the typical headend architectures shown above as host OSs on physical hardware, which is called a bare-metal deployment. You can also deploy these headend architectures in a virtualized architecture, for which Versa Networks supports ESXi and KVM, and the AWS, Azure, and Google Cloud Platform public clouds.

Deploying the headend on bare-metal hardware provides better performance and scalability, typically, 20 to 30 percent over a virtualized architecture. However, deploying on physical hardware has hardware dependencies. For example, not all server hardware, such as special RAID controllers, is supported.

For both bare-metal and virtualized deployments, it is important to follow the hardware requirements described in Hardware and Software Requirements for Headend.

Firewall Dependencies for Headend Deployment in a Data Center

When you implement the headend in an existing data center, several protocols and functions are required to connect to the data center. You must follow the requirements for connectivity, reachability, and security. For more information on firewall requirements, see Firewall Requirements.

Best Practices for Hardening the Headend

If you deploy Versa Directors that are exposed to the internet so that external users can access them, you must apply common IT hardening practices. This includes installing Ubuntu OS patches, installing official certificates (Versa Networks software ships with self-signed certificates), and changing the default passwords. Note that you must use the OS patches provided by Versa and not install OS patches directly from Ubuntu.

For more information, see the following articles:

Versa Analytics Deployment Options

The minimum recommended Versa Analytics deployment for full redundancy requires a cluster of four Analytics nodes, as illustrated in the figure below. This design provides active-active high availability (HA), with two nodes having the Search personality and two nodes having the Analytics personality.

Redundancy is achieved by replicating the data between one or more nodes of the cluster. Network latency must be low to optimize storage and query performance. This is because there is a large amount of data movement between the nodes of the cluster. Therefore, it is recommended that you allocate the nodes of the same cluster in the same data center, or at least within the same availability zone. Note that synchronization of the Cassandra database requires less than 10 milliseconds of latency between the nodes in the same cluster.

Recommended Production Analytics Deployment

To provide geographical resilience and for disaster recovery, you can add a second Versa Analytics cluster. You can set one cluster as a primary and the other as a secondary in the following redundant configurations.

Analytics Cluster Redundancy Options

You can configure a primary and secondary cluster in active-active or active-standby mode, or configure the secondary cluster for log redundancy only. We recommend that primary and secondary clusters run in separate datacenters. We do not recommend you separate the nodes a single cluster across multiple regions due to latency and performance issues. Running separate clusters per region helps manage these clusters independently and avoid downtime for storing and retrieving data during maintenance and node failures.

You can configure pairs of Analytics clusters in the following modes:

- Full Redundancy in Active-Active Mode—In this configuration, primary and secondary clusters have identical numbers of analytics, search, and log forwarder nodes. VOS devices are configured to send logs to both these independent clusters simultaneously. For detailed information about configuring clusters in active-active mode, see Configure a Secondary Cluster for Log Collection.

- Advantages:

-

Highly available.

-

The Analytics application can access all historic data from the secondary cluster when the primary cluster is down.

-

-

Limitations:

-

Running the entire database on a secondary cluster increases costs and operational complexity.

-

There is no cluster-to-cluster synchronization. If the primary cluster goes down, data may be retrieved from secondary cluster. However, when the primary cluster comes back up, there is no automatic synchronization of the data that the secondary cluster received during the downtime.

-

-

Sample Topology: A sample topology used for both active-active and active-standby modes is illustrated in the figure below. Note that although the topology is identical for the two modes, the software configuration differs for Controllers and VOS devices (branches).

- Advantages:

- Full Redundancy in Active-Standby Mode—In this configuration, primary and secondary clusters have identical number of analytics, search, and log forwarder nodes. VOS devices are configured to send one copy of the logs to Controllers. ADC load balancers on the Controllers are configured to send logs to only the primary cluster when all its Analytics nodes are up. When Analytics nodes are down, the load balancers automatically send logs to the Analytics cluster in the secondary data center. For information about configuring clusters in active-standby mode, see Configure a Secondary Cluster for Log Collection.

- Advantages:

- The Analytics application can access data from the secondary cluster when the primary cluster is down. In this mode, only data collected during the primary cluster down time is displayed.

-

Limitations:

-

Running the entire database on a secondary cluster increases costs and operational complexity.

-

The secondary cluster does not have data collected before the primary cluster went down.

-

When primary cluster operation resumes, you must switch between the clusters to get the consolidated data.

-

-

Topology: The topology for active-standby mode is identical to active-active mode.

- Advantages:

-

Log Redundancy Mode—In this configuration, the secondary cluster has only the log forwarders running. You configure ADC load balancers on Controllers to automatically forward logs to a secondary datacenter when all the nodes of the primary datacenter are down. The log forwarders in the secondary datacenter receive the logs and archive them. When the primary datacenter comes back up, you can run a cron script on the secondary log forwarders to transfer and populate the logs back to the primary Analytics cluster. Analytics nodes include a cluster synchronization tool to assist in the log transfer. For more information, see Cluster Synchronization Tool in What's New in Release 20.2.

-

Advantages:

-

No need to run the full Analytics cluster in the secondary datacenter and keep it in sync with the primary cluster in anticipation of the datacenter going down.

-

Saves on costs, without losing any data.

-

-

Limitations:

-

Until the primary cluster comes back up, Versa Analytics reports are not visible.

-

-

Sample Topology:

-

Role of the Log Forwarder

The Versa Operating SystemTM (VOSTM) edge devices generate logs destined for the Analytics database. These logs are carried in an IPFIX template and are delivered to the log forwarder. The log forwarder removes the IPFIX template overhead and stores the logs in clear text on log collector disk. The Analytics database servers can then access the logs from the log forwarder. Optionally, logs can be forwarded in syslog format to third-party log forwarders.

The log forwarder function is part of the Versa Analytics node. It can run on analytics or search nodes or on standalone log forwarder nodes, where a separate VM or server is dedicated to this role. For larger deployments, especially for deployments with 1000 or more branches, it is recommended that you separate the log forwarder function, because it provides better scalability for the Analytics platform. When the log forwarder runs on a separate device, you typically deploy it south of the analytics or search nodes, as shown in the following figure.

Best Practices for Versa Analytics Sizing

The scaling of a Versa Analytics cluster is driven by the logging configuration for the individual features enabled on VOS edge devices. Most features include an optional logging configuration to log events related to a specific rule or profile. The number of features for which you enable logging and the volume of logs that each feature generates has a direct impact on the sizing and scalability of the Versa Analytics cluster.

Versa Analytics has two distinct database personalities, Analytics node and Search node. The Analytics node delivers the data for most of the dashboards shown in the Analytics GUI. These data are aggregated data, and the data aggregation is enabled by default. Therefore, the dashboards are populated without configuration on the VOS edge devices. Analytics node scaling is determined by granularity of the reported data, which is usually set between 5 and 15 minutes. The topology also affects the scaling of the Dashboard feature. A full-mesh topology with multiple underlays generates more SLA logging messages compared to a hub-spoke topology with a single underlay. This is because SLA monitoring between branches in the underlay contributes significantly to the log volume as it reports SLA measurements to the Analytics node.

The primary tasks of the Search node are to store logging and event data, and to drive the log sections and log tables on the Dashboard. These functions are heavily utilized, because every event in the network triggers a log entry on the Search node. In a minimal default configuration, the only information that is logged to the search nodes are the alarm logs. The sizing of the search node depends on the following:

- Number of features enabled with logging

- Logging data volume and log rate

- Retention period of logged data

- Packet capture—You should enable packet capture and traffic monitoring only for traffic related to specific troubleshooting. Packet captures are not stored in the database, but rather as stored as PCAP files. If these files are not processed correctly, they can quickly fill up the disk space.

Feature such as firewall logging and traffic monitor logging (Netflow) have a significant impact on the overall scaling of the Analytics cluster. Therefore, it is recommended that you avoid creating wildcard logging rules. In response to an increases analytics load, you can scale both the Analytics and Search nodes horizontally. Versa Networks has a calculator tool that can help you size an Analytics cluster based on the information described in the next section.

Best Practices for Headend Design

A stable and fault-tolerant solution requires proper design of the headend. A proper design factors in the expected utilization in order to dimension the compute resources so that they can be horizontally scaled out when required. The headend design must be well thought out high availability design, to ensure that there is no single point of failures. Finally, the headend must be secured and hardened to avoid possible security vulnerabilities.

It is recommended that you consider the following when deploying a Versa Networks headend:

- Ensure that you configure DNS on the Director and Analytics nodes. DNS is used for operations such as downloading security packs (SPacks) an, on Analytics devices, for reverse lookup.

- Ensure that you configure NTP and synchronize it across all nodes in the SD-WAN network. It is recommended that you configure all nodes to be in the same time zone, to make log correlation between various components practical.

- Perform platform hardening procedures such as signed SSL certificates, password hardening, and SSL banners for CLI access.

- Ensure that you have installed the latest OS security pack (OS SPack) on all components and that you have installed the latest SPack on the Director node

- Ensure that the appropriate ports on any intermediate firewalls are open.

For more information, see the following articles:

For assistance with the design and validation of the headend before you move it into production, contact Versa Networks Professional Services.

Supported Software Information

Releases 20.2 and later support all content described in this article.

Branch Deployment Options

![]() For supported software information, click here.

For supported software information, click here.

The Versa Networks SD-WAN solution offers flexible and comprehensive branch deployment options. This article describes the most common transport-based branch deployment scenarios.

One of the benefits with Versa SD-WAN branch configuration is that each Versa Operating SystemTM (VOSTM) edge device provides the same feature capabilities, regardless of whether it is in a hub, spoke, or any other configuration. You can configure different topologies for different tenants on the same edge device, and at the same time.

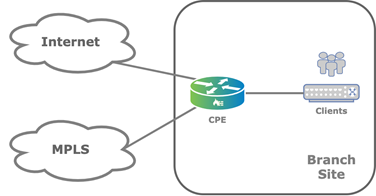

Branch with a Single CPE Device and Dual Transports

In the first branch deployment scenario, a customer branch site has a single CPE device that has two different WAN transport links. The following figure illustrates this scenario, showing that a dedicated MPLS connection and an internet connection terminate on the customer's CPE device.

In this topology, overlay tunnels between branches are formed through the MPLS or internet underlay. You configure this functionality using a Director Workflow function. You can use alternate underlays from different providers, such as LTE connections.

You can configure a single CPE branch to support a single organization (also called a tenant) or, as multitenant, to support multiple tenants. The edge device provides full segregation between organizations or tenants. To provide addition segmentation, each organization on the edge device uses virtual routing and forwarding (VRF).

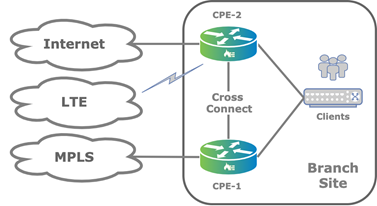

Branch with Active–Active Dual CPE Devices and Dual Transports

A variant of the scenario described in the previous section is a customer branch site that has two CPE devices in an active–active setup, instead of having a single CPE device, and that has two different WAN transport links, Here, the internet link connects to one of the CPE devices (CPE-1 in the figure below) and the MPLS link connects to the other (CPE-2 in the figure). The two paired CPE devices provide high availability (HA) and connect to each other using a cross-connect link.

On LAN side of the CPE device, the Virtual Router Redundancy Protocol (VRRP) provides gateway redundancy. If Layer 2 reachability on the LAN is not available, you can use Layer 3 routing protocols to reach the existing LAN side routers.

Each CPE device in the active–active HA pair maintains overlay connections to the WAN transports on the other CPE device. When the second underlay is physically attached to the other CPE device, it is logically represented on the local CPE device using a cross-connect link. In this deployment model, you can provision each CPE device with SD-WAN policies that leverage all the underlays.

This type of configuration is called active–active HA because both CPE devices always carry traffic to the underlay transport.

Note that state information, including NAT and session state, is not synchronized between the active–active HA CPE devices.

Best Practices for Single or Dual CPE Devices and Dual Transports

The following are best practices for branch deployments that consist of a single CPE device or two CPE devices in an active–active setup, and two WAN transports:

- For security, you should enable next-generation firewall (NGFW) on both CPE devices, because the NGFW and stateful firewall (SFW) software part of the LAN service chain, while the transport interfaces, including the cross-connect link, belong to the transport-VR domain.

- You should enable next-hop monitoring to upstream WAN transport gateway. Alternatively, you can enable a dynamic routing protocol.

- For this scenario, you can use the default Director Workflow for configuration.

Branch with Two CPE Devices and Three Transports

A third branch deployment scenario is a customer branch site has two CPE devices and three WAN links, as illustrated in the figure below. This is another form of the previous scenario, which has two CPE devices and two WAN transports.

The figure shows that the CPE-2 device is configured as a multihomed CPE device that has both internet and LTE connections. The second CPE device, CPE-1, connects only to the MPLS transport domain. You typically use this type of scenario when the LTE connection is required as a backup for the fixed transports.

When you deploy the branch CPE devices in active–active HA mode, the LAN side remains the same as described in Branch with Active–Active Dual CPE Devices and Dual Transports. This scenario also works for other combinations of WAN links, up to the maximum of 15 WAN links (for Releases 22.1.1 and later) or 8 WAN links (for Releases 21.2 and earlier) per tenant per device.

Note: Before you add more than 8 WAN links on any one VOS node that you are upgrading to Release 22.1.1, you must upgrade to Release 22.1.1 all the VOS nodes that communicate directly with the one running Release 22.1.1, including the Controller nodes.

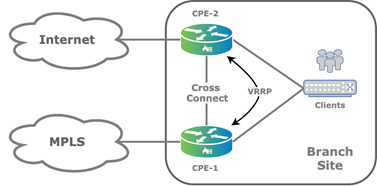

Branch HA

To implement HA at a customer branch site, the site must have two VOS edge devices. To provide branch redundancy, VOS devices support two modes of operation: active–active mode and active–standby mode.

Active–active mode, which is stateless, is the more commonly used HA mode. It is simple to deploy and provides better performance during standard operations, because both the VOS edge devices are able to process traffic at all times.

For active–standby mode, which is both stateless and stateful, both underlays for each CPE device are physically connected, and so the cross-connect link is not required. You can configure each CPE device as a standalone CPE device and use VRRP on the LAN side.

There are a few drawbacks to active–standby mode:

- Without the cross-connect, each CPE device cannot take advantage of both WAN transports. As a workaround, you can introduce a Layer 2 switch on the WAN side to allow each CPE device to have access to both WAN circuits.

- During a CPE device failover, stateful connections are lost and TCP sessions are re-established. While the user may not notice the re-establishment of the TCP session, the functioning of some devices may be affected.

In stateful active–standby HA mode, only the active CPE device can forward traffic.

Stateful active–standby HA mode maintains a stateful synchronization between the edge devices. You use this mode when state is important, such as for NGFW and CGNAT traffic.

The following table compares the HA modes.

| Stateless Active–Active Mode | Stateless Active–Standby Mode | Stateful Active–Standby Mode | |

|---|---|---|---|

| Overview |

|

|

|

| Use cases |

|

|

|

| Complexity |

|

|

|

| Available underlays |

|

|

|

| Performance |

|

|

|

| BGP, IPsec, SLA monitoring scalability and state |

|

|

|

| Convergence | |||

|

|

|

|

|

|

|

|

| Traffic restoration |

|

|

|

| Synchronized services between primary and secondary nodes |

|

|

|

| Node synchronized services |

|

|

|

Cross-Connects in an Active–Active HA Topology

The following figure shows an active–active branch HA topology that uses two WAN transport underlays but does not have the dual underlays on the CPE devices.

The cross-connect link is a physical connection between the redundant CPE devices that emulates the missing transport domain in a branch and provides redundancy to the attached clients, as illustrated in the following figure.

For the cross-connect link, you configure VLAN tagging for each WAN transport virtual router (VR) instance and you configure IP addresses configured using Workflow templates. Because the WAN transport VRs are distinct routing instances, they allow for reuse of IP addresses.

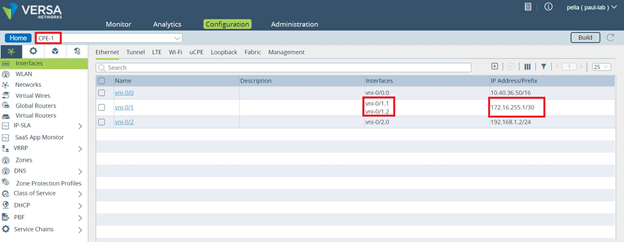

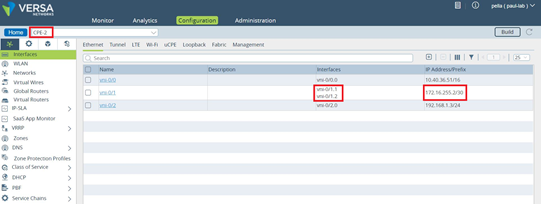

When you enable HA, by default, the back-to-back logical interfaces on the cross-connects are assigned IP addresses from the address range 172.16.255.0/30. The primary CPE device is assigned the address 172.16.255.1, and the second CPE device is assigned 172.16.255.2. Which CPE device is the primary or secondary is determined by which device uses the primary device template that is configured in the Director Workflow. The template for the secondary device is automatically generated by the Workflow.

The following screenshot shows a default HA interface configuration for the CPE-1 device. Notice that the assigned IP address is 172.16.255.1.

The following screenshot shows a default HA interface configuration for the CPE-2 device. Here, the assigned IP address is 172.16.255.2.

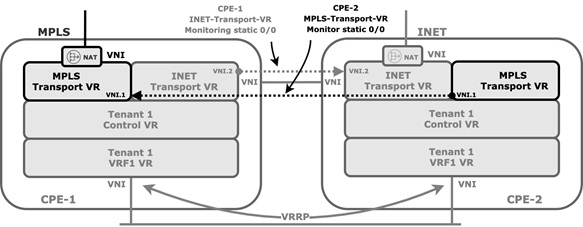

You configure static routes in the transport VR of each CPE device, and you use the Workflow to configure ICMP monitoring in the transport VR associated with the cross-connect link to direct traffic destined to the WAN connection of the paired CPE device. The following figure show static route ICMP monitoring with HA.

If the cross-connect interface fails over, ir the peer CPE device goes down, or if there is any other IP reachability issue over the cross-connect interface, the static route is withdrawn from the routing table of the corresponding WAN transport VR.

To configure ICMP monitoring on the CPE-1 device that connects to the MPLS transport VR from the CLI:

admin@CPE-1-cli> show configuration | display set | match icmp set routing-instances INET-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.1 none icmp set routing-instances INET-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.1 none icmp interval 5 set routing-instances INET-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.1 none icmp threshold 6

To configure ICMP monitoring on the CPE-2 device that connects to the internet transport-VR from the CLI:

admin@CPE-2-cli> show configuration | display set | match icmp set routing-instances MPLS-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.2 none icmp set routing-instances MPLS-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.2 none icmp interval 5 set routing-instances MPLS-Transport-VR routing-options static route 0.0.0.0/0 172.16.255.2 none icmp threshold 6

On the LAN side, you use VRRP to elect an active node and a standby node. The logical interface and its virtual IP address is used as the next hop or gateway on the LAN.

DIA in an Active–Active HA Topology

When you configure direct internet access (DIA) in an active–active HA scenario, there are a few things to note.

When you enable DIA, the Director Workflow automates the configuration of BGP peering between the transport VR and the LAN-VR, which is needed to propagate default route.

The ICMP monitoring is between the cross-connect logical interfaces of the CPE-1 internet transport VR and the CPE-2 device and therefore does not protect against internet WAN link failure on the CPE-2 device. This may result in a local internet black hole scenario if the internet WAN link fails on CPE-2, as show in the following figure.

To protect against the local black hole, you must take additional measures, such as monitoring the next next-hop (that is the remote next hop). Doing this may add complexity to the network design, because you may need to use NAT to allow the internet provider WAN interface to reply to ICMP echo requests. The NAT would be necessary because ICMP requests are sourced from the 172.16.255.0/30 prefix range and are not necessarily routed back by the provider router.

The recommended solution is to use dynamic routing over the cross-connect interface between transport VRs to propagate routes and the default route from main transport-VR of each CPE device.

Ports Used in a Branch Active–Active HA Topology

By default, each VOS branch device tries to send SD-WAN traffic using UDP port 4790 as both the source and destination port. However, for an active–active topology, this port cannot be used by the VOS device that is reachable over cross-connect links because active devices cannot both use the same port. However, when the traffic passes through the cross-connect, the source port is NATed to a random port in the range 1024 through 32000.

To change the range of ports used for NATing:

- In Director view:

- Select the Configuration tab in the top menu bar.

- Select Devices > Devices in the left menu bar.

- Select an organization in the left menu bar.

- Select a device in the main pane. The view changes to Appliance view.

- Select the Configuration tab.

- Select Services

> CGNAT in the left menu bar, select the Pools tab in the horizontal menu bar, and select the CGNAT pool.

> CGNAT in the left menu bar, select the Pools tab in the horizontal menu bar, and select the CGNAT pool.

- In the Edit CGNAT Pool popup window, select the Port tab.

- In the Allocation Scheme field, select Allocate Port from Range, and then enter the lowest and highest port numbers.

- Click OK.

The following figure illustrates how and where port translation occurs. In this example, for the Branch1A to reach the MPLS transport of the Branch2, it originates SD-WAN traffic from the source address 172.16.255.1:4790 and sends it to the destination address 8.7.6.5:4790. However, because Branch1B is already using 5.6.7.8:4790 as the source address for connections to Branch2, the VOS device performs a NAT translation of the traffic from Branch1A from 172.16.255.1:4790 to 5.6.7.8:1024 (or to a random port number in the range 1024 through 32000).

Supported Software Information

Releases 20.2 and later support all content described in this article, except:

- Release 22.1.1 supports up to a maximum of 15 WAN links per tenant per CPE device.

Additional Information

Configure Virtual Routers

Overview of Configuration Templates

LTE Transport Modes

![]() For supported software information, click here.

For supported software information, click here.

On Versa Operating SystemTM (VOSTM) edge devices, you can use LTE as a WAN link, and it acts just like any other WAN link. However, in many scenarios, LTE is deployed as a backup path because its data costs are higher. When you use LTE as a backup transport link, you can configure it in one of the following modes:

- Hot standby mode—In this mode, the LTE interface is up and SLA packets are sent over this link to remote sites to determine the path metrics, but the LTE link is not actively used for sending traffic.

- Cold standby mode—In this mode, you configure the LTE link and place it in an administratively Down state. The link state goes to Up only when all the primary wired WAN interfaces are down.

This article discusses the LTE hot standby and cold standby modes.

Hot Standby Mode

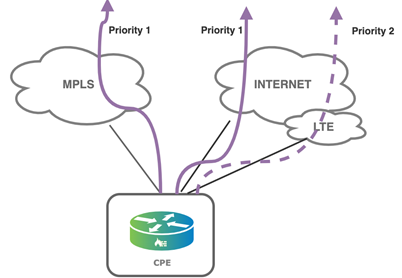

In hot standby mode, the LTE interface is up, and SLA packets are sent over this link to remote sites to determine the path metrics. However, the LTE link is used to send traffic only when the primary wired WAN links are down or out of SLA compliance. You can configure management traffic, such as LEF logging information or branch software uploads, to avoid the LTE link when other wired WAN links are available. The following figure illustrates the traffic priories in hot standby mode.

The following are the benefits of hot standby mode:

- When the primary wired WAN interfaces go down or are out of SLA compliance, the switchover to LTE occurs instantly.

- SLA-based steering is possible because the LTE link is actively monitored through SLA probes.

- The status and quality of the path is known at all times in standby mode. The LTE link generates alarms if the path becomes unavailable, prompting the administrator to take corrective action.

The following are the limitations of hot standby mode:

- The path through the LTE link is actively monitored. This means that SLA traffic is sent over the LTE link when it is in standby mode, thus consuming credits of the LTE data plan subscription.

- The amount of bandwidth used by the SLA traffic varies, depending on the number of network sites with which SLA peering is maintained. Also, the SLA probe interval influences the amount of data sent over the LTE link.

This section describes the following LTE hot standby configuration scenarios:

- Use LTE hot standby for SD-WAN VPN (site-to-site) traffic

- Use the LTE link as the backup circuit on local and remote devices

- Avoid the LTE link for non-business and scavenger traffic

- Use the LTE link as a backup for internet traffic

- Load-balance two wired WAN links, with an LTE backup

- Provide connectivity for management traffic

Configure LTE Hot Standby for SD-WAN VPN Traffic

This section discusses two scenarios for using LTE hot standby for SD-WAN VPN (site-to-site) traffic:

- Use LTE as backup circuit on local and remove device

- Avoid LTE for non-business and scavenger traffic

When you configure WAN links, a circuit media attribute is associated with each link. When you configure LTE hot standby for SD-WAN VPN (site-to-site) traffic, the circuit media can be any of the following:

- Cable

- DSL

- Ethernet

- LTE

- T1

- T3

Use LTE as Backup Circuit on the Local and Remote Branches

This section describes a scenario that requires the LTE links to be in hot standby mode. You should apply this configuration to all devices in the network, both those with LTE interfaces and those that do not have LTE interfaces.

In this scenario, the local branch decides which path to use to connect to the remote branch. This decision includes choosing the WAN circuits on the local and remote branches. In many networks, not all branches are deployed with LTE interfaces. A non-LTE branch must not use the path to the remote LTE interface if the remote wired internet interface is available.

The configuration shown here allows the local and the remote branches to use LTE only when needed.

The WAN link circuit media type is used to set the LTE link to be in hot standby mode. To verify whether the circuit media type is correct for all the WAN links:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Services

> SD-WAN > System > Site Configuration in the left menu bar.

> SD-WAN > System > Site Configuration in the left menu bar. - Click the

Edit icon. The Edit WAN Interfaces popup window displays.

Edit icon. The Edit WAN Interfaces popup window displays.

In the Media field, check that the media type is set to either Ethernet, DSL, or LTE, depending on the type of WAN link. The Ethernet interfaces are displayed as vni-0/0 and vni-0/1, and the LTE interfaces are displayed as vni-0/100 and vni-0/101.

To configure the LTE interface to be in hot standby mode, you set the circuit priority on the local and remote branches. Because the branch that initiates the traffic chooses the path, it is important that the local branch not prioritize the LTE link on the remote branch. This configuration applies when the sending branch does not have an LTE circuit, for example, to allow communication with a branch in a data center.

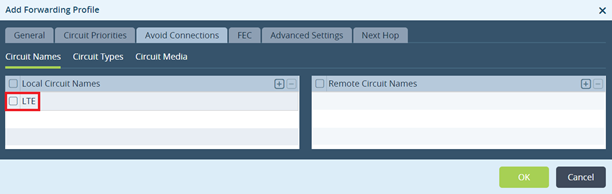

You configure the circuit priorities in the default SD-WAN forwarding profile. To configure the circuit priorities:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Configuration > Services

> SD-WAN > Forwarding Profiles.

> SD-WAN > Forwarding Profiles. - Click the

Add icon. The Add Forwarding Profile popup window displays.

Add icon. The Add Forwarding Profile popup window displays. - Select the Circuit Priorities tab.

- Click the

Add icon. The Add Circuit Priorities popup window displays.

Add icon. The Add Circuit Priorities popup window displays.

Set the circuit priorities as shown in the following table. Note that if the local and remote circuit media are other types of WAN links, such as E1 or T1, you can include them in the priority groups that contain Ethernet media. For circuit priorities, a lower value indicates a more preferred link and a higher value indicates a less preferred link.

| Priority | Purpose | Local Circuit Media | Remote Circuit Media |

|---|---|---|---|

| 1 | Use this circuit when local and remote wired WAN links are up | Ethernet, DSL | Ethernet, DSL |

| 2 | Use this circuit when the wired WAN links on the local branch are down and LTE is the only available WAN link | LTE | Ethernet, DSL |

| 3 | Use this circuit when the wired WAN links on the remote branch are down and LTE is the only available WAN link | Ethernet, DSL | LTE |

| 4 | Use this circuit when LTE is the only WAN link available on both the local and remote branches; you do not need to configure this priority |

Then you configure an SD-WAN policy rule to match the traffic and have it follow the WAN link priority order. To create an SD-WAN policy rule that matches all traffic (a wildcard match):

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Services

> SD-WAN > Policies in the left menu bar.

> SD-WAN > Policies in the left menu bar. - Select the Rules tab to create a new rule. For a wildcard catch all rule, do not configure any match conditions. Select the Enforce tab, in the Forwarding Profile field, select the SD-WAN forwarding profile that you created above, in this example called Default-FP.

Avoid LTE for Non-Business and Scavenger Traffic

You can configure the local circuit media or a combination of local and remote circuit media to completely avoid using LTE circuits for non-business critical traffic and scavenger traffic. (Scavenger traffic is a QoS category that includes suspect traffic that may be dangerous to the network.) To do this, when you configure an SD-WAN forwarding profile, you define the circuits to avoid.

This configuration is the same as that shown in the previous section. However, in the Avoid connections tab, you define the circuits to avoid. These circuits are never used even if the path is the only one that is available.

You then create an SD-WAN policy rule to classify the scavenger traffic and attach the rule to the forwarding policy that specifies to never use LTE.

Note that the forwarding profile configuration affects egress traffic decisions. For an effective implementation, you must apply this configuration uniformly across the network to prevent ingress traffic from arriving on the LTE link.

Configure LTE as a Backup for Internet Traffic

This section describes how to configure an LTE link to be a backup for local internet breakout (DIA) traffic. The scenarios in this section have either one wired link and one LTE link, or more than one wired link and one LTE link.

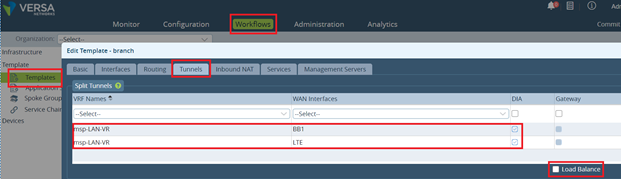

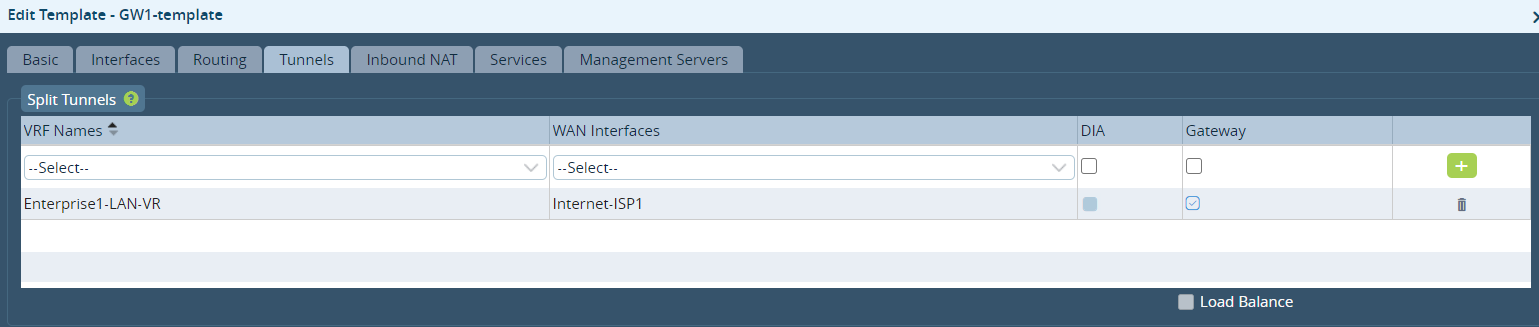

To use LTE as a hot standby for direct internet access (DIA) traffic, in the Workflows template, in the Tunnels tab, do not click the Load Balance option.

The Workflows template automatically creates two BGP sessions over a virtual interface pair that run between the WAN transport VR and the MSP LAN VR. The default route is advertised from each of the WAN transport VRs to the LAN VR. The default route from the BB1 transport VR has a BGP local preference value of 120 by default, and the default route from the LTE transport VR has a BGP local preference value of 119. Based on the local preference values, the LAN VR installs the default route from the BB1 transport VR as the active default route, thus making BB1 WAN link the primary WAN link for local internet-bound traffic.

To load-balance between two wired WAN links for DIA, while having an LTE link as a hot-standby backup, you enable DIA on all internet WAN and check the load balance knob. This configuration sets all WAN links to the same local preference.

Because you select the load balance option, all the default routes from the BB1, BB2, and LTE transport VRs are advertised to the LAN VR with a BGP local preference value of 120. The result is that traffic is load-balanced across all the three WAN links. To have the LTE link be the backup and be active only when the two wired WAN links are down, modify the BGP local preference of the default route advertised by the LTE VR to a value of 119 or lower.

To modify the local preference value:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Networking > Virtual Routers in the left menu bar, and select a virtual router instance. The Virtual Router popup window displays.

- Select the BGP tab in the left menu bar. The main pane displays a list of the BGP instances that are already configured.

- Select the BGP instance for the LTE link. The Edit BGP Instance popup window displays.

- Select the Peer/Group Policy tab. The Add Add Peer/Group Policy popup window displays.

- Select the Action tab, and change the local preference value to a value of 119 or lower.

Minimize the Sending of Management Traffic on LTE Links

By default, the VOS device randomly assigns an available link to provide connectivity to the Controller nodes and Versa Networks headend devices. This means that the LTE link could be used to send management traffic. Management traffic is all traffic towards the Controller and headend devices, including LEF logging information, which is sent to the Analytics node, or uploading software image files.

To prevent the unnecessary use of the LTE data plan, you configure management traffic so that the preferred links are the wired WAN links. To do this, you set the management priority of the LTE WAN links to a value that is lower than that of the wired WAN links. For management priorities, a value 0 indicates the highest priority and a value 15 is the lowest.

To set the management traffic priority to minimize the use of LTE links for management traffic:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Services

> SD-WAN > Site in the left menu bar. The following screen displays.

> SD-WAN > Site in the left menu bar. The following screen displays.

- Click the Edit icon in the main pane. The Edit Site popup window displays.

- In the WAN Interfaces table, select the LTE interface. The Edit WAN Interfaces popup window displays.

- In the Management Traffic > Priority field, enter a value of 15. Note that for management priorities, a value 0 indicates the highest priority and a value 15 is the lowest.

- Click OK.

Cold Standby Mode

In cold standby mode, you configure the LTE link in an administratively Down state. Only when all the primary wired WAN interfaces are down does the LTE link state change to Up.

The primary benefits of cold standby mode is that no data is used on the LTE link until all the primary WAN links are down.

The following are the limitations of cold standby mode:

- The VOS edge device does not know the actual status of the LTE connection until the primary WAN links go down and the VOS devices tries to bring up the LTE connection.

- The traffic failover is not instantaneous, because there is lag time while registering the SIM to the mobile network. Data transfer can begin only after the mobile data context is enabled on the SIM and the Versa Networks control plane is established on that path.

To configure LTE cold standby mode, you configure the LTE interface to be in an administratively Down state, and you configure an active wired interface that has an active monitor (an IP SLA monitor) to the active interface's next hop. When the IP SLA monitor determines that the next hop is no longer reachable, the LTE link is activated automatically and starts building neighbor sessions with all its peers in the transport domain.

To configure cold standby mode, you do the following:

- Create a monitor for the primary WAN circuit.

- Create monitor-group to check the status of the primary links.

- Associate the monitor group to the LTE interface as a standby.

To create a primary WAN interface:

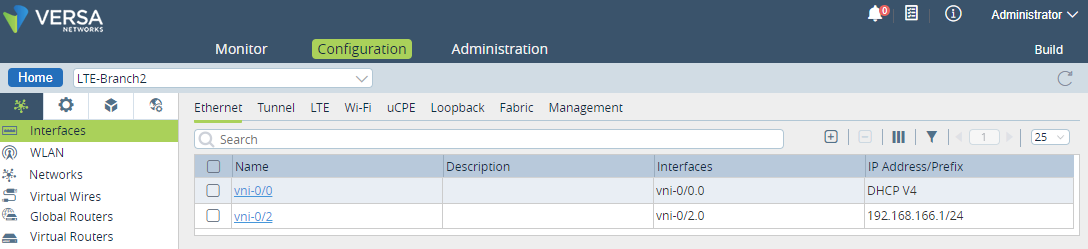

- In Director view, select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select a device in the main pane. In the following screenshot the device is LTE-Branch2.

- Select the Configuration tab in the top menu bar.

- Select Network

> Interfaces in the left menu bar.

> Interfaces in the left menu bar. - Click the

Add icon to create an interface. This example configures vni-0/00 as the primary link.

Add icon to create an interface. This example configures vni-0/00 as the primary link.

- Select Network

> IP SLA > Monitor in the left menu bar.

> IP SLA > Monitor in the left menu bar. - Click the

Add icon to create a monitor for the primary WAN interface. The Add IP SLA Monitor popup window displays. Enter information for the following fields.

Add icon to create a monitor for the primary WAN interface. The Add IP SLA Monitor popup window displays. Enter information for the following fields.

Field Description Name Enter a name for the IP SLA monitor object. This example uses the name Broadband-monitor. Interval Click, and enter the frequency, in seconds, at which to send ICMP packets to the IP address.

Range: 1 through 60 seconds

Default: 3 seconds

Threshold Enter the maximum number of ICMP packets to send to the IP address. If the IP address does not respond after this number of packets, the monitor object, and hence the IP address, is marked as down.

Range: 1 through 60

Default: 5

Monitor Type Select the type of packets sent to the IP address. The available options are DNS, ICMP, or TCP. Monitor Subtype Select the subtype:

- HA probe type—Select to avoid interchassis HA split brain. For more information, see Configure Interchassis HA.

- Layer 2 loopback type—Select to monitor an external service node configured as a Layer 2 loopback (virtual wire).

- No subtype—Do not use a monitor subtype. This is the default.

Default: No subtype

Source Interface Select the source interface on which to send the probe packets. This interface determines the routing instance through which to send the probe packets. This routing instance is the target routing instance for the probe packets. IP Address Enter the IP address to monitor. - Click OK.

To create a monitor group and add the monitor object:

- Continuing from the previous procedure, select Network

> IP SLA > Group in the left menu bar.

> IP SLA > Group in the left menu bar. - Click the

Add icon to create a monitor group. The Add IP SLA Monitor Group popup window displays. Enter information for the following fields.

Add icon to create a monitor group. The Add IP SLA Monitor Group popup window displays. Enter information for the following fields.

Field Description Name Enter a name for the IP SLA monitor group. This example uses the name Broadband-Group. Operation Select the boolean operation to perform on the monitors:

- AND—In an AND operation, the monitor group result is Up only if all monitors are Up. Otherwise, the monitor group result is Down.

- OR—In an OR operation, the monitor group result is Up if at least one of the monitors is Up. It is down only if all monitors are Down.

List of Monitors (Table) - Available

Displays the list of available monitors for this appliance. Select and click on the monitor that you want to add to the group, here, Broadband-monitor. - Selected

Displays the monitor that you added to the group. - Click OK.

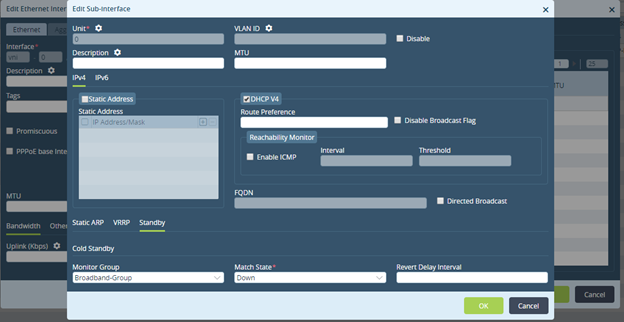

Next, you associate the monitor group (Broadband-Group) with an LTE interface as the standby option, with the match state configured as "down". To configure this use case scenario:

- Continuing from the previous procedure, select Network

> Interfaces in the left menu bar.

> Interfaces in the left menu bar. - Select the LTE link (vni-0/100) in the main pane. The Edit Ethernet Interface popup window displays.

- Select Subinterfaces

- Select the Standby tab and enter information for the following fields.

Field Description Monitor Group Select the monitor group, here, Broadband-Group. Match State Select "down."

- Click OK.

To verify the interface status:

- In Director view, select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the LTE-Branch2 device in the main pane. The view changes to Appliance view.

- Select the Monitor tab in the top menu bar. The main pane displays the monitor dashboard for the LTE-Branch2 device.

- Check the operational status of the branch's interfaces. If all the primary links are Up, the backup LTE interface should show a Down. status. If all the primary links are down, the backup LTE link status should show as up.

Comparison of LTE Hot Standby Mode and Cold Standby Mode

The following table compares hot standby mode and cold standby mode.

| Hot Standby Mode | Cold Standby Mode | |

|---|---|---|

| LTE link status | Up | Down |

| Egress data traffic | Only SLA traffic | None |

| Ingress data traffic | SLA traffic but possibly also data traffic depending on the remote device configuration | None |

| Traffic failover to standby mode | Instantaneous | Not Instantaneous |

| Alarm generated when LTE link is down | Yes | No |

| Can be used for traffic when primary path SLA's are violated | Yes | No |

| Can use LTE as standby for selective traffic classes | Yes | No |

Supported Software Information

Releases 20.2 and later support all content described in this article.

Additional Information

Configure IP SLA Monitor Objects

Configure LTE

Configure SD-WAN Traffic Steering

Configure Virtual Routers

SD-WAN Overlay Networks

![]() For supported software information, click here.

For supported software information, click here.

The Versa Networks SD-WAN is based on overlay tunnels, and all traffic traveling through the tunnels is encrypted. In the SD-WAN overlay design you need to consider the IP addressing scheme for the overlay network and whether you want all data traffic to follow the encrypted overlay.

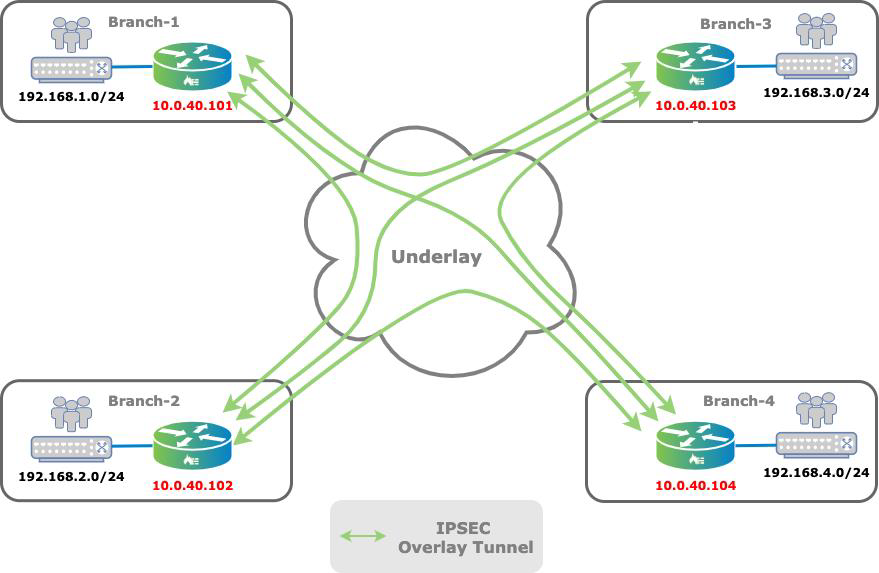

The following figure shows the topology of an SD-WAN overlay network, to illustrate the overlay and underlay networks. The network consists of a data center two single-homed remote branches that are managed by a Versa Networks headend, which consists of Director, Analytics and Controller node. All sites and the headend are connected to all the available transport networks.

Hubs and branches connect to the Controller node, which serves as an attachment point for the management plane and control plane. SD-WAN overlay topologies are built through the exchange of MP-BGP NLRI communities in combination with import and export policies, and they provide the flexibility to create multiple topologies, using Director Workflows, without any restrictions.

Overlay IP Addressing

The Versa Networks SD-WAN is based on overlay tunnels, which are used to abstract the underlay networks. By default, two overlay networks are built between the branches:

- Encrypted overlay, which uses an IPsec tunnel

- Plain-text overlay, which uses a VXLAN tunnel

For more information about the tunnels used for overlay networks, see Secure Control and Data Overlay Tunnel Solution.

The addresses for the SD-WAN overlay tunnels follow a specific overlay IP addressing scheme. In principle, the overlay network is routable in the SD-WAN control network, that is, between both the Controller nodes and the branches, and the control network southbound of the Director node (or northbound of the SD-WAN Controller node), as illustrated in the following figure.

However, in some deployments, you must choose what to include in the overlay IP addressing scheme when you are integration with an OSS/BSS. For example, the control network must have IP address reachability between VOS edge devices and services such as TACACS+, RADIUS, syslog collectors, and third-party VNF managers. For more information, see Configure the Overlay Addressing Scheme

The following are best practices for overlay IP addressing:

- Configure the overlay IP addressing method and pool when you initially set up the Versa Director. You cannot modify the method later. Ensure that you choose the correct method and that you allocate a large enough subnet to cover the expected size of the deployment.

- It is recommended that you use the “do not encode” option to optimizes the use of IP addressing space.

Encrypted and Clear-Text Overlay

By default, all data traffic follows the encrypted overlay, as illustrated in the following figure.

If you need to change the default tunnel used for data transport, you can do so in one of the following ways:

- Statically configuring encrypted or clear-text transmission of data per WAN interface

- Dynamically setting the transmission mode by configuring an SD-WAN policy

Note that if you configure both WAN interface static definition and SD-WAN policy, the SD-WAN policy takes precedence.

Define Per-Interface Encryption Statically

You can statically define the encryption method per WAN interface if the underlay is a private or secured circuit such as an MPLS service provided as part of a service provider Layer 3 VPN service. Traditionally, network administrators have considered these private Layer 3 VPNs as secured and did not typically encrypt data over it. In a similar manner, you can consider the MPLS Layer 3 VPN as secured, and so data can be transported using the clear-text tunnel.

A benefit of clear-text transport it that it does not use IPsec overhead on the platform

To configure static definition per WAN interface:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Services

> SD-WAN > Site in the left menu bar.

> SD-WAN > Site in the left menu bar. - In the Site pane, click the

Edit icon.

Edit icon. - In the WAN Interfaces tab, select a WAN interface. The Edit WAN Interfaces popup window displays.

- In the Encryption field, select the desired encryption for the interface:

- Always—Encrypt all traffic.

- Never—Do not encrypt traffic.

- Optional—Encryption is optional.

- Click OK.

For more information, see Configure Encryption on WAN Interfaces.

Use SD-WAN Policy To Dynamically Define Encryption

In some scenarios, you need to dynamically turn off encryption for some traffic, for example, for traffic that is already encrypted by an application (such as HTTPS or TLS/SSL secured application data) and traffic that is of no interest, from a security point of view, to the enterprise (such as recreational traffic from Facebook and YouTube). To turn off encryption for these types of traffic, you configure an SD-WAN forwarding profile, in which you set the desired encryption, and then you associate the forwarding with an SD-WAN policy that identifies the traffic to match.

To use SD-WAN policy to dynamically define encryption:

- In Appliance view, select the Configuration tab in the top menu bar.

- Select Services

> SD-WAN > Forwarding Profiles in the left menu bar.

> SD-WAN > Forwarding Profiles in the left menu bar. - Click the

Add icon. The Edit Forwarding Profile popup window displays.

Add icon. The Edit Forwarding Profile popup window displays.

- In the Encryption field, select the desired encryption.

For more information, see Configure SD-WAN Traffic-Steering.

Supported Software Information

Releases 20.2 and later support all content described in this article.

Additional Information

Configure Encryption on WAN Interfaces

Configure SD-WAN Traffic-Steering

SD-WAN Topologies

![]() For supported software information, click here.

For supported software information, click here.

The Versa Networks solution supports the following SD-WAN overlay topologies:

- Full mesh

- Hub and spoke

- Regional mesh

- Multi-VRF, or multitenancy

These topologies are established by using that well-known routing techniques that have been used for a long time in MPLS Layer 3 VPN networks. They use MP-BGP communities to achieve fine-grained route control and to provide flexible options for manipulating and fine-tuning routes. You can use Director Workflows to create these topologies, thus simplifying these complex configurations.

Full-Mesh Topology

You use a full-mesh topology for any-to-any communication. In this type of topology, branches communicate directly using overlay tunnels, and traffic does not need to transit through a hub or centralized site. The following figure illustrates a full-mesh topology.

A full-mesh topology is generally the preferred topology when branches must communicate directly with each other. Typically, you choose a full-mesh topology over a hub-and-spoke topology for voice applications, because a hub-and-spoke topology introduces delay when the hub is distant from the branches. Another instance in which a full-mesh topology is preferred over hub and spoke is a distributed security architecture, where policy enforcement is performed at the branch. Here, the full-mesh topology avoids the need to funnel traffic to hub sites for inspection.

In Director Workflows, the full-mesh topology is the default option.

In a full-mesh topology SLA monitoring probes are sent to every remote branch on every available transport. SLA monitoring probes are used to track reachability and to measure link metrics for each access circuit towards any given remote site. You can use SLA optimization features such as adaptive SLA and data-driven SLA to optimize the SLA load in large deployments.To verify SLA monitoring status, issue the following CLI command:

admin@Branch-1-cli> show orgs org Tenant1 sd-wan sla-monitor status

LOCAL REMOTE

WAN WAN

PATH FWD LOCAL REMOTE LINK LINK ADAPTIVE DAMP DAMP CONN LAST

SITE NAME HANDLE CLASS WAN LINK WAN LINK ID ID MONITORING STATE FLAPS STATE FLAPS FLAPPED

------------------------------------------------------------------------------------------------------------------------

Branch-2 6689028 fc_ef MPLS MPLS 1 1 active disable 0 up 1 00:06:26

6693380 fc_ef Internet Internet 2 2 active disable 0 up 1 00:06:26

Branch-3 6754564 fc_ef MPLS MPLS 1 1 active disable 0 up 1 00:06:32

6758916 fc_ef Internet Internet 2 2 active disable 0 up 1 00:06:31

Branch-4 6820100 fc_ef MPLS MPLS 1 1 active disable 0 up 2 00:05:45

6824452 fc_ef Internet Internet 2 2 active disable 0 up 2 00:05:44

Controller-1 69888 fc_nc MPLS MPLS 1 1 disable disable 0 up 1 00:16:38

74240 fc_nc Internet Internet 2 2 disable disable 0 up 1 00:16:38

The output above shows the SLA monitoring view from Branch-1, which has internet and MPLS transports towards all branches and towards the Controller node.

In a full-mesh topology, you must determine the proper scaling of the maximum number of branches. To dimension the deployment, you must consider many variables, including the following:

- Number of WAN links

- Number of tenants

- Forwarding classes being monitored

- SLA monitor interval

- Branch hardware

- Bandwidth to the branch

For example, in a full-mesh topology with 1000 branches that have one tenant per site and two WAN links in different transport domains, if you use the Versa Operating SystemTM (VOSTM) device default SLA monitoring configuration, the SLA probe traffic consumes 6.25 Mbps of bandwidth at each site. Increasing the number of branch CPE devices increases both the bandwidth usage and the CPU overhead to perform SLA monitoring. You can limit the SLA monitoring traffic to lower link utilization, for instance, on high-cost links such as LTE connections. For more information, see Configure SLA Monitoring for SD-WAN Traffic Steering.

In a full-mesh topology, there is direct reachability to prefixes in remote branches. Traffic is routed to those prefixes using the next hop of the remote branch loopback (TVI) interfaces. If there is an underlay cut or the SLA probing cannot declare the remote branch to be reachable, the SLA monitoring session is down and therefore the next hop is not reachable. The result is that this prefix is withdrawn from the routing table and thus it is not displayed in the output of the show route command. Note that if the route is not directly reachable, perhaps because it is reachable through a hub, the route has the interface name "indirect." The route table below illustrates that for the Branch-1 VRF, three of the are indirect routes.

admin@Branch-1-cli> show route routing-instance Tenant1-LAN-VR Routes for Routing instance : Tenant1-LAN-VR AFI: ipv4 SAFI: unicast Codes: E1 - OSPF external type 1, E2 - OSPF external type 2 IA - inter area, iA - intra area, L1 - IS-IS level-1, L2 - IS-IS level-2 N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2 RTI - Learnt from another routing-instance + - Active Route Prot Type Dest Address/Mask Next-hop Age Interface name ---- ---- ----------------- -------- --- -------------- BGP N/A +0.0.0.0/0 169.254.0.2 1w6d20h tvi-0/603.0 conn N/A +169.254.0.2/31 0.0.0.0 1w6d20h tvi-0/603.0 local N/A +169.254.0.3/32 0.0.0.0 1w6d20h directly connected conn N/A +192.168.1.0/24 0.0.0.0 1w6d20h vni-0/2.0 local N/A +192.168.1.1/32 0.0.0.0 1w6d20h directly connected BGP N/A +192.168.2.0/24 10.0.40.102 1w6d20h Indirect BGP N/A +192.168.3.0/24 10.0.40.103 1w6d20h Indirect BGP N/A +192.168.4.0/24 10.0.40.104 00:05:58 Indirect

Hub-and-Spoke Topology

The Versa Networks SD-WAN solution supports different types of hub-and-spoke topologies:

- Spoke to hub only

- Spoke to spoke through a hub (spoke to spoke through another SD-WAN edge device)

- Spoke to spoke direct

- Spoke-hub-hub-spoke

Spoke-to-Hub Only

In a spoke-to-hub-only topology, the only prefixes advertised, by default, are hub routes, and spokes routes are not re-advertised by the hub branch. You use this topology when spokes do not have to communicate with each other. A good example is a network of ATM cash machines in which devices communicate exclusively with resources in the customer data center. The following figures shows that spoke prefixes are accepted only by the hub and that they are rejected by other spokes based on the BGP community configuration.

The following CLI output shows that the spoke Branch-1 VRF route table contains routes only from the hub:

admin@Branch-1-cli> show route routing-instance Tenant1-LAN-VR Routes for Routing instance : Tenant1-LAN-VR AFI: ipv4 SAFI: unicast Codes: E1 - OSPF external type 1, E2 - OSPF external type 2 IA - inter area, iA - intra area, L1 - IS-IS level-1, L2 - IS-IS level-2 N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2 RTI - Learnt from another routing-instance + - Active Route Prot Type Dest Address/Mask Next-hop Age Interface name ---- ---- ----------------- -------- --- -------------- conn N/A +192.168.1.0/24 0.0.0.0 2d20h04m vni-0/2.0 local N/A +192.168.1.1/32 0.0.0.0 2d20h04m directly connected BGP N/A +192.168.10.0/24 10.0.40.110 2d20h04m Indirect BGP N/A 192.168.10.0/24 10.0.40.111 2d20h04m Indirect

The route table on spoke Branch-1 shows only destinations behind hubs, again with Hub-1 being preferred, and the table shows no spokes routes. The following output shows the prefixes advertised by spoke Branch-1:

admin@Branch-1-cli> show route table l3vpn.ipv4.unicast advertising-protocol bgp

Routes for Routing instance : Tenant1-Control-VR AFI: ipv4 SAFI: unicast

Routing entry for 192.168.1.0/24

Peer Address : 10.0.40.1

Route Distinguisher : 2L:2

Next-hop : 10.0.40.101

VPN Label : 24704

Local Preference : 110

A S Path : N/A

Origin : Igp

MED : 0

Community : [ 8000:2 8001:110 8002:111 ]

Extended community : [ target:2L:2 ]

You use BGP import policies to filter spoke routes. For example, using spoke community 8000:2 filters out spoke routes on hubs in the LAN-VR-Export VR, and these routes are not advertised back to the spokes. Therefore, the hub route tables contains all spoke prefixes:

admin@Hub-1-cli> show route routing-instance Tenant1-LAN-VR Routes for Routing instance : Tenant1-LAN-VR AFI: ipv4 SAFI: unicast Codes: E1 - OSPF external type 1, E2 - OSPF external type 2 IA - inter area, iA - intra area, L1 - IS-IS level-1, L2 - IS-IS level-2 N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2 RTI - Learnt from another routing-instance + - Active Route Prot Type Dest Address/Mask Next-hop Age Interface name ---- ---- ----------------- -------- --- -------------- BGP N/A +192.168.1.0/24 10.0.40.101 00:21:24 Indirect BGP N/A +192.168.2.0/24 10.0.40.102 00:21:27 Indirect BGP N/A +192.168.3.0/24 10.0.40.103 00:21:23 Indirect BGP N/A +192.168.4.0/24 10.0.40.104 00:21:26 Indirect BGP N/A 192.168.10.0/24 10.0.40.111 00:36:45 Indirect conn N/A +192.168.10.0/24 0.0.0.0 00:51:13 vni-0/2.0 local N/A +192.168.10.1/32 0.0.0.0 00:51:13 directly connected

On hubs, all spokes prefixes are installed in the corresponding VRF. You can implement redistribution policy on hubs to perform route summarization or to generate default a static route, for instance, to attract traffic from spokes.

Spoke-to-Spoke via Hub (Spoke-to-Spoke Through Another SD-WAN Edge Device)

In a spoke-to-spoke via hub topology (spoke-to-spoke through another SD-WAN edge device), spoke sites are connected to each other through hub site. The data path between two spokes travels through the hub. The following figure illustrates this topology.

You can use the spoke-to-spoke through a hub topology when communication between branches is not required, for example, when security and other services are centralized at the hub site or when the cost of ownership of WAN links dictates it.

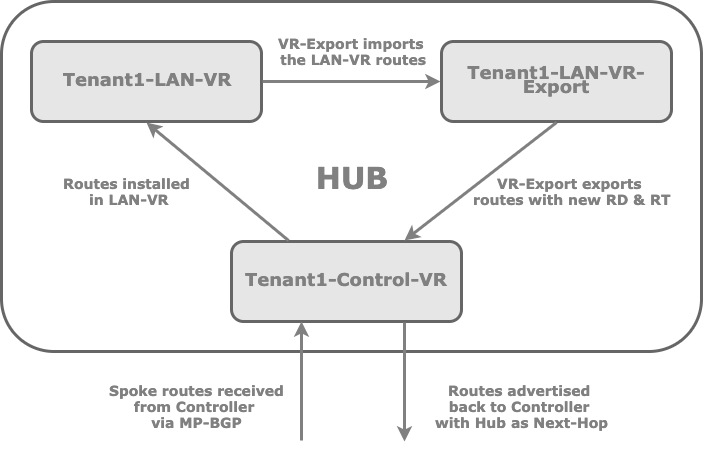

The following figure shows the method that hub sites use manipulate VRF spoke routes. With this method, you change the route distinguisher on the hub for a set of VRF routes that you are advertising so that the Controller nodes can separate the routes and accept them during BGP route selection. The Controller nodes have the original routes from the spokes and the spoke routes advertised by the hubs, and they reflects them to the branches. You use route-target filtering on the spokes to perform the remainder of the route selection. With route-target filter, you import the hub-advertised spoke routes, and the Controller nodes use these routes to select the hub as the next hop towards the remote sites.

In a spoke-to-spoke via hub topology, the branches communicate only through the hub. The IP prefixes of remote branches always have the hub as the next hop.

SLA monitoring is active only on paths towards hub sites and Controller nodes, and spoke sites are not monitored. This reduces the amount of SLA probe traffic compared to a full-mesh topology and addresses the concerns of scalability in deployments that have a large number of branches.

The following CLI output shows the SLA monitoring view on Branch-1:

admin@Branch-1-cli> show orgs org Tenant1 sd-wan sla-monitor status

LOCAL REMOTE

WAN WAN

PATH FWD LOCAL REMOTE LINK LINK ADAPTIVE DAMP DAMP CONN LAST

SITE NAME HANDLE CLASS WAN LINK WAN LINK ID ID MONITORING STATE FLAPS STATE FLAPS FLAPPED

-----------------------------------------------------------------------------------------------------------------------------------------

Controller-1 69888 fc_nc MPLS MPLS 1 1 disable disable 0 up 1 3d01h39m

74240 fc_nc Internet Internet 2 2 disable disable 0 up 1 3d01h39m

Hub-1 7213316 fc_ef MPLS MPLS 1 1 suspend disable 0 up 1 2d21h02m

7217668 fc_ef Internet Internet 2 2 suspend disable 0 up 1 2d21h02m

Hub-2 7278852 fc_ef MPLS MPLS 1 1 suspend disable 0 up 1 2d21h00m

7283204 fc_ef Internet Internet 2 2 suspend disable 0 up 1 2d21h00m

Because the hub nodes re-advertise the spoke branch prefixes, the spoke branches learn all the spoke prefixes. The next-hop IP address for the spoke branches is the hub's loopback TVI address.

The following output from the Branch-1 VRF routes table shows a deployment with two hubs. The hub that you configure with a higher priority is the one that maintains the active route

admin@Branch-1-cli> show route routing-instance Tenant1-LAN-VR Routes for Routing instance : Tenant1-LAN-VR AFI: ipv4 SAFI: unicast Codes: E1 - OSPF external type 1, E2 - OSPF external type 2 IA - inter area, iA - intra area, L1 - IS-IS level-1, L2 - IS-IS level-2 N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2 RTI - Learnt from another routing-instance + - Active Route Prot Type Dest Address/Mask Next-hop Age Interface name ---- ---- ----------------- -------- --- -------------- conn N/A +192.168.1.0/24 0.0.0.0 1d23h16m vni-0/2.0 local N/A +192.168.1.1/32 0.0.0.0 1d23h16m directly connected BGP N/A +192.168.2.0/24 10.0.40.110 03:26:28 Indirect BGP N/A 192.168.2.0/24 10.0.40.111 03:26:28 Indirect BGP N/A +192.168.3.0/24 10.0.40.110 1d23h16m Indirect BGP N/A 192.168.3.0/24 10.0.40.111 1d23h16m Indirect BGP N/A +192.168.4.0/24 10.0.40.110 1d23h16m Indirect BGP N/A 192.168.4.0/24 10.0.40.111 1d23h16m Indirect BGP N/A +192.168.10.0/24 10.0.40.110 1d23h16m Indirect BGP N/A 192.168.10.0/24 10.0.40.111 1d23h16m Indirect

The following CLI output shows an example of the spoke routes advertised by Branch-1:

admin@Branch-1-cli> show route table l3vpn.ipv4.unicast advertising-protocol bgp

Routes for Routing instance : Tenant1-Control-VR AFI: ipv4 SAFI: unicast

Routing entry for 192.168.1.0/24

Peer Address : 10.0.40.1

Route Distinguisher : 2L:2

Next-hop : 10.0.40.101

VPN Label : 24704

Local Preference : 110

AS Path : N/A

Origin : Igp

MED : 0

Community : [ 8000:1 8001:110 8002:111 ]

Extended community : [ target:2L:2 ]

The community string 8000:1 marks the spokes routes so that the BGP import policy on the spokes can identify them, and in this case, it rejects routes starting with this community string. The following CLI output is an example of a spoke route advertised by Hub-1:

admin@Hub-1-cli> show route table l3vpn.ipv4.unicast advertising-protocol bgp

Routing entry for 192.168.2.0/24

Peer Address : 10.0.40.1

Route Distinguisher : 16002L:110

Next-hop : 10.0.40.110

VPN Label : 24705

Local Preference : 100

AS Path : N/A

Origin : Incomplete

MED : 0

Community : [ 8000:0 8000:1 8001:110 8002:111 8009:8009 ]

Extended community : [ target:16002L:0 target:16002L:110 ]

The community string 8000:0 marks the spokes routes advertised by hubs so that the BGP import policy can identify them, and in this case, it accepts these routes, which have the hub as the next hop.

The community string 8009:8010, which you can see in the diagram above for Hub-1 (192.168.10.0/24), marks the direct LAN route from hubs, which the BGP import policy also accepts.

In this topology, Hub-1 is configured with a higher priority than Hub-2. This configuration explains why there are two entries in the route table for each prefix and why Hub-1 is the preferred next hop. Having two hubs provides redundancy, because Hub-2 is used when Hub-1 is not reachable.

The BGP import policy uses the extended-community attribute to accept routes from the hubs and to set a higher local preference for Hub-1. The extended target string 16002L:110 is derived from site ID 110, which is Hub-1.

Spoke-to-Spoke Direct and Partial Mesh

In a partial-mesh topology, some nodes are directly attached to each other, while other nodes are attached only to one or two nodes. You can select this topology when there are geographically dispersed sites in the same region that you want to communicate directly with each other, and when you want inter-regional traffic to transit through hub branches or when there is a high level of traffic exchanged between specific sites. The following figure illustrates a partial-mesh topology.

For a spoke-to-spoke–direct topology, you use spoke groups. Branches within the same spoke group can communicate directly with each other, and they use hubs to reach branches in different spoke groups. In the topology shown above, Branch-1 and Branch-2 communicate with each other directly, but to reach Branch-3 the next hop is Hub-1. The hubs are connected using a full-mesh topology.

It is recommended that you deploy a spoke-to-spoke–direct topology whenever feasible, so that you can use spoke groups to provide redundancy and flexible meshing of branches.

The following CLI output shows the SLA monitoring view on Branch-1:

admin@Branch-1-cli> show orgs org Tenant1 sd-wan sla-monitor status

LOCAL REMOTE

WAN WAN

PATH FWD LOCAL REMOTE LINK LINK ADAPTIVE DAMP DAMP CONN LAST

SITE NAME HANDLE CLASS WAN LINK WAN LINK ID ID MONITORING STATE FLAPS STATE FLAPS FLAPPED

------------------------------------------------------------------------------------------------------------------

Branch-2 6689028 fc_ef MPLS MPLS 1 1 active disable 0 up 1 00:04:59

6693380 fc_ef Internet Internet 2 2 active disable 0 up 1 00:04:59

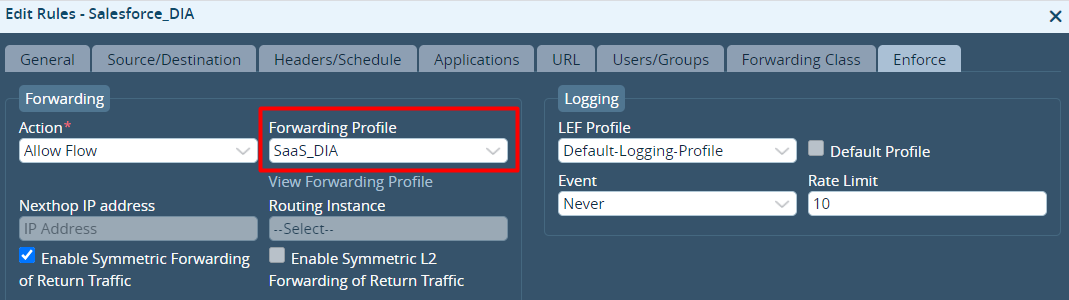

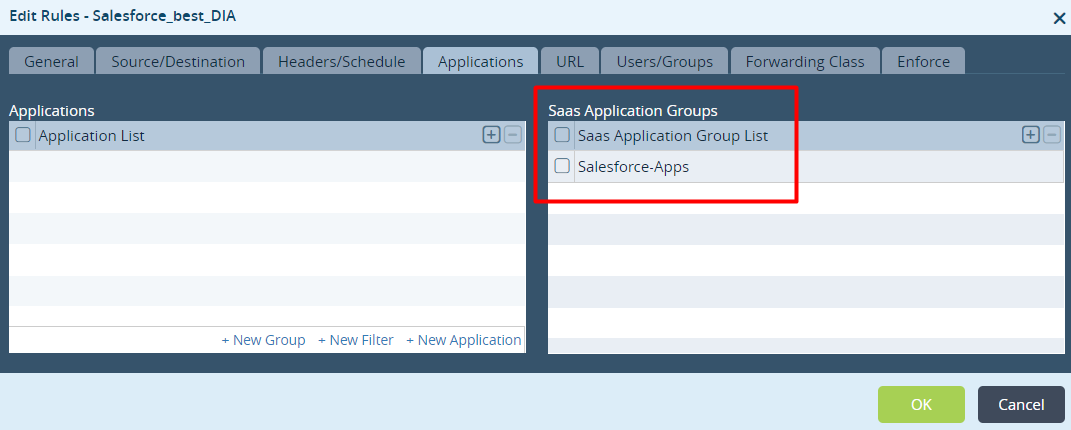

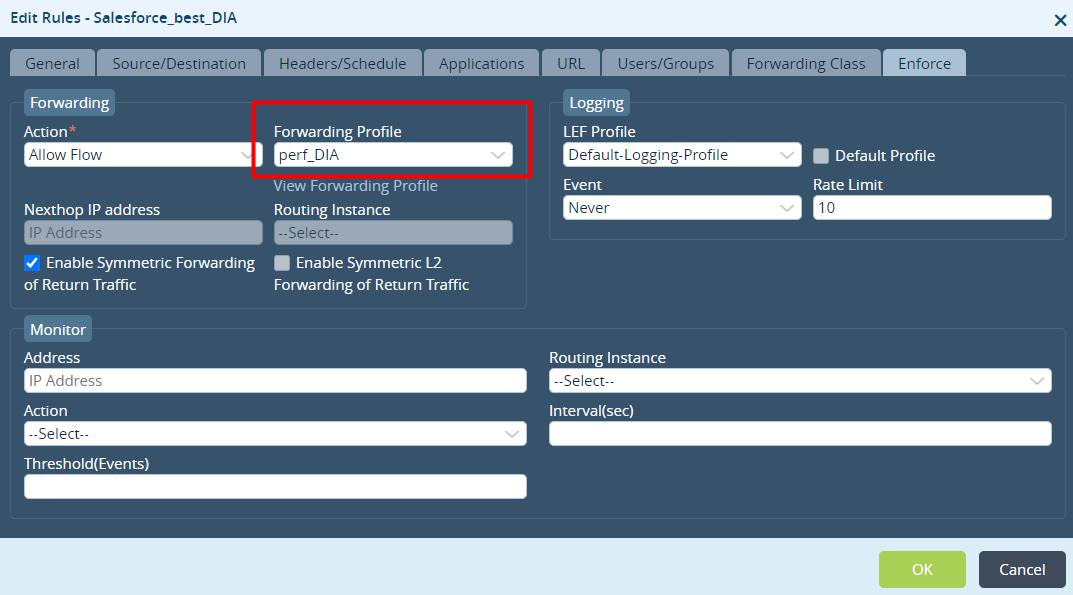

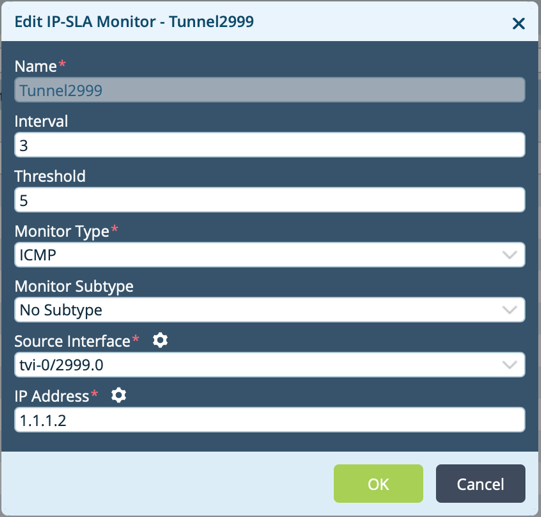

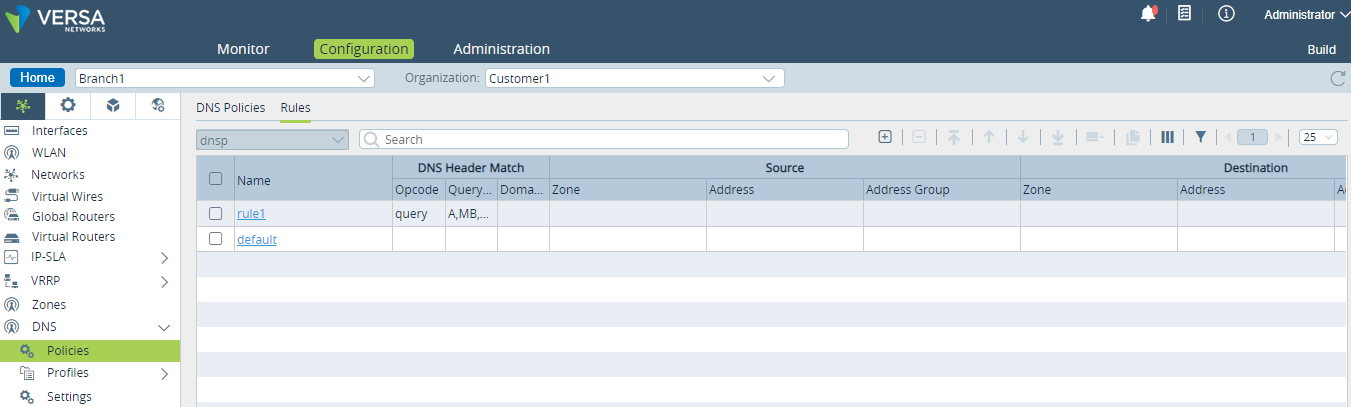

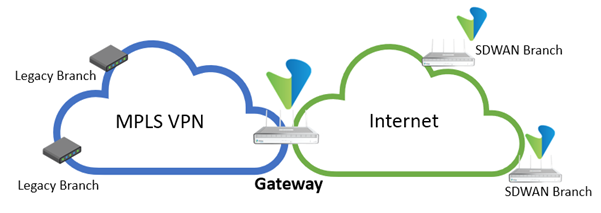

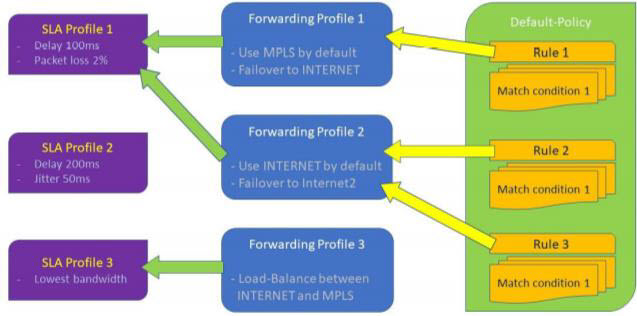

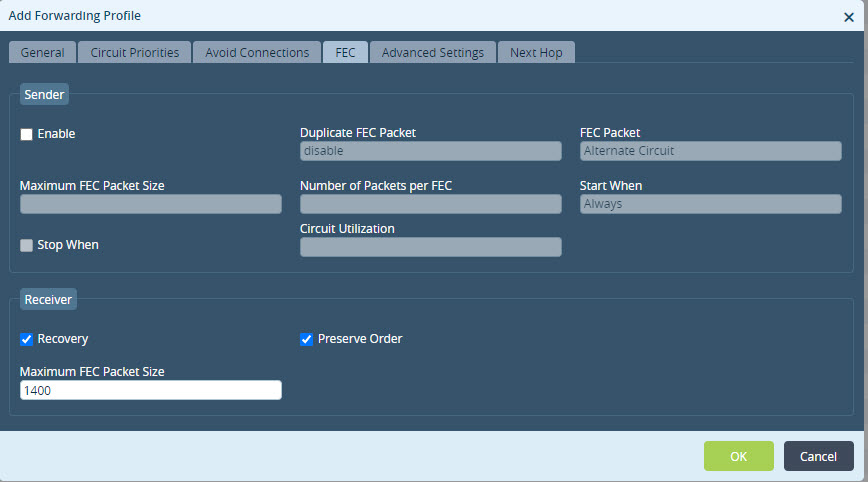

Controller-1 69888 fc_nc MPLS MPLS 1 1 disable disable 0 up 5 1d22h41m