Configure a Secondary Cluster for Log Collection

For supported software information, click here.

Versa Operating System™ (VOS™) log export functionality (LEF) exports Analytics logs from VOS devices to remote systems. These systems can be either nodes in a Versa Analytics cluster or nodes in a third-party cluster. The cluster, normally located in a primary data center, is called the primary cluster. For redundancy, you can configure an additional cluster, called a secondary cluster, in either active–backup or active–active mode with the primary cluster. For active–backup clusters, VOS devices send logs to the primary cluster and send logs to the secondary (backup) cluster only when the primary cluster goes down. For active–active clusters, VOS devices always send logs to both the primary and secondary clusters.

This article describes the configuration process and outlines the procedures for configuring active–backup and active–active clusters. This article also gives an example for both the active–backup and active–active cluster configurations.

The discussion in this article assumes you are familiar with the following terms:

- Versa Analytics cluster, Analytics log collector node, and local collector

- Versa Application Delivery Controller (ADC), ADC service, and ADC service tuple

- LEF, LEF collector, LEF collector group, and LEF collector group list

For descriptions of these terms, see Versa Analytics Configuration Concepts.

For Releases 22.1.1 and later, when you are configuring workflow templates, you can identify Analytics clusters as active–backup or active–active pairs, called Analytics cluster groups. If you do this, you do not need to perform the configuration detailed in the procedures in the Configure Active–Backup Clusters and Configure Active–Active Clusters sections of this article, because they are automatically included in the templates for branch devices.

Active–Backup Clusters

For active–backup clusters, during normal operation, VOS devices send logs to the primary cluster. If the primary cluster goes down, VOS devices automatically send logs to the secondary cluster. This means that during normal operation, the secondary cluster receives no logs, but when the primary cluster is not available, it becomes active and starts receiving logs.

When the primary cluster fails, the application delivery controller (ADC) load balancer on the Controller nodes redirects the log connections from the primary cluster to the secondary cluster. When the primary data center comes back up, the ADC switches the log connections back to the primary cluster.

For Versa Analytics clusters, during the primary cluster failure, the secondary cluster can process logs into its Analytics datastores or it can just perform the log collection function. When the primary cluster comes back up, you can run scripts on the secondary cluster to copy archived logs to the primary cluster, and you can run scripts on the primary cluster to restore archived logs. For more information, see Manage Versa Analytics Log Archives. You can copy archived logs between clusters and restore the copied logs using the cluster synchronization tool.

The following figure shows a simplified topology to illustrate the components of active–backup clusters. The topology contains one branch VOS device with one organization, named Tenant1, one Controller node, and two Versa Analytics clusters. The Tenant1 organization has one collector, named Log Collector 1, which uses as its destination the ADC service tuple of an ADC service configured on the Controller node. The ADC service has a default pool containing the nodes in a primary Analytics cluster and a backup pool containing the nodes in a secondary Analytics cluster. Under normal operations, the ADC service maps log connections, called LEF connections, to nodes in the default pool. If all the nodes in the primary cluster fail, the ADC service maps LEF connections to the nodes in the backup pool instead. For simplicity, the figure does not show a second Controller node.
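The pool selection described above can be sketched in a few lines of Python. This is a simplified model, not the ADC implementation: the `Pool` class and `select_pool` function are hypothetical names, node health is a plain boolean, and the gradual switchback of existing connections is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    """Hypothetical stand-in for an ADC server pool: node name -> is the node up?"""
    name: str
    nodes: dict = field(default_factory=dict)

    def available(self) -> bool:
        # The pool is usable as long as at least one member node is up.
        return any(self.nodes.values())


def select_pool(default_pool: Pool, backup_pool: Pool):
    """Return the pool that new LEF connections map to, or None if both are down."""
    if default_pool.available():
        return default_pool
    if backup_pool.available():
        return backup_pool
    return None


primary = Pool("Primary-Cluster-Pool", {"Primary-Node-1": True, "Primary-Node-2": True})
backup = Pool("Backup-Cluster-Pool", {"Backup-Node-1": True, "Backup-Node-2": True})

normal = select_pool(primary, backup).name

# One primary node failing does not trigger failover.
primary.nodes["Primary-Node-1"] = False
partial = select_pool(primary, backup).name

# All primary nodes failing moves new connections to the backup pool.
primary.nodes["Primary-Node-2"] = False
failed = select_pool(primary, backup).name

# When the primary cluster recovers, new connections map to it again.
primary.nodes["Primary-Node-1"] = True
recovered = select_pool(primary, backup).name

print(normal, partial, failed, recovered)
```

The sketch only fails over when every node in the default pool is down, which mirrors the condition stated in the paragraph above.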

Configure Active–Backup Clusters

Before configuring active–backup clusters, you must perform initial configuration on the primary (active) and secondary (backup) clusters. For more information, see Set Up Analytics in Perform Initial Software Configuration.

To configure a primary and secondary cluster in active–backup mode, you do the following:

- Ensure that the primary and secondary clusters are configured to accept logs.

- Configure the ADC on Controller 1.

- Configure the ADC on Controller 2.

- Configure LEF profiles for organizations on VOS devices.

- Associate the LEF profiles with services or traffic monitoring policy for the organizations on the VOS device.

To ensure that the primary and secondary clusters are configured to accept logs:

- If the primary or secondary cluster is a third-party cluster, configure it as recommended by the third-party vendor.

- If the primary cluster is a Versa Analytics cluster, no additional configuration is required after you perform the initial software configuration.

- If the secondary cluster is a Versa Analytics cluster, do one of the following to configure how the cluster handles log messages:

- To have the cluster process logs into datastores, ensure that the local collectors on the Analytics log collector nodes store incoming logs in the /var/tmp/log directory. This is the default directory that is set when you configure Analytics log collector nodes during initial software configuration. To display local collector information for a node in an Analytics cluster from the Director GUI:

1. In Director view, select the Analytics tab.

2. In the drop-down menu, select any node from the Analytics cluster.

3. Select Administration > Configurations > Log Collector Exporter in the left menu bar.

4. Select a cluster node in the Drivers hosts drop-down.

5. Select the Local Collector tab to display the local collectors configured on the cluster node.

- To have the cluster only collect logs but not process them into datastores, configure the local collectors on the Analytics log collector nodes to store incoming logs in a directory that is not /var/tmp/log. The backup cluster then stores the logs but does not process them into datastores. For more information, see Modify or Add a Local Collector in Configure Log Collectors and Log Exporter Rules.

To configure the ADC on Controller 1:

- Ensure that the ADC is enabled for the provider organization on the Controller node.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the Controller node on which you want to configure the ADC in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar. The following window displays.

- If the ADC menu item does not display in the left menu bar, the ADC is not enabled for the provider organization. To enable the ADC, see Enable an ADC in Configure an Application Delivery Controller.


- Configure an ADC server for each node in the primary and secondary clusters.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the Controller node on which you want to configure the ADC in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar.

- Select ADC > Local Load Balancer > Server in the left menu bar.

- Click the + Add icon. The Add Server popup window displays. Enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the ADC server. |
| Type | Select the protocol to use for the connection between the ADC and the receiving node: Any, TCP, or UDP. |
| IP Address | Enter the IP address of the receiving node. For Analytics log collector nodes, this is normally the southbound IP address. |
| Port | Enter the port number on which the receiving node listens for connections. |
| Routing Instance | Select the routing instance. |

- Click OK.

- Repeat Steps 2f and 2g for the remaining nodes in the primary and secondary clusters.


- Configure an ADC server pool for the ADC servers in the primary cluster.

- Select ADC > Local Load Balancer > Server Pools in the left menu bar.

- Click the + Add icon. The Add Server Pool popup window displays. Enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the server pool. |
| Type | Select the protocol to use for the connection: Any, TCP, or UDP. |
| Member (Table) | Select the servers to add to the ADC server pool. |
| Member: Name | Click the drop-down menu, then select the ADC server. |
| Member: Add icon | Click the Add icon to add the ADC server to the pool. The Member table displays the new member. |

- Click OK.

- Repeat Steps 3a through 3c to configure ADC server pools for the ADC servers in the secondary cluster.

- Configure an ADC Service.

- Select Services > ADC > Local Load Balancer > Virtual Service in the left menu bar.

- Click the + Add icon. The Add Virtual Service popup window displays.

- Select the General tab and enter information for the following fields.

Field Description Name Enter a name for the ADC service. Type Select the protocol to use for the connection between the ADC service and the VOS devices:

- Any

- TCP

- UDP

IP Address Enter the IP address of the ADC service.

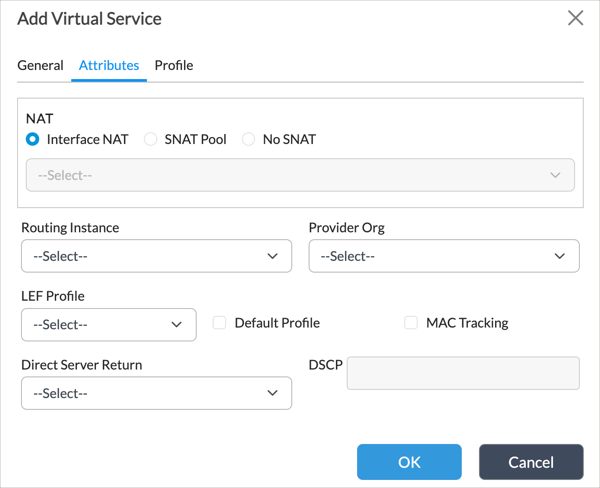

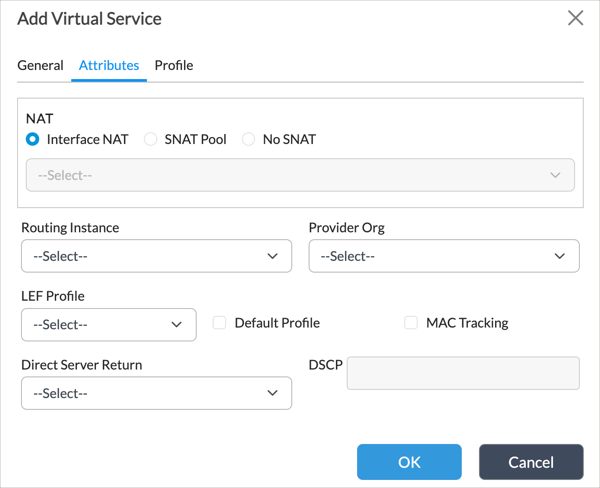

Port Enter the port number of the ADC service. Default Pool Select the default server pool to which to distribute new connections. Use the first server pool you configured in Step 3, which is the pool for the primary cluster. Backup Pool Select the backup server pool to which to distribute new connections when the default pool is unavailable. Use the server pool you configured in Step 4, which is the pool for the secondary cluster. Fallback to Active Click so that when the default pool comes back up, all the connections from the backup pool gradually switch back to the default pool. - Select the Attributes tab. In the Routing Instance field, select a routing instance.

- Click OK.

To configure the ADC on Controller 2, configure the ADC as described for Controller 1, but use a different ADC service tuple.

To configure a LEF profile for an organization on a VOS device:

- Configure a collector to send logs to the ADC service on Controller 1. Use the ADC service tuple of the ADC service you configured on Controller 1. For more information, see Configure a Collector in Configure Log Export Functionality.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the VOS device on which you want to configure the LEF profile in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Objects & Connectors > Connectors > Reporting > Logging Export Function in the left menu bar.

- Select the organization in the horizontal menu bar.

- Select the Collectors tab in the horizontal menu bar and then click the + Add icon. The Add Collector popup window displays. Enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the collector. |
| Transport | Select the protocol used by the ADC service tuple: Any, TCP, or UDP. |
| Destination (Group of Fields): IP Address | Enter the IP address of the ADC service tuple. |
| Destination (Group of Fields): Port | Enter the port of the ADC service tuple. |
| Routing Instance | Select the routing instance for the collector. This should match the routing instance of the ADC service. |

- Click OK.


- Repeat Steps 1a through 1f to configure a second collector, but use the ADC service tuple of the ADC service you configured on Controller 2.

- Configure a collector group containing the collectors you configured in Steps 1 and 2. For more information, see Configure a Collector Group in Configure Log Export Functionality.

- Select the Collector Groups tab in the horizontal menu bar, then click the + Add icon. In the Add Collector Group popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the collector group. |
| Collectors (Table): + Add icon | Click the + Add icon, and then select a collector to add it to the collector group. |

- Click OK.

- Configure a LEF profile. For more information, see Configure a Log Export Profile in Configure Log Export Functionality.

- Select the Profiles tab in the horizontal menu bar, then click the + Add icon. In the Add Profile popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the LEF profile. |
| Collector Group | Click, and then select the collector group you configured in Step 3. |

- Click OK.

When configuring services or the traffic monitoring policy for the organization on the VOS device, associate the LEF profile with the service or traffic monitoring policy.

Example: Configure Active–Backup Analytics Clusters

The following example demonstrates how to configure Versa Analytics clusters in active–backup mode. The example configures two Controller nodes and a tenant on a branch VOS device to access two Analytics clusters.

This example has the following components, which are illustrated in the figure below:

- One branch VOS device, with one organization (tenant), named Tenant1.

- LEF profile named Default-Logging-Profile

- The LEF profile contains a collector group named Default-Collector-Group.

- The collector group contains two collectors named Collector-To-C1 and Collector-To-C2.

- The destination of Collector-To-C1 is the ADC service tuple of C1-ADC-Service.

- The destination of Collector-To-C2 is the ADC service tuple of C2-ADC-Service.

- ADC service named C1-ADC-Service on Controller 1.

- The ADC service uses both a default pool and a backup pool to map LEF connections.

- The default pool maps LEF connections to local collectors in the primary Analytics cluster.

- The backup pool maps to local collectors in the secondary Analytics cluster.

- The ADC service uses an ADC service tuple of IP address 10.0.0.0, protocol any, and port 1234.

- ADC service named C2-ADC-Service on Controller 2.

- The ADC service uses both a default pool and a backup pool to map LEF connections.

- The default pool maps LEF connections to local collectors in the primary Analytics cluster.

- The backup pool maps to local collectors in the secondary Analytics cluster.

- The ADC service uses an ADC service tuple of IP address 10.0.0.4, protocol any, and port 1234.

- Primary and secondary Analytics clusters.

- The primary cluster contains two Analytics log collector nodes. The first node is configured with a local collector at IP address 192.168.95.2 at TCP port 1234, and the second node is configured with a local collector at IP address 192.168.95.3 at TCP port 1234.

- The secondary cluster contains two Analytics log collector nodes. The first node is configured with a local collector at IP address 192.168.96.2 at TCP port 1234, and the second node is configured with a local collector at IP address 192.168.96.3 at TCP port 1234.

To configure the primary and secondary clusters in active–backup mode, you configure two Controller nodes (Controller 1 and Controller 2) and a tenant (Tenant1) on the branch VOS device to access two Analytics clusters.
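Before working through the procedure, it can help to check that each collector destination lines up with the ADC service tuple it is meant to reach. The following Python sketch does that for the values listed above. The dictionary layout and the `collector_matches` helper are illustrative only, and the rule that a service of type any accepts a TCP collector is an assumption made for this sketch.

```python
# Values copied from the example components; the data layout is illustrative.
adc_services = {
    "C1-ADC-Service": {"controller": "Controller 1", "ip": "10.0.0.0", "port": 1234, "type": "any"},
    "C2-ADC-Service": {"controller": "Controller 2", "ip": "10.0.0.4", "port": 1234, "type": "any"},
}

collectors = {
    "Collector-To-C1": {"ip": "10.0.0.0", "port": 1234, "transport": "tcp", "service": "C1-ADC-Service"},
    "Collector-To-C2": {"ip": "10.0.0.4", "port": 1234, "transport": "tcp", "service": "C2-ADC-Service"},
}


def collector_matches(collector, service):
    """Check that a collector's destination equals the ADC service tuple."""
    same_endpoint = (collector["ip"], collector["port"]) == (service["ip"], service["port"])
    # Assumption: a service of type "any" accepts either transport.
    protocol_ok = service["type"] in ("any", collector["transport"])
    return same_endpoint and protocol_ok


mismatches = [
    name
    for name, collector in collectors.items()
    if not collector_matches(collector, adc_services[collector["service"]])
]
print(mismatches)
```

An empty list means every collector points at the tuple of its intended ADC service.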

Configure Controller 1

- Configure the first ADC server:

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the Controller node on which you want to configure the ADC in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar.

- Select ADC > Local Load Balancer > Server in the left menu bar. The following screen displays.

- Click the + Add icon. In the Add Server popup window, enter or select the following values.

| Field | Value |
| --- | --- |
| Name | Primary-Node-1 |
| Type | TCP |
| IP Address | 192.168.95.2 |
| Port | 1234 |
| Routing Instance | provider-org-Control-VR |

- Click OK.

- Configure three additional ADC servers by repeating Steps 1f and 1g three more times. Use the following values.

| Name | Type | IP Address | Port |
| --- | --- | --- | --- |
| Primary-Node-2 | TCP | 192.168.95.3 | 1234 |
| Backup-Node-1 | TCP | 192.168.96.2 | 1234 |
| Backup-Node-2 | TCP | 192.168.96.3 | 1234 |

- Configure an ADC server pool named Primary-Cluster-Pool.

- Select ADC > Local Load Balancer > Server Pools in the left menu bar.

- Click the + Add icon. The Add Server Pool window displays. Enter or select the following values.

| Field | Value |
| --- | --- |
| Name | Primary-Cluster-Pool |
| Type | TCP |
| Member (Table of fields): Name | Primary-Node-1. Click the Add icon to add the ADC server to the pool. |
| Member (Table of fields): Name | Primary-Node-2. Click the Add icon to add the ADC server to the pool. |

- Click OK.

- Configure an ADC server pool named Backup-Cluster-Pool by repeating Steps 3a through 3c using the following values.

| Field | Value |
| --- | --- |
| Name | Backup-Cluster-Pool |
| Type | TCP |
| Member (Table of fields): Name | Backup-Node-1. Click the Add icon to add the ADC server to the pool. |
| Member (Table of fields): Name | Backup-Node-2. Click the Add icon to add the ADC server to the pool. |

- Configure an ADC Service.

- Select ADC > Local Load Balancer > Virtual Service in the left menu bar.

- Click the + Add icon. The Add Virtual Service popup window displays. Select the General tab, and then enter the following values.

| Field | Value |
| --- | --- |
| Name | C1-ADC-Service |
| Type | Any |
| IP Address | 10.0.0.0 |
| Port | 1234 |
| Default Pool | Primary-Cluster-Pool |
| Backup Pool | Backup-Cluster-Pool |

- Click the Attributes tab. The following window displays. In the Routing Instance field, select provider-org-Control-VR.

- Click OK.

Configure Controller 2

- Perform Steps 1 through 4 in the procedure for configuring Controller 1. These steps configure the ADC on Controller 2 to have the identical ADC servers and ADC server pools as Controller 1.

- Configure an ADC service on Controller 2 by repeating Step 5 in the procedure for configuring Controller 1. In Step 5b, enter the following values.

| Field | Value |
| --- | --- |
| Name | C2-ADC-Service |
| Type | Any |
| IP Address | 10.0.0.4 |
| Port | 1234 |
| Default Pool | Primary-Cluster-Pool |
| Backup Pool | Backup-Cluster-Pool |

Configure the VOS Branch Device

On the VOS device, configure collectors, a collector group, and a LEF profile for the Tenant1 organization:

- Configure the first of two collectors. This collector sends logs to the ADC service on Controller 1.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the VOS device on which you want to configure the LEF profile in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Objects & Connectors > Connectors > Reporting > Logging Export Function in the left menu bar.

- Select the provider or tenant organization in the Organization field in the horizontal menu bar.

- Select the Collectors tab in the horizontal menu bar, and then click the + Add icon. In the Add Collector popup window, enter the following values.

| Field | Value |
| --- | --- |
| Name | Collector-To-C1 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.0 |
| Destination (Group of Fields): Port | 1234 |
| Routing Instance | provider-org-Control-VR |

- Click OK.

- Repeat Steps 1a through 1f to configure the second of two collectors. This collector uses ADC service C2-ADC-Service on Controller 2 as its destination. Enter the following values in Step 1e.

| Field | Value |
| --- | --- |
| Name | Collector-To-C2 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.4 |
| Destination (Group of Fields): Port | 1234 |
| Routing Instance | provider-org-Control-VR |

- Configure a collector group.

- Select the Collector Groups tab in the horizontal menu bar.

- Click the + Add icon. In the Add Collector Group popup window, enter the following values.

| Field | Value |
| --- | --- |
| Name | Default-Collector-Group |
| Collectors (Table of fields): + Add icon | Collector-To-C1 |
| Collectors (Table of fields): + Add icon | Collector-To-C2 |

- Click OK.

- Configure a LEF profile.

- Select the Profiles tab in the horizontal menu bar.

- Click the + Add icon to add a LEF profile. In the Add Profile popup window, enter the following values.

| Field | Value |
| --- | --- |
| Name | Default-Logging-Profile |
| Collector Group | Default-Collector-Group |

- Click OK.

Verify the Configuration

To verify the configuration of the Controller nodes and the VOS branch device for accessing the active–backup cluster, display the configuration from the CLI.

On Controller 1, check the configuration of the servers, server pools, and ADC service. Note that the servers and server pools configurations are identical for Controller 1 and Controller 2.

    admin@Controller1$ cli
    admin@Controller1> configure
    admin@Controller1% show orgs org-services provider-org adc
    lb {
        servers {
            Primary-Node-1 {
                type any;
                ip-address 192.168.95.2;
                port 1234;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Primary-Node-2 {
                type any;
                ip-address 192.168.95.3;
                port 1234;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Backup-Node-1 {
                type any;
                ip-address 192.168.96.2;
                port 1234;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Backup-Node-2 {
                type any;
                ip-address 192.168.96.3;
                port 1234;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
        }
        server-pools {
            Primary-Cluster-Pool {
                type any;
                member Primary-Node-1;
                member Primary-Node-2;
            }
            Backup-Cluster-Pool {
                type any;
                member Backup-Node-1;
                member Backup-Node-2;
            }
        }
        virtual-services {
            C1-ADC-Service {
                type any;
                address 10.0.0.0;
                port 1234;
                default-pool Primary-Cluster-Pool;
                default-backup-pool Backup-Cluster-Pool;
                fallback-to-active enabled;
                routing-instance provider-org-Control-VR;
            }
        }
    }

On Controller 2, check the configuration of the ADC service:

    virtual-services {
        C2-ADC-Service {
            type any;
            address 10.0.0.4;
            port 1234;
            default-pool Primary-Cluster-Pool;
            default-backup-pool Backup-Cluster-Pool;
            fallback-to-active enabled;
            routing-instance provider-org-Control-VR;
        }
    }

On the VOS device, check the LEF profile configuration for Tenant1:

    admin@Branch1$ cli
    admin@Branch1> configure
    admin@Branch1% show orgs org-services Tenant1 lef
    collectors {
        collector Collector-To-C1 {
            destination-address 10.0.0.0;
            destination-port 1234;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
        collector Collector-To-C2 {
            destination-address 10.0.0.4;
            destination-port 1234;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
    }
    collector-groups {
        collector-group Default-Collector-Group {
            collectors [ Collector-To-C1 Collector-To-C2 ];
        }
    }
    profiles {
        Default-Logging-Profile {
            collector-group Default-Collector-Group;
        }
    }
    default-profile Default-Logging-Profile;
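If you save the LEF output to a file, a short script can cross-check it instead of reading it by eye. The Python sketch below is one possible approach, not a Versa tool: `parse_config` is a hypothetical helper that handles only the brace-and-semicolon layout shown above, and it keeps a single value per key, so repeated statements (such as the `member` lines in the ADC output) would need extra handling. The embedded sample is a shortened copy of the LEF output.

```python
SAMPLE = """
collectors {
    collector Collector-To-C1 {
        destination-address 10.0.0.0;
        destination-port 1234;
        transport TCP;
    }
    collector Collector-To-C2 {
        destination-address 10.0.0.4;
        destination-port 1234;
        transport TCP;
    }
}
collector-groups {
    collector-group Default-Collector-Group {
        collectors [ Collector-To-C1 Collector-To-C2 ];
    }
}
profiles {
    Default-Logging-Profile {
        collector-group Default-Collector-Group;
    }
}
default-profile Default-Logging-Profile;
"""


def parse_config(text):
    """Parse brace-delimited output into nested dicts (one value per key)."""
    root = {}
    stack = [root]
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith("{"):
            # "name {" opens a nested block under the current one.
            child = {}
            stack[-1][line[:-1].strip()] = child
            stack.append(child)
        elif line == "}":
            stack.pop()
        else:
            # "key value;" is a leaf; "[ a b ]" becomes a list.
            key, _, value = line.rstrip(";").strip().partition(" ")
            value = value.strip()
            if value.startswith("[") and value.endswith("]"):
                value = value[1:-1].split()
            stack[-1][key] = value
    return root


cfg = parse_config(SAMPLE)

# Every collector named in the group must be defined under "collectors".
defined = {name.split()[1] for name in cfg["collectors"]}
group = cfg["collector-groups"]["collector-group Default-Collector-Group"]["collectors"]
missing = [name for name in group if name not in defined]

# The default profile must exist and reference the collector group.
profile_group = cfg["profiles"][cfg["default-profile"]]["collector-group"]

print(missing, profile_group)
```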

Active–Active Clusters

For Releases 21.2.1 and later.

For active–active clusters, during normal operation, VOS devices send logs to both the primary and secondary clusters. If one of the clusters goes down, the other cluster continues to receive and process logs.

To create active–active clusters, you configure LEF with collector group lists so that logs are sent to the two clusters at the same time. For the ADC on each Controller node, you configure two ADC services: one for the primary cluster and a second for the secondary cluster. The collector group list uses the two ADC services to send logs to both clusters. Each cluster receives and processes logs and maintains an up-to-date copy of the logs. Under normal conditions, both clusters have the same data. If either cluster fails, the other cluster continues to operate.

For Versa Analytics clusters, you can still view Analytics data on the operational cluster from the Analytics tab in the Director GUI by selecting a node from the operational cluster. When the cluster that is down comes back up, it does not have any data for the failure period, and logs and datastores are not synchronized automatically between the clusters. To determine which log data is present on a cluster, you connect to each cluster and examine the logs. You can use the cluster synchronization tool to copy logs between clusters and to restore the copied logs.

The following illustration shows a simplified topology to illustrate the components of active–active clusters. The topology contains one branch VOS device with one organization, named Tenant1. Tenant1 is configured with a collector group list that contains two collector groups, Collector Group 1 and Collector Group 2, and each collector group contains two collectors. Collector Group 1 forwards logs destined for the primary cluster to the first ADC service on the Controller node. Collector Group 2 forwards logs destined for the secondary cluster to the second ADC service. The collector group list sends logs to the active collector in each of the two collector groups, so both the primary and secondary Analytics clusters receive copies of logs for Tenant1 from the branch VOS device. For simplicity, the illustration does not show a second Controller node.
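The fan-out behavior of a collector group list can be modeled with a small Python sketch. The collector names are hypothetical, and the rule that the active collector is the first reachable one in its group is an assumption made for illustration; the source text says only that logs go to the active collector in each group.

```python
def active_collector(members, reachable):
    """Return the first reachable collector in a group, or None if none is."""
    for collector in members:
        if collector in reachable:
            return collector
    return None


def fan_out(group_list, reachable):
    """Map each collector group to the collector that receives a copy of the log."""
    return {
        group: active_collector(members, reachable)
        for group, members in group_list.items()
    }


# Each group holds one collector per Controller node (hypothetical names).
group_list = {
    "Collector Group 1": ["primary-via-C1", "primary-via-C2"],
    "Collector Group 2": ["secondary-via-C1", "secondary-via-C2"],
}

all_up = {"primary-via-C1", "primary-via-C2", "secondary-via-C1", "secondary-via-C2"}
normal = fan_out(group_list, all_up)

# If Controller 1 is unreachable, each group falls back to its Controller 2 path.
c1_down = fan_out(group_list, {"primary-via-C2", "secondary-via-C2"})

print(normal)
print(c1_down)
```

In both cases each group yields one destination, so both clusters still receive a copy of every log.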

Configure Active–Active Clusters

For Releases 21.2.1 and later.

Before configuring active–active clusters, you must perform initial configuration on all clusters you are using in the configuration. For more information, see Set Up Analytics in Perform Initial Software Configuration.

To configure a primary and secondary cluster in active–active mode, you do the following:

- Ensure the primary and secondary clusters are configured to accept logs.

- Configure two ADC services on Controller 1.

- Configure two ADC services on Controller 2.

- Configure LEF profiles for organizations on VOS devices.

- Associate the LEF profiles with services or traffic monitoring policy for the organizations on the VOS device.

To ensure the primary and secondary clusters are configured to accept logs:

- If the primary or secondary cluster is a third-party cluster, configure as recommended by the third-party vendor.

- If the primary or secondary cluster is a Versa Analytics cluster, configure the cluster as described in Set Up Analytics in Perform Initial Software Configuration. Ensure that the driver hosts (Analytics log collector nodes) for the cluster are listed in the Director GUI; for more information, see Configure SMTP in Perform Initial Software Configuration. If you plan to use a non-default TCP port number (that is, not TCP port 1234) to connect from an ADC service to the nodes in an Analytics cluster, you must modify the local collectors on the nodes to use the non-default port. For more information, see Modify or Add a Local Collector in Configure Log Collectors and Log Exporter Rules.

To configure the two ADC services on Controller 1:

- Ensure that the ADC is enabled for the provider organization on the Controller node.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select Controller 1 in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar. The following screen displays.

- If the ADC menu item does not display in the left menu bar, the ADC is not enabled for the provider organization. To enable the ADC, see Enable an ADC in Configure an Application Delivery Controller.


- Configure an ADC server for each node in the primary and secondary Analytics clusters. For more information, see Configure ADC Servers in Configure an Application Delivery Controller.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the Controller node on which you want to configure the ADC in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar.

- Select ADC > Local Load Balancer > Server in the left menu bar.

- Click the + Add icon. In the Add Server popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the ADC server. |
| Type | Select the protocol to use for the connection between the ADC and the receiving node: Any, TCP, or UDP. |
| IP Address | Enter the IP address of the receiving node. For Analytics log collector nodes, this is normally the southbound IP address. |
| Port | Enter the port number on which the receiving node listens for connections. |
| Routing Instance | Select the routing instance. |

- Click OK.

- Repeat Steps 2f and 2g for the remaining nodes in the primary and secondary clusters.


- Configure an ADC server pool for the ADC servers in the primary cluster.

- Select ADC > Local Load Balancer > Server Pools in the left menu bar.

- Click the + Add icon. In the Add Server Pool popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the server pool. |
| Type | Select the protocol to use for the connection: Any, TCP, or UDP. |
| Member (Table) | Select the servers to add to the ADC server pool. |
| Member: Name | Select the ADC server. |
| Member: Add icon | Click the Add icon to add the ADC server to the pool. The Member table displays the new member. |

To add ADC servers to the Member table, in the Name field, select the ADC server, and then click the Add icon.

- Click OK.

- Repeat Steps 3a through 3c to configure an ADC server pool for the nodes in the secondary cluster.

- Configure an ADC service to map connections to the primary cluster. Use the pool you configured in Step 3 as the default pool for this service. When configuring an ADC service for use by multiple tenant organizations, you normally configure the service with a routing instance from the provider organization. For more information, see Configure ADC Virtual Services in Configure an Application Delivery Controller.

- Select Services > ADC > Local Load Balancer > Virtual Service in the left menu bar.

- Click the + Add icon. The Add Virtual Service window displays. Select the General tab and then enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the ADC service. |
| Type | Select the protocol to use for the connection between the ADC service and the VOS devices: Any, TCP, or UDP. |
| IP Address | Enter the IP address of the ADC service. |
| Port | Enter the port number of the ADC service. |
| Default Pool | Select the default server pool to which to distribute new connections. |
| Fallback to Active | Click so that when the default pool comes back up, all the connections from the backup pool gradually switch back to the default pool. |

- Select the Attributes tab. The following window displays. In the Routing Instance field, select a provider organization routing instance.

- Click OK.

- Repeat Steps 5a through 5d to configure a second ADC service that maps connections to the secondary cluster. In Step 5b, use the server pool you configured in Step 4 as the default pool and use a unique ADC service tuple.

To configure the ADC on Controller 2, configure two ADC services as described for Controller 1. Make sure that you use different ADC service tuples.
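The requirement that every ADC service use a different tuple can be checked mechanically. The following is a minimal Python sketch, not a Versa tool: the `AdcService` record, the `find_duplicate_tuples` function, and the service names are hypothetical, and the sketch assumes that a tuple is the combination of IP address, protocol type, and port.

```python
from collections import defaultdict
from typing import NamedTuple


class AdcService(NamedTuple):
    """Hypothetical record for one ADC service (not a Versa API object)."""
    name: str
    ip_address: str
    protocol: str  # "Any", "TCP", or "UDP"
    port: int


def find_duplicate_tuples(services):
    """Return {tuple: [service names]} for every tuple used more than once."""
    by_tuple = defaultdict(list)
    for svc in services:
        by_tuple[(svc.ip_address, svc.protocol, svc.port)].append(svc.name)
    return {t: names for t, names in by_tuple.items() if len(names) > 1}


# Illustrative values: two services on each of two Controller nodes.
services = [
    AdcService("svc-primary-c1", "10.0.0.0", "Any", 1235),
    AdcService("svc-secondary-c1", "10.0.0.0", "Any", 1236),
    AdcService("svc-primary-c2", "10.0.0.4", "Any", 1235),
    AdcService("svc-secondary-c2", "10.0.0.4", "Any", 1236),
]

duplicates = find_duplicate_tuples(services)
print(duplicates or "all ADC service tuples are unique")
```

An empty result means that each of the four services can be addressed unambiguously by a LEF collector.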

To configure a LEF profile for an organization on a VOS device:

- Configure the first of four collectors with destinations corresponding to the four ADC services you configured in the previous steps. In Step 1e, below, the IP Address, Transport, and Port field values must match the ADC service tuple for the first ADC service you configured on Controller 1—the service that maps connections to the primary cluster over Controller 1.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select a VOS device in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Objects & Connectors > Connectors > Reporting > Logging Export Function in the left menu bar.

- Select the organization from the Organization field in the horizontal menu bar.

- Select the Collectors tab from the horizontal menu bar and then click the + Add icon. In the Add Collector popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the collector. |
| Transport | Select the protocol used by the ADC service tuple: Any, TCP, or UDP. |
| Destination (Group of Fields): IP Address | Enter the IP address of the ADC service tuple. |
| Destination (Group of Fields): Port | Enter the port of the ADC service tuple. |
| Routing Instance | Select the routing instance for the collector. This should match the routing instance of the ADC service. |

- Click OK.


- Configure the second of four collectors by repeating Steps 1a through 1f. In Step 1e, use the ADC service tuple of the first ADC service you configured on Controller 2. This is the ADC service that maps connections to the primary cluster over Controller 2.

- Configure the third of four collectors by repeating Steps 1a through 1f. In Step 1e, use the ADC service tuple of the second ADC service you configured on Controller 1. This is the ADC service that maps connections to the secondary cluster over Controller 1.

- Configure the fourth of four collectors by repeating Steps 1a through 1f. In Step 1e, use the ADC service tuple of the second ADC service you configured on Controller 2. This is the ADC service that maps connections to the secondary cluster over Controller 2.

- Configure the first of two collector groups. This collector group contains the collectors you configured in Steps 1 and 2—the collectors sending logs to the primary cluster. For more information, see Configure a Collector Group in Configure Log Export Functionality.

- Select the Collector Group tab in the horizontal menu bar.

- Click the + Add icon. In the Add Collector Group popup window, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name | Enter a name for the collector group. |
| Collectors (Table): + Add icon | Click the + Add icon, and then select a collector to add it to the collector group. |

- Click OK.

- Configure the second of two collector groups by repeating Steps 5a through 5c. This collector group contains the collectors you configured in Steps 3 and 4—the collectors sending logs to the secondary Analytics cluster.

- Configure a LEF profile and collector group list. You define the collector group list within the LEF profile configuration. For more information, see Configure a Collector Group List and Configure a Log Export Profile in Configure Log Export Functionality.

You configure a LEF profile and collector group list from the CLI by issuing the following command.

set orgs org-services organization-name lef profiles logging-profile-name collector-group-list collector-group-1 [collector-group-2]

When configuring services or the traffic monitoring policy for the organization on the VOS device, associate the LEF profile with the service or traffic monitoring policy.
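When many organizations or devices need the same LEF profile, the CLI command can be assembled programmatically. The following Python sketch only builds the command string from the syntax shown above. The helper name `build_lef_profile_command` and the sample names are assumptions, and the sketch does not connect to a device or validate the command.

```python
def build_lef_profile_command(organization, profile, collector_groups):
    """Build the `set` command that assigns a collector group list to a LEF profile.

    The word order follows the syntax shown in this article. The function is a
    hypothetical helper, not part of any Versa tooling.
    """
    # The syntax shows one required group and one optional group.
    if not 1 <= len(collector_groups) <= 2:
        raise ValueError("expected one or two collector groups")
    words = [
        "set", "orgs", "org-services", organization,
        "lef", "profiles", profile,
        "collector-group-list", *collector_groups,
    ]
    return " ".join(words)


# Sample names for illustration only.
print(build_lef_profile_command(
    "Tenant1",
    "Default-Logging-Profile",
    ["Primary-Collector-Group", "Secondary-Collector-Group"],
))
```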

Example: Configure Active–Active Analytics Clusters

The following example demonstrates how to configure Versa Analytics clusters in active–active mode. The example configures two Controller nodes and a sample tenant on a branch VOS device to access two Analytics clusters in active–active mode.

This example has the following components, which are illustrated in the figure below:

- One branch VOS device, with one organization (tenant), named Tenant1.

- LEF profile named Default-Logging-Profile.

- The LEF profile contains a collector group list.

- The collector group list contains two collector groups named Primary-Collector-Group and Secondary-Collector-Group.

- Primary-Collector-Group contains two collectors named To-Primary-Over-C1 and To-Primary-Over-C2.

- Secondary-Collector-Group contains two collectors named To-Secondary-Over-C1 and To-Secondary-Over-C2.

- The destination of To-Primary-Over-C1 is the ADC service tuple of C1-Primary-Service.

- The destination of To-Secondary-Over-C1 is the ADC service tuple of C1-Secondary-Service.

- The destination of To-Primary-Over-C2 is the ADC service tuple of C2-Primary-Service.

- The destination of To-Secondary-Over-C2 is the ADC service tuple of C2-Secondary-Service.

- Two ADC services named C1-Primary-Service and C1-Secondary-Service on Controller 1.

- The example configures two ADC services on Controller 1. The first ADC service maps connections to the primary cluster, and the second ADC service maps connections to the secondary cluster. The first ADC service uses ADC service tuple 10.0.0.0 Any port 1235, and the second uses ADC service tuple 10.0.0.0 Any port 1236.

- Two ADC services named C2-Primary-Service and C2-Secondary-Service on Controller 2.

- The example configures two ADC services on Controller 2. These ADC services also map connections to the primary and secondary clusters, but the first uses ADC service tuple 10.0.0.4 Any port 1235 and the second uses ADC service tuple 10.0.0.4 Any port 1236.

- Primary Analytics cluster.

- The primary cluster contains two Analytics log collector nodes with IP addresses 192.168.95.2 and 192.168.95.3.

- The first node contains a local collector at 192.168.95.2 TCP port 1235.

- The second node contains a local collector at 192.168.95.3 TCP port 1235.

- Secondary Analytics cluster.

- The secondary cluster contains two Analytics log collector nodes with IP addresses 192.168.96.2 and 192.168.96.3.

- The first node contains a local collector at 192.168.96.2 TCP port 1236.

- The second node contains a local collector at 192.168.96.3 TCP port 1236.
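The relationships among these components can be easier to follow as data. The following Python sketch models the example topology and traces each collector group to the local collectors that it ultimately reaches. The names and addresses come from the list above. The dictionary layout and the `nodes_reached_by_group` function are assumptions made for illustration.

```python
# Local collectors (address, port) in each Analytics cluster.
CLUSTER_NODES = {
    "primary": [("192.168.95.2", 1235), ("192.168.95.3", 1235)],
    "secondary": [("192.168.96.2", 1236), ("192.168.96.3", 1236)],
}

# ADC services on the two Controller nodes and the cluster each one maps to.
ADC_SERVICES = {
    "C1-Primary-Service": {"tuple": ("10.0.0.0", "Any", 1235), "cluster": "primary"},
    "C1-Secondary-Service": {"tuple": ("10.0.0.0", "Any", 1236), "cluster": "secondary"},
    "C2-Primary-Service": {"tuple": ("10.0.0.4", "Any", 1235), "cluster": "primary"},
    "C2-Secondary-Service": {"tuple": ("10.0.0.4", "Any", 1236), "cluster": "secondary"},
}

# Each LEF collector sends logs to the tuple of one ADC service.
COLLECTOR_DESTINATIONS = {
    "To-Primary-Over-C1": "C1-Primary-Service",
    "To-Primary-Over-C2": "C2-Primary-Service",
    "To-Secondary-Over-C1": "C1-Secondary-Service",
    "To-Secondary-Over-C2": "C2-Secondary-Service",
}

COLLECTOR_GROUPS = {
    "Primary-Collector-Group": ["To-Primary-Over-C1", "To-Primary-Over-C2"],
    "Secondary-Collector-Group": ["To-Secondary-Over-C1", "To-Secondary-Over-C2"],
}


def nodes_reached_by_group(group_name):
    """Return the sorted local collectors reachable through a collector group."""
    nodes = set()
    for collector in COLLECTOR_GROUPS[group_name]:
        service = ADC_SERVICES[COLLECTOR_DESTINATIONS[collector]]
        nodes.update(CLUSTER_NODES[service["cluster"]])
    return sorted(nodes)


for group in COLLECTOR_GROUPS:
    print(group, "->", nodes_reached_by_group(group))
```

In this model, each collector group reaches both nodes of exactly one cluster over either Controller node.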

To configure the primary and secondary clusters in active–active mode, you modify local collectors on the two clusters, configure two Controller nodes (Controller 1 and Controller 2), and configure a tenant (Tenant1) on the branch VOS device.

Modify Local Collectors

- Modify the local collector on the first Analytics node in the primary cluster.

- In Director view, select the Analytics tab in the top menu bar.

- For Releases 22.1.1 and later, hover over the Analytics tab and then select a connector to any node in the Analytics cluster containing the node.

- For Releases 21.2 and earlier, in the horizontal menu bar, select a connector to any node in the Analytics cluster containing the node.

- Select Administration > Configurations > Log Collector Exporter in the left menu bar.

- In the Driver Hosts field, select the Analytics node that contains the local collector.

- Select the Local Collector tab in the main pane.

- Click the name of the local collector. In the window that displays, modify the existing values in the Address and Port fields so they match those from the table below.

| Field | Value |
| --- | --- |
| Address | 192.168.95.2 |
| Port | 1235 |

- Click Save.


- Repeat Steps 1a through 1g to modify the collector on the second Analytics node in the primary cluster using the following values.

| Field | Value |
| --- | --- |
| Address | 192.168.95.3 |
| Port | 1235 |

- Repeat Steps 1a through 1g to modify the collector on the first Analytics node in the secondary cluster using the following values.

| Field | Value |
| --- | --- |
| Address | 192.168.96.2 |
| Port | 1236 |

- Repeat Steps 1a through 1g to modify the collector on the second Analytics node in the secondary cluster using the following values.

| Field | Value |
| --- | --- |
| Address | 192.168.96.3 |
| Port | 1236 |

Configure Controller 1

- Configure the first ADC server:

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the Controller node on which you want to configure the ADC in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services in the left menu bar.

- Select the provider organization in the Organization field in the horizontal menu bar.

- Select ADC > Local Load Balancer > Server in the left menu bar.

- Click the + Add icon. In the Add Server popup window, enter or select the following values.

| Field | Value |
| --- | --- |
| Name | Primary-Node-1 |
| Type | TCP |
| IP Address | 192.168.95.2 |
| Port | 1235 |
| Routing Instance | provider-org-Control-VR |

- Click OK.


- Configure three additional ADC servers by repeating Steps 1f and 1g three more times. Use the following values.

| Name | Type | IP Address | Port |
| --- | --- | --- | --- |
| Primary-Node-2 | TCP | 192.168.95.3 | 1235 |
| Secondary-Node-1 | TCP | 192.168.96.2 | 1236 |
| Secondary-Node-2 | TCP | 192.168.96.3 | 1236 |

- Configure an ADC server pool named Primary-Cluster-Pool.

- Select ADC > Local Load Balancer > Server Pools in the left menu bar.

- Click the Add icon. The Add Server Pool window displays. Enter or select the following values.

| Field | Value |
| --- | --- |
| Name | Primary-Cluster-Pool |
| Type | TCP |
| Member (Table): Name | Primary-Node-1. Click the Add icon to add the ADC server to the pool. |
| Member (Table): Name | Primary-Node-2. Click the Add icon to add the ADC server to the pool. |

- Click OK.

- Configure an ADC server pool named Secondary-Cluster-Pool by repeating Steps 3a through 3c using the following values.

| Field | Value |
| --- | --- |
| Name | Secondary-Cluster-Pool |
| Type | TCP |
| Member (Table): Name | Secondary-Node-1. Click the Add icon to add the ADC server to the pool. |
| Member (Table): Name | Secondary-Node-2. Click the Add icon to add the ADC server to the pool. |

- Configure an ADC service for the primary cluster.

- Select ADC > Local Load Balancer > Virtual Service in the left menu bar.

- Click the Add icon. The Add Virtual Service window displays. Select the General tab, and then enter the following values.

| Field | Value |
| --- | --- |
| Name | C1-Primary-Service |
| Type | Any |
| IP Address | 10.0.0.0 |
| Port | 1235 |
| Default Pool | Primary-Cluster-Pool |

- Click the Attributes tab. The following window displays. In the Routing Instance field, select routing instance provider-org-Control-VR.

- Click OK.

- Configure the ADC Service for the secondary cluster by repeating Steps 5a through 5c. Use the following values in Step 5b.

| Field | Value |
| --- | --- |
| Name | C1-Secondary-Service |
| Type | Any |
| IP Address | 10.0.0.0 |
| Port | 1236 |
| Default Pool | Secondary-Cluster-Pool |

Configure Controller 2

- Perform Steps 1 through 4 in the procedure for configuring Controller 1. These steps configure the ADC on Controller 2 to have identical ADC servers and ADC server pools as Controller 1.

- Configure the first of two ADC services on Controller 2 by repeating Step 5 in the procedure for configuring Controller 1. In Step 5b, enter the following values.

| Field | Value |
| --- | --- |
| Name | C2-Primary-Service |
| Type | Any |
| IP Address | 10.0.0.4 |
| Port | 1235 |
| Default Pool | Primary-Cluster-Pool |

- Configure the second of two ADC services on Controller 2 by repeating Step 5 in the procedure for configuring Controller 1. In Step 5b, enter the following values.

| Field | Value |
| --- | --- |
| Name | C2-Secondary-Service |
| Type | Any |
| IP Address | 10.0.0.4 |
| Port | 1236 |
| Default Pool | Secondary-Cluster-Pool |

Configure the VOS Branch Device

On the VOS device, configure four collectors, two collector groups, and a LEF profile for the Tenant1 organization:

- Configure the first of four collectors. This collector uses the ADC service C1-Primary-Service on Controller 1.

- In Director view:

- Select the Administration tab in the top menu bar.

- Select Appliances in the left menu bar.

- Select the VOS device on which you want to configure the LEF profile in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- In the left menu bar, select Objects & Connectors > Connectors > Reporting > Logging Export Function.

- In the Organization drop-down menu, select the provider or tenant organization.

- Select the Collectors tab in the horizontal menu bar, and then click the + Add icon. The Add Collector window displays. Enter the following values.

| Field | Value |
| --- | --- |
| Name | To-Primary-Over-C1 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.0 |
| Destination (Group of Fields): Port | 1235 |
| Routing Instance | provider-org-Control-VR |

- Click OK.


- Configure the second of four collectors. This collector uses ADC service C2-Primary-Service on Controller 2 as its destination. Enter the following values in Step 1e.

| Field | Value |
| --- | --- |
| Name | To-Primary-Over-C2 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.4 |
| Destination (Group of Fields): Port | 1235 |
| Routing Instance | provider-org-Control-VR |

- Configure the third of four collectors. This collector uses ADC service C1-Secondary-Service on Controller 1 as its destination. Enter the following values in Step 1e.

| Field | Value |
| --- | --- |
| Name | To-Secondary-Over-C1 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.0 |
| Destination (Group of Fields): Port | 1236 |
| Routing Instance | provider-org-Control-VR |

- Configure the fourth of four collectors. This collector uses the ADC service C2-Secondary-Service on Controller 2 as its destination. Enter the following values in Step 1e.

| Field | Value |
| --- | --- |
| Name | To-Secondary-Over-C2 |
| Transport | TCP |
| Destination (Group of Fields): IP Address | 10.0.0.4 |
| Destination (Group of Fields): Port | 1236 |
| Routing Instance | provider-org-Control-VR |

- Configure the first of two collector groups. This collector group forwards logs to the primary cluster.

- Select the Collector Groups tab in the horizontal menu bar.

- Click the + Add icon. In the Add Collector Group popup, enter or select the following values.

| Field | Value |
| --- | --- |
| Name | Primary-Collector-Group |
| Collectors (Table): + Add icon | To-Primary-Over-C1 |
| Collectors (Table): + Add icon | To-Primary-Over-C2 |

- Click OK.

- Configure the second of two collector groups by repeating Step 5. This collector group forwards logs to the secondary cluster. In Step 5b, enter the following values.

| Field | Value |
| --- | --- |
| Name | Secondary-Collector-Group |
| Collectors (Table): + Add icon | To-Secondary-Over-C1 |
| Collectors (Table): + Add icon | To-Secondary-Over-C2 |

- Configure a LEF profile named Default-Logging-Profile and a collector group list. You define the collector group list within the LEF profile configuration.

- Access the shell on the VOS device. See Access the CLI on a VOS Device.

- Issue the following commands:

    admin@Branch1$ cli
    admin@Branch1> configure
    admin@Branch1% set orgs org-services Tenant1 lef profiles \
    > Default-Logging-Profile collector-group-list Primary-Collector-Group Secondary-Collector-Group
    admin@Branch1% commit

Verify the Configuration

To verify the configuration of the Controller nodes and the VOS branch device for accessing the active–active cluster, display the configuration from the CLI.

On Controller 1, check the configuration of the servers, server pools, and ADC services. Note that the server and server pool configuration is identical for Controller 1 and Controller 2.

    admin@Controller1$ cli
    admin@Controller1> configure
    admin@Controller1% show orgs org-services provider-org adc
    lb {
        servers {
            Primary-Node-1 {
                type any;
                ip-address 192.168.95.2;
                port 1235;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Primary-Node-2 {
                type any;
                ip-address 192.168.95.3;
                port 1235;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Secondary-Node-1 {
                type any;
                ip-address 192.168.96.2;
                port 1236;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
            Secondary-Node-2 {
                type any;
                ip-address 192.168.96.3;
                port 1236;
                state enabled;
                routing-instance provider-org-Control-VR;
            }
        }
        server-pools {
            Primary-Cluster-Pool {
                type any;
                member Primary-Node-1;
                member Primary-Node-2;
            }
            Secondary-Cluster-Pool {
                type any;
                member Secondary-Node-1;
                member Secondary-Node-2;
            }
        }
        virtual-services {
            C1-Primary-Service {
                type any;
                address 10.0.0.0;
                port 1235;
                default-pool Primary-Cluster-Pool;
                routing-instance provider-org-Control-VR;
            }
            C1-Secondary-Service {
                type any;
                address 10.0.0.0;
                port 1236;
                default-pool Secondary-Cluster-Pool;
                routing-instance provider-org-Control-VR;
            }
        }
    }

On Controller 2, check the configuration of the ADC services:

    virtual-services {
        C2-Primary-Service {
            type any;
            address 10.0.0.4;
            port 1235;
            default-pool Primary-Cluster-Pool;
            routing-instance provider-org-Control-VR;
        }
        C2-Secondary-Service {
            type any;
            address 10.0.0.4;
            port 1236;
            default-pool Secondary-Cluster-Pool;
            routing-instance provider-org-Control-VR;
        }
    }

On the VOS device, check the LEF profile configuration for Tenant1:

    admin@Branch1$ cli
    admin@Branch1> configure
    admin@Branch1% show orgs org-services Tenant1 lef
    collectors {
        collector To-Primary-Over-C1 {
            destination-address 10.0.0.0;
            destination-port 1235;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
        collector To-Primary-Over-C2 {
            destination-address 10.0.0.4;
            destination-port 1235;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
        collector To-Secondary-Over-C1 {
            destination-address 10.0.0.0;
            destination-port 1236;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
        collector To-Secondary-Over-C2 {
            destination-address 10.0.0.4;
            destination-port 1236;
            routing-instance provider-org-Control-VR;
            transport TCP;
            template Default-LEF-Template;
        }
    }
    collector-groups {
        collector-group Primary-Collector-Group {
            collectors [ To-Primary-Over-C1 To-Primary-Over-C2 ];
        }
        collector-group Secondary-Collector-Group {
            collectors [ To-Secondary-Over-C1 To-Secondary-Over-C2 ];
        }
    }
    profiles {
        profile Default-Logging-Profile {
            collector-group-list [ Primary-Collector-Group Secondary-Collector-Group ];
        }
    }
    default-profile Default-Logging-Profile;
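If you capture this output to a file, you can cross-check the references in it with a short script. The following Python sketch is a simplified reader for the brace-delimited layout shown above, not a Versa utility: `parse_config`, `as_list`, and `check_references` are hypothetical helpers, and the sample text is an abridged copy of the LEF output. It assumes one statement per line and no repeated keys within a block.

```python
def parse_config(text):
    """Parse brace-delimited `show` output into nested dicts (simplified)."""
    root = {}
    stack = [root]
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith("{"):       # start of a named block
            child = {}
            stack[-1][line[:-1].strip()] = child
            stack.append(child)
        elif line == "}":            # end of the current block
            stack.pop()
        else:                        # "key value;" statement
            key, _, value = line.rstrip(";").partition(" ")
            stack[-1][key] = value.strip()
    return root


def as_list(value):
    """Turn "[ A B ]" into ["A", "B"]."""
    return value.strip("[] ").split()


def check_references(cfg):
    """Return a list of problems: groups or profiles that name unknown objects."""
    collectors = {key.split()[1] for key in cfg["collectors"]}
    groups = {key.split()[1]: as_list(body["collectors"])
              for key, body in cfg["collector-groups"].items()}
    problems = []
    for group, members in groups.items():
        for member in members:
            if member not in collectors:
                problems.append(f"{group}: unknown collector {member}")
    for key, profile in cfg["profiles"].items():
        for group in as_list(profile["collector-group-list"]):
            if group not in groups:
                problems.append(f"{key.split()[1]}: unknown collector group {group}")
    return problems


SAMPLE = """
collectors {
    collector To-Primary-Over-C1 {
        destination-address 10.0.0.0;
        destination-port 1235;
    }
    collector To-Primary-Over-C2 {
        destination-address 10.0.0.4;
        destination-port 1235;
    }
    collector To-Secondary-Over-C1 {
        destination-address 10.0.0.0;
        destination-port 1236;
    }
    collector To-Secondary-Over-C2 {
        destination-address 10.0.0.4;
        destination-port 1236;
    }
}
collector-groups {
    collector-group Primary-Collector-Group {
        collectors [ To-Primary-Over-C1 To-Primary-Over-C2 ];
    }
    collector-group Secondary-Collector-Group {
        collectors [ To-Secondary-Over-C1 To-Secondary-Over-C2 ];
    }
}
profiles {
    profile Default-Logging-Profile {
        collector-group-list [ Primary-Collector-Group Secondary-Collector-Group ];
    }
}
"""

config = parse_config(SAMPLE)
print(check_references(config) or "all references resolve")
```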

Analytics Cluster Groups

For Releases 22.1.1 and later.

You can configure workflows to automatically generate Controller and branch templates that are compatible with active–active and active–backup Analytics clusters. In the workflow for Controller nodes, you configure an Analytics cluster group, which consists of the primary and secondary Analytics clusters. Next, when you are configuring organization workflows, you select the Analytics cluster group. When you commit the templates to the Controller and branch devices, the appropriate ADC services and LEF profiles are automatically configured on the devices.

Note that ADC pools and ADC servers for the ADC services are not included in the templates generated by the workflow. You must configure them as described earlier in this article.

When you commit templates generated from these workflows, the following items are configured:

- On Controller nodes

- Active–backup configuration (as described in Active–Backup Clusters, above):

- One ADC service is configured on each of two Controller nodes.

- Active–active configuration (as described in Active–Active Clusters, above):

- Two ADC services are configured on each of two Controller nodes.


- On VOS branch devices

- Active–backup configuration (as described in Active–Backup Clusters, above):

- Two LEF collectors, whose destination is the appropriate ADC service on the Controller nodes, are configured.

- One LEF collector group that is associated with the two LEF collectors is configured.

- A default LEF profile that is associated with the LEF collector group is configured.

- Active–active configuration (as described in Active–Active Clusters, above):

- Four LEF collectors, whose destination is the appropriate ADC service on the Controller nodes, are configured.

- Two LEF collector groups, the first group associated with the first two collectors and the second group associated with the last two collectors, are configured.

- A default LEF profile that includes a collector group list for the two collector groups is configured.

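The object counts listed above can be summarized in a small lookup, which may help when you audit a device after committing the templates. The following Python sketch restates those counts. The `GENERATED_OBJECTS` table and the `expected_totals` function are illustrative names, and the sketch assumes the two Controller nodes described in this article.

```python
# Objects that the workflow-generated templates configure, per redundancy mode.
GENERATED_OBJECTS = {
    "active-backup": {
        "adc_services_per_controller": 1,
        "lef_collectors": 2,
        "lef_collector_groups": 1,
    },
    "active-active": {
        "adc_services_per_controller": 2,
        "lef_collectors": 4,
        "lef_collector_groups": 2,
    },
}


def expected_totals(mode, controller_count=2):
    """Return the total number of objects expected for a redundancy mode."""
    per_mode = GENERATED_OBJECTS[mode]
    return {
        "adc_services": per_mode["adc_services_per_controller"] * controller_count,
        "lef_collectors": per_mode["lef_collectors"],
        "lef_collector_groups": per_mode["lef_collector_groups"],
    }


for mode in GENERATED_OBJECTS:
    print(mode, expected_totals(mode))
```

With two Controller nodes, the number of LEF collectors equals the total number of ADC services in both modes, which is consistent with each collector targeting one ADC service.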

Configure Analytics Cluster Groups

For Releases 22.1.1 and later.

You configure Analytics cluster groups in a Controller workflow and then select a cluster group when you configure an organization workflow. You can also select an Analytics cluster group in a post-staging template.

Before configuring an Analytics cluster group, you must configure an Analytics connector to each Analytics cluster. For more information, see Configure an Analytics Connector in Perform Initial Software Configuration.

To configure an Analytics cluster group:

- In Director view, select the Workflows tab in the top menu bar.

- Select Infrastructure > Controllers in the horizontal menu bar.

- Select a Controller node in the main pane. The following screen displays.

- Click Next. The Configure Analytics Cluster screen displays.

- Click Analytics Group to activate the Analytics Group drop-down menu.

- Click the drop-down menu, and then select + Add New. The Add Analytics Group popup window displays. Enter information for the following fields.

| Field | Description |
| --- | --- |
| Group Name | Enter a name for the Analytics cluster group. |
| Redundancy Mode | This field is automatically filled in based on the entries in the Analytics Cluster table. The default is Active–Active. If you add a secondary (backup) cluster, the value in this field changes to Active–Standby. |
| Analytics Cluster (Table) | Add Analytics clusters to the Analytics cluster group by selecting a type and cluster name. |
| Analytics Cluster (Table): Type | Select a cluster type. Primary: For active–active configurations, mark all clusters as Primary. Secondary: For active–backup clusters, mark the backup cluster as Secondary. |
| Analytics Cluster (Table): Cluster Name | Select the name of a cluster. Only clusters with configured connectors display in the list. |
| Analytics Cluster (Table): Add icon | Click to add the Analytics cluster to the Analytics cluster group. |

- Click OK to temporarily save the Analytics cluster definition and return to the Configure Analytics Cluster screen.

- Click Skip to Review.

- Click Save to permanently save the Analytics cluster definition.
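The way the Redundancy Mode field follows from the cluster types can be expressed as a simple rule. The following Python sketch mirrors the behavior described for the Add Analytics Group window. The `redundancy_mode` function is a hypothetical illustration, not Director code, and it writes the mode names with plain hyphens.

```python
def redundancy_mode(cluster_types):
    """Derive the Redundancy Mode value from the Type of each cluster entry.

    The default is Active-Active. Adding any Secondary (backup) cluster
    changes the value to Active-Standby.
    """
    types = [t.strip().lower() for t in cluster_types]
    unknown = set(types) - {"primary", "secondary"}
    if unknown:
        raise ValueError(f"unknown cluster type(s): {sorted(unknown)}")
    return "Active-Standby" if "secondary" in types else "Active-Active"


print(redundancy_mode(["Primary", "Primary"]))    # both clusters marked Primary
print(redundancy_mode(["Primary", "Secondary"]))  # one backup cluster
```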

To associate an Analytics cluster group with an organization workflow:

- In Director view, select the Workflows tab in the top menu bar.

- Select Infrastructure > Organizations in the horizontal menu bar.

- Select an organization in the main pane. The following screen displays.

- Click Next four times. The Configure Analytics Cluster screen displays.

- In the Available column, select an Analytics cluster group. The Analytics cluster group transfers to the Selected column.

- Click Skip to Review.

- Click Save.

To associate an Analytics cluster group with a post-staging template:

- In Director view, select the Workflows tab in the top menu bar.

- Select Template > Templates in the horizontal menu bar. The following screen displays.

- Select a post-staging template in the main pane. The following screen displays.

- In the Analytics Cluster field, select an Analytics cluster group.

- Click Skip to Review.

- Click Save.

Supported Software Information

Releases 20.2 and later support all content described in this article, except:

- For Releases 20.2.3 and later, you can run the cluster synchronization tool.

- For Releases 21.2.1 and later, you can configure primary and secondary clusters in active–active mode.

- For Releases 22.1.1 and later, you can configure Analytics cluster groups.
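The release requirements above can be encoded as a lookup for use in pre-deployment checks. The following Python sketch compares dotted release numbers as integer tuples. The feature labels and the `supports` function are illustrative, and the sketch assumes purely numeric release strings such as 21.2.1.

```python
# Minimum release for each feature, taken from the list above.
FEATURE_MIN_RELEASE = {
    "cluster synchronization tool": (20, 2, 3),
    "active-active clusters": (21, 2, 1),
    "Analytics cluster groups": (22, 1, 1),
}


def parse_release(text):
    """Convert a release string such as "21.2.1" to a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def supports(feature, release):
    """Return True if the given release meets the feature's minimum release."""
    return parse_release(release) >= FEATURE_MIN_RELEASE[feature]


print(supports("active-active clusters", "21.2.1"))
print(supports("Analytics cluster groups", "21.2.1"))
```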