Versa Analytics Configuration Concepts

For supported software information, click here.

Versa Analytics is an analytics platform that provides visibility into Versa Operating System™ (VOS™) devices. VOS devices send data in the form of log messages (simply called logs) to a set of nodes, called an Analytics cluster, dedicated to processing analytics data. The Analytics cluster analyzes this data to create near real-time graphs and tables that display on the Versa Director node and to create reports about usage patterns, trends, security events, and alerts. You can print the reports, tables, and graphs and export them to your local system. You can use the reports and dashboards to perform trending, correlation, and prediction about the VOS devices and network performance.

This article provides an overview of the concepts you need to understand in order to configure VOS devices, Versa Controller nodes, Analytics clusters, and Versa Director nodes to enable Versa Analytics functionality.

Analytics Data Flow

It is useful to know how logs and Analytics data flow through the Versa components mentioned above. VOS devices generate logs and send them to a node in an Analytics cluster, with a Versa Controller node acting as an intermediary between the VOS device and the Analytics node. The node in the cluster that receives the logs extracts the data from them and inserts the data into datastores within the Analytics cluster. The Director node contacts the Analytics cluster, which returns analyzed log data to populate the dashboards and to generate reports displayed in the Director GUI. For more information on this process, see Versa Director Nodes and Analytics Clusters.

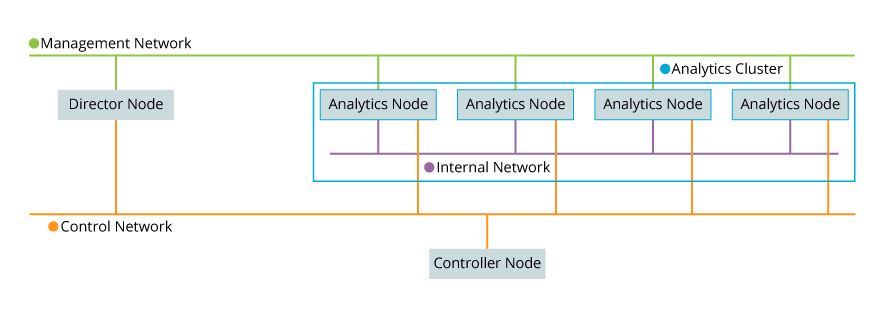

The following figure illustrates the Analytics data flow using a simplified topology consisting of a branch VOS device, a Versa Controller node, four Analytics nodes in an Analytics cluster, and a Versa Director node.

Analytics Clusters

During initial installation and software configuration, you configure one or more Analytics nodes and place them into an Analytics cluster. Nodes are assigned one of three personalities: analytics, search, or forwarder, which are referred to as analytics-type, search-type, and forwarder-type Analytics nodes. Also during initial installation, you connect the interfaces of each Analytics node to three networks: management, control, and internal. This section describes the networks that the Analytics nodes connect to and the functions performed by the nodes in an Analytics cluster. For information about configuring Analytics clusters, see Set Up Analytics in Perform Initial Software Configuration.

For Releases 22.1.1 and later, you can configure multiple Analytics clusters and then combine their information for Analytics reports using an Analytics aggregator node. For information about aggregator nodes, see Configure Analytics Aggregator Nodes.

Analytics Network Connections

Analytics nodes typically use separate interfaces to connect to management, control, and internal networks.

The following figure shows a sample topology containing an Analytics cluster with four Analytics nodes connected to three networks.

- Management network—The northbound interface on each Analytics node typically connects to the management network. A Versa Director node uses this network to communicate with Analytics nodes when, for example, it displays an Analytics graph or creates a report. The IP address of the interface, usually interface eth0, on the Analytics node connecting to this network, is called the RPC address during initial configuration.

- Control network—The southbound interface on each Analytics node typically connects to the control network. This network is used to send logs from Controller nodes to Analytics nodes. The IP address of the interface, usually interface eth1, connecting to this network is called the collector address during initial configuration.

- Internal network—This network is internal to the Analytics cluster. It is used by cluster nodes for high-speed information exchange, for example, to synchronize databases within the cluster. The IP address of the interface, usually interface eth2, connecting to this network is called the listen address during initial configuration.
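The three address roles described above can be summarized in a small sketch. This is a hypothetical model for illustration only, not a Versa data structure: the class name and method are invented, the interface names follow the "usually" defaults in the text, and the internal address 172.16.0.21 is an assumed value.

```python
from dataclasses import dataclass


# Hypothetical model of one Analytics node's three network addresses.
@dataclass(frozen=True)
class AnalyticsNodeAddresses:
    rpc_address: str        # management network (usually eth0); used by Director
    collector_address: str  # control network (usually eth1); receives logs
    listen_address: str     # internal network (usually eth2); cluster sync

    def address_for(self, network: str) -> str:
        """Return the address this node uses on the named network."""
        mapping = {
            "management": self.rpc_address,
            "control": self.collector_address,
            "internal": self.listen_address,
        }
        return mapping[network]


# 10.40.24.141 and 192.168.1.21 appear in this article; 172.16.0.21 is assumed.
node = AnalyticsNodeAddresses("10.40.24.141", "192.168.1.21", "172.16.0.21")
print(node.address_for("control"))  # prints 192.168.1.21
```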

If your topology includes log forwarder nodes, connect the nodes to the control network. The log forwarder nodes receive logs from Controller nodes and process and forward the logs to analytics-type and search-type nodes, which are described in the next section. The following example shows a sample Analytics cluster topology with eight nodes, four of which are log forwarder nodes.

Analytics Cluster Node Types

An Analytics cluster comprises Analytics nodes that run different combinations of software components. These combinations come in three types: analytics, search, and forwarder.

- Search-type Analytics nodes—Search-type Analytics nodes store flow logs, event data, and packet captures and are used when searching logs in the Versa Director GUI. These nodes run the search platform and contain a datastore. It is recommended that you configure at least two search-type Analytics nodes within a cluster for high availability. Search-type Analytics nodes use the internal network to communicate with other nodes in the cluster. You can configure search-type Analytics nodes to perform log collection.

Initial software configuration: set node personality to search in the clustersetup.conf file; see Perform Initial Software Configuration.

- Analytics-type Analytics nodes—Analytics-type Analytics nodes store historical data and are used when creating many of the reports and graphs in the Versa Director GUI. These nodes run a noSQL database. You normally configure two analytics-type Analytics nodes within a cluster for high availability. Analytics-type Analytics nodes use the internal network to communicate with other nodes in the cluster. You can configure analytics-type Analytics nodes as Analytics log collector nodes.

Initial software configuration: set node personality to analytics in the clustersetup.conf file.

- Forwarder-type Analytics nodes—Forwarder-type Analytics nodes do not run the noSQL database or search engine and should be configured as Analytics log collector nodes. These nodes accept incoming connections containing logs from VOS devices, called log export functionality (LEF) connections. Forwarder-type Analytics nodes are useful when an Analytics cluster has a large number of incoming LEF connections. You can send all LEF connections to forwarder-type Analytics nodes and have these nodes process all incoming logs for an Analytics cluster. Alternatively, you can configure some VOS devices to send logs to the forwarder-type Analytics nodes and other VOS devices to send logs to the analytics-type and search-type nodes. Both options help distribute incoming LEF connections over multiple Analytics nodes within the cluster and avoid overwhelming the search-type and analytics-type Analytics nodes.

Initial software configuration: set node personality to forwarder in the clustersetup.conf file.

- Analytics log collector nodes—Analytics nodes that run the log collector exporter program to receive logs from VOS devices are referred to as Analytics log collector nodes. Any of the search-type, analytics-type, forwarder-type, or lightweight-type nodes can be configured as Analytics log collector nodes.

Initial software configuration: for any nodes with personality search, analytics, or forwarder, assign values to the collector_address and collector_port fields in the clustersetup.conf file.

- Lightweight-type Analytics nodes—Lightweight-type Analytics nodes accept incoming connections containing logs from VOS devices and stream the logs to third-party collectors. They do not perform database operations and do not forward logs to other Analytics nodes in the cluster.

- Aggregator-type Analytics nodes—(For Releases 22.1.1 and later.) To provide a consolidated view for tenant-level reports, you can configure an Analytics aggregator node, which can consolidate and aggregate data from multiple standard Analytics clusters. Aggregator nodes generate reports by pulling data individually from standard Analytics clusters, called child clusters. For more information, see Configure Analytics Aggregator Nodes. Note that in Versa Concerto, you can create an aggregator cluster; see Add an Aggregator Cluster in Install Concerto.

The following table summarizes the components of various Analytics node types. The log collector exporter program and Versa Analytics driver are described later in this document.

| Node Type | noSQL Database | Search Engine | Log Collector Exporter | Versa Analytics Driver |
|---|---|---|---|---|
| Analytics | Yes | No | Optional | Optional |
| Search | No | Yes | Optional | Optional |
| Forwarder | No | No | Yes | Yes |
| Lightweight | No | No | Yes | No |
| Aggregator | No | No | No | No |

See Log Collector Exporter for a description of the log collector exporter and Versa Analytics Driver for a description of the Versa Analytics driver program.

Search-type and analytics-type Analytics nodes are sometimes collectively referred to as datastore nodes.
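As a rough illustration, the component table above can be encoded as a lookup. The dictionary keys and function names here are hypothetical and chosen for this sketch; only the Yes/No/Optional values come from the table.

```python
# Components per node type, transcribed from the table in this section.
# True = always runs, False = never runs, None = optional.
COMPONENTS = {
    "analytics":   {"nosql_db": True,  "search_engine": False, "exporter": None,  "driver": None},
    "search":      {"nosql_db": False, "search_engine": True,  "exporter": None,  "driver": None},
    "forwarder":   {"nosql_db": False, "search_engine": False, "exporter": True,  "driver": True},
    "lightweight": {"nosql_db": False, "search_engine": False, "exporter": True,  "driver": False},
    "aggregator":  {"nosql_db": False, "search_engine": False, "exporter": False, "driver": False},
}


def can_collect_logs(node_type: str) -> bool:
    """A node can act as a log collector if the exporter is present or optional."""
    return COMPONENTS[node_type]["exporter"] is not False


def is_datastore_node(node_type: str) -> bool:
    """Datastore nodes are those that run the noSQL database or the search engine."""
    c = COMPONENTS[node_type]
    return bool(c["nosql_db"] or c["search_engine"])


print([t for t in COMPONENTS if is_datastore_node(t)])  # prints ['analytics', 'search']
```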

Disk Storage on Analytics Clusters

Analytics clusters can require a large amount of disk storage for analytics-type and search-type nodes. This space is required for database compaction on analytics-type nodes and for log surges on search-type nodes. The compaction process occurs when records are deleted from the database. Counterintuitively, deleting database records increases storage use for a temporary period, which can last up to several days. Log surges occur when there is a temporary increase in log-producing activity on VOS devices.

To accommodate compaction on analytics-type nodes, the total disk on these nodes should be at least twice that of actual database storage. If disk usage reaches 50%, disk cleanup should be performed or more storage added.
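The sizing rule above is simple arithmetic, sketched below. The function names are hypothetical, and the sketch assumes the 50% threshold applies to used space as a fraction of total disk.

```python
def min_total_disk_gb(database_gb: float) -> float:
    """Total disk should be at least twice the actual database storage."""
    return 2 * database_gb


def needs_attention(used_gb: float, total_gb: float, threshold: float = 0.5) -> bool:
    """True when usage reaches the threshold, so cleanup or more storage is due."""
    return used_gb / total_gb >= threshold


print(min_total_disk_gb(400))     # prints 800
print(needs_attention(400, 800))  # prints True
print(needs_attention(300, 800))  # prints False
```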

To decrease disk usage for Analytics clusters, you can:

- Redirect heavy flow for certain log types from VOS devices to the Versa Advanced Logging Service (ALS). See Configure the Versa Advanced Logging Service.

- (For Releases 22.1.1 and later.) Distribute logs among multiple, independent Analytics clusters, and then use an aggregator node to recombine data for reports. See Configure Analytics Aggregator Nodes.

- Apply strategies described in the article Versa Analytics Scaling Recommendations.

For information about monitoring Analytics resources, including disk usage, see Monitor Analytics Clusters. For information about increasing existing disk storage, see Expand Disk Storage on Analytics Nodes.

Versa Director Nodes and Analytics Clusters

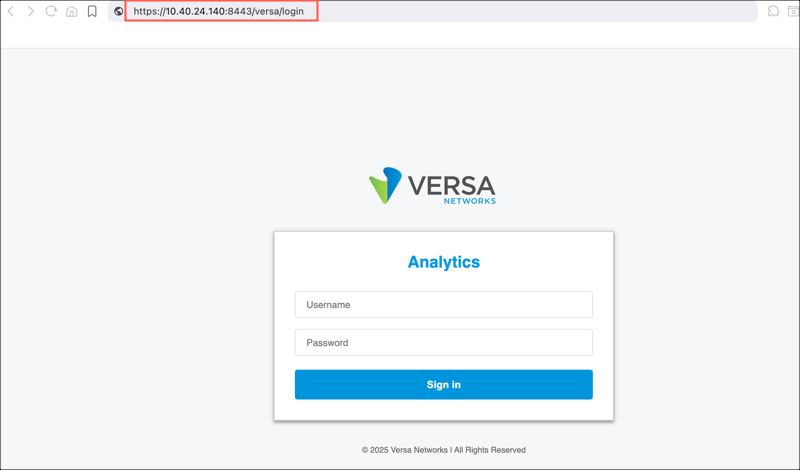

During initial software configuration, you create a connector to each Analytics cluster from the Director node. The Director node uses the connector to communicate with a program called the Analytics application. This application, a copy of which runs on each Analytics node, is accessible through port 8443. You typically access this application using the following methods:

- Analytics tab in Versa Director—When you select the Analytics tab from the GUI running on the Director node, you are accessing the Analytics application.

- API calls to the Analytics node—You can use API calls to interact with the Analytics application.

- Directly from a browser window—You can access the Analytics application through port 8443 in a browser, for example https://10.40.24.140:8443 where 10.40.24.140 is the IP address of an Analytics node. When accessed using this method, the application displays its own GUI. This GUI provides the same functionality as that displayed by the Analytics tab in Director.

In the Analytics application, you identify which nodes in the Analytics cluster are analytics-type or search-type and which nodes are Analytics log collector nodes. Analytics log collector nodes are called driver hosts in the Analytics application. The Analytics application uses the node type and driver host information to access the Analytics datastores and when configuring driver hosts. After you have created the connector, identified the node types and driver hosts, and completed initial software configuration for the headend, you can continue configuring Analytics from the Director node GUI. For information about creating a connector, see Configure an Analytics Connector in Perform Initial Software Configuration. For information about specifying analytics-type, search-type, and driver host node IP addresses, see Configure Node Types in the Analytics Application in Perform Initial Software Configuration.

The Administration > Connectors > Analytics Cluster screen displays the existing cluster connectors. In the following example, the Director node can connect to Analytics cluster Corp-Inline-Cluster-1 using either node Analytics1 at IP address 10.40.24.140 or Analytics2 at IP address 10.40.24.141.

Analytics nodes display in the drop-down list in the Analytics tab. You can select any node within a cluster to access the cluster. If the selected node becomes unavailable, you can select a different node to access the cluster. To interact with a different Analytics cluster, select a node from that cluster. In the examples below, the Corp-Inline-Cluster-1 cluster is configured with the Analytics nodes from the previous example, and these nodes display in the drop-down list on Analytics tab screens.

For Release 22.1.1 and later, hover over the Analytics tab and then select a node.

For Releases 21.2 and earlier, select a node from the drop-down list in the horizontal menu bar.

Some parameters of Analytics clusters, such as database (datastore) retention time, you configure for the Analytics cluster as a whole. Other parameters, specifically those of driver hosts (Analytics log collector nodes), you configure for a specific node within the cluster. Screens that configure driver hosts contain a drop-down list allowing you to choose a specific node. Analytics nodes are included in this menu if they are listed on the Analytics > Administration > Configurations > Settings > Main Settings screen; see Configure Node Types in the Analytics Application in Perform Initial Software Configuration.

The following example shows the Log Archives screen, which allows you to manage log archives on a driver host. The driver host at 192.168.1.21 is selected, and this screen displays information about log archives on node 192.168.1.21 and allows you to delete, restore, and view details about archives on that node. In this example, IP address 192.168.1.21 is the southbound network interface for the Analytics node at 10.40.24.141.

You can run the Analytics application directly from a browser using port 8443 on an Analytics node. In the following example, the user accesses port 8443 for the Analytics node at IP address 10.40.24.140, and the Analytics application displays a login screen.

You log in using your account name and password. The functionality of this GUI is the same as that of the Analytics tab in the Director GUI. During initial software configuration, you set the password for the default Analytics application account, admin, in the ui_password field in the clustersetup.conf file; see Perform Initial Software Configuration.

Log Processing

During initial software configuration, you configure some or all of the analytics-type, search-type, and forwarder-type Analytics nodes to process incoming logs. Any node that processes incoming logs is referred to as an Analytics log collector node, regardless of its node type. You can choose to configure only forwarder-type Analytics nodes as Analytics log collector nodes so that the resources on the other node types can be dedicated to data storage and processing.

Any Analytics node configured with at least one local collector is an Analytics log collector node. Local collectors listen for incoming TCP connections at a given IP address and port. This port is where the Analytics node receives incoming LEF connections from VOS devices. To configure a node as an Analytics log collector node during initial software configuration, you assign values to the collector_address and collector_port variables for that node in the clustersetup.conf configuration file. Then, you run the van_cluster_installer.py cluster deployment script. The van_cluster_installer.py script configures a local collector for each node that lists a collector_address value and collector_port value in the clustersetup.conf file. Note that the van_cluster_installer.py script does not configure the Analytics log collector nodes as driver hosts in Versa Director. You cannot administer an Analytics log collector node from the Director GUI until you add the node to the driver host list. For information on driver hosts, see Versa Director Nodes and Analytics Clusters.
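The rule that a node becomes an Analytics log collector node when both collector fields are set can be sketched as follows. The dictionary layout, node names, and internal logic are assumptions made for illustration; they do not reproduce the real clustersetup.conf format or the deployment script. Only the field names collector_address and collector_port and the personality values come from this article.

```python
# Hypothetical in-memory view of per-node settings; not the real file format.
cluster_nodes = {
    "analytics-1": {"personality": "analytics"},
    "search-1": {"personality": "search"},
    "forwarder-1": {
        "personality": "forwarder",
        "collector_address": "192.168.95.2",
        "collector_port": 1234,
    },
}


def log_collector_nodes(nodes: dict) -> list:
    """Return names of nodes that list both a collector address and port."""
    return sorted(
        name
        for name, cfg in nodes.items()
        if cfg.get("collector_address") and cfg.get("collector_port")
    )


print(log_collector_nodes(cluster_nodes))  # prints ['forwarder-1']
```

In this sketch only the forwarder-type node collects logs, which matches the option of dedicating the other node types to data storage and processing.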

All Analytics log collector nodes run two programs to accept and process logs: the log collector exporter and the Versa Analytics driver. This section discusses the function and configuration of these two programs. This section also discusses the archival of processed logs.

Log Collector Exporter

The log collector exporter program accepts incoming connections from VOS devices and reads the incoming logs from those connections. It removes the IPFIX overhead from the logs and stores them in a log storage directory on the Analytics log collector node on which the log was received. You can also configure the log collector exporter to forward a copy of the logs, in syslog format, to a remote system.

For the log collector exporter to accept and store incoming logs and export them to a remote system, you configure the following:

- Local collector—A local collector consists of a unique combination of an IP address of an interface on that node and TCP port number. A local collector also includes the name of a log storage directory. Adding a local collector configures the log collector exporter to listen for incoming logs at the given TCP port at the specified IP address, and then to store the logs in the given log storage directory. If the log collector exporter stores the logs in the directory /var/tmp/log, the Versa Analytics driver processes the logs. For information about the Versa Analytics driver, see Versa Analytics Driver.

For example, the following Director screen displays values for a local collector named collector1. This local collector configuration has the log collector exporter listen at TCP port 1234 at IP address 192.168.95.2 and store logs received in the local /var/tmp/log directory. 192.168.95.2 is the IP address of the network interface that connects to the control network.

- Remote collector—You can configure the log collector exporter to send a copy of logs, in syslog format, to another system. To do so, you create a remote collector. A remote collector destination is a system configured to accept logs in syslog format. A remote collector can stream logs over TCP, UDP, or TLS transport. You use a remote collector, for example, when streaming logs to a Kafka cluster.

- Remote collector group—You can configure the log collector exporter to treat a set of remote collectors as a group. You export logs to a remote collector group instead of an individual remote collector. The log collector exporter sends logs to the first reachable remote collector destination in the remote collector group, starting with an indicated primary remote collector.

- Remote collector group list—You can configure the log collector exporter to export logs to multiple remote collector groups. You do this by creating a remote collector group list. The log collector exporter streams logs to the active remote collector of each remote collector group in the list.

- Log exporter rules—To send logs to a remote collector, you create log exporter rules. These rules associate a local collector to a single remote collector, remote collector group, or remote collector group list, so that the local collector automatically streams incoming logs to the associated remote system or systems.

For information about configuring local collectors, remote collectors, remote collector groups, remote collector group lists, and exporter rules, see Configure Log Collectors and Log Exporter Rules.
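The remote collector group behavior described above (send to the first reachable destination, starting with the indicated primary) can be sketched as below. This is an illustrative interpretation, not the exporter's implementation: the function name is hypothetical, reachability is supplied by the caller, and the sketch assumes that after the primary, the remaining collectors are tried in their configured order.

```python
from typing import Callable, Optional, Sequence


def pick_remote_collector(
    group: Sequence[str],
    primary: str,
    is_reachable: Callable[[str], bool],
) -> Optional[str]:
    """Return the first reachable collector, trying the primary first."""
    ordered = [primary] + [c for c in group if c != primary]
    for collector in ordered:
        if is_reachable(collector):
            return collector
    return None  # no destination in the group is reachable


group = ["rc1", "rc2", "rc3"]
print(pick_remote_collector(group, "rc2", lambda c: True))         # prints rc2
print(pick_remote_collector(group, "rc2", lambda c: c != "rc2"))   # prints rc1
```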

Versa Analytics Driver

Any logs copied to the /var/tmp/log directory on an Analytics log collector node are processed by the Versa Analytics driver on that node. The driver performs extract, transform, and load (ETL) operations on the logs. Depending on the type of the logs, the driver sends log data to the analytics-type or search-type nodes, where it is inserted into the Analytics datastores so that it is available to web services. After the Versa Analytics driver has processed a log, it moves the log to a backup subdirectory.

The Versa Analytics driver processes only logs copied to /var/tmp/log. If you configure a local collector to store logs in a directory other than /var/tmp/log, the logs are not processed. This can be useful when you are required to collect logs but do not need log analysis.

After the Versa Analytics driver inserts the processed data as entries into the datastores within the cluster, the datastores store the data for a configurable period of time, called the retention time. When the retention time has expired, the data is removed from the datastore. You control the amount of data stored in the datastores by adjusting the retention time for specific types of entries. To reprocess data after it has been deleted from the datastores, you can restore logs from the archive into the /var/tmp/log directory. For information on configuring retention time, see Analytics Datastore Limits in Versa Analytics Scaling Recommendations.

Log Archival

Local collectors configured on Analytics log collector nodes store logs in a local log storage directory. The default configuration sets up the Linux cron utility to automatically archive logs in the /var/tmp/log backup subdirectories to the /var/tmp/archive directory. If you configure a local collector to store logs in a non-standard directory, one other than /var/tmp/log, the logs are not automatically archived. If required, you can create an additional cron job to archive the logs contained in the non-standard directory. Each Analytics log collector node contains its own log storage directories, log archive directories, and cron jobs. You configure log archival separately on each node. You can NFS-mount any of the directories containing archives to automatically store the archives on a remote system. For more information on managing log archives, see Manage Versa Analytics Log Archives.
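The directory rules from the Versa Analytics Driver and Log Archival sections can be condensed into one check: only logs stored in /var/tmp/log are processed by the driver and archived by the default cron configuration. The function below is a hypothetical helper written for this summary.

```python
from pathlib import PurePosixPath

# The only storage directory the driver processes, per this article.
STANDARD_LOG_DIR = PurePosixPath("/var/tmp/log")


def handling_for(storage_dir: str) -> dict:
    """Describe how logs in a local collector's storage directory are handled."""
    standard = PurePosixPath(storage_dir) == STANDARD_LOG_DIR
    return {
        "processed_by_driver": standard,  # ETL into the datastores
        "auto_archived": standard,        # default cron job applies
    }


print(handling_for("/var/tmp/log"))
print(handling_for("/var/tmp/collect-only"))  # hypothetical non-standard directory
```

For a non-standard directory both values are False, which corresponds to the collect-only case where you would add your own cron job if archiving is required.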

VOS Devices and Log Export Functionality

You can configure log export functionality (LEF) on VOS devices so that they can send logs to other systems, including Analytics nodes, syslog servers, and third-party Netflow collectors. The logs can be exported in an IPFIX template for Analytics nodes and Netflow collectors, or a syslog template for syslog servers. To export logs, you configure a LEF profile and then associate it with features and services. For information about configuring LEF profiles, see Configure Log Export Functionality. For information about associating LEF profiles with features and services, see Apply Log Export Functionality.

LEF Collectors

When you configure LEF, you define collectors for each provider and each tenant organization on a VOS device. The collector defines where to send the logs for an organization, specifying a destination IP address and a TCP or UDP port number. You can configure the collector destination to be one of the following:

- An ADC service tuple (IP address, protocol, and port number) used by an application delivery controller (ADC) on a Versa Controller node

- Third-party system that is configured as a Netflow collector

- Third-party system that is configured as a syslog server

- Local collector configured on an Analytics node

Application Delivery Controllers

On each VOS device, LEF establishes a TCP or UDP connection between the VOS device and the destination system to which it is transferring logs. There is a separate LEF connection for each LEF collector. If a branch VOS device supports many organizations, a large number of LEF connections may be created. If the destination for these LEF connections is a single Analytics node or a limited number of Analytics nodes, the result might be a bottleneck at the Analytics node: because the node performs many functions in addition to log processing, log processing might slow down. To avoid this bottleneck, you can configure an intermediary on a Versa Controller node called an application delivery controller (ADC) service. The ADC service defines an IP address, protocol (TCP or UDP), and port number combination, called an ADC service tuple. The ADC service listens for LEF connections on the ADC service tuple. The ADC then maps the connections, using a load-balancing algorithm, to an Analytics node or nodes or a third-party system. When you use this intermediary, you configure the ADC service as the destination of the LEF connections and the logs.

The following example shows the LEF collectors for three organizations (Tenant1, Tenant2, and Tenant3) on a branch VOS device. Each of the three LEF collectors uses an ADC service tuple of 10.10.10.1 TCP port 1234 as its destination. The LEF collectors form LEF connections to the ADC service listening at this ADC service tuple, and then the ADC service maps the LEF connections to specific log collector nodes in the Analytics cluster.
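The mapping step can be pictured with a short sketch. This article does not name the load-balancing algorithm, so the round-robin policy here is an assumption chosen for illustration, and the function and node names are hypothetical.

```python
from itertools import cycle
from typing import Callable, Dict, Sequence


def map_connections(
    connections: Sequence[str],
    servers: Sequence[str],
    is_up: Callable[[str], bool],
) -> Dict[str, str]:
    """Assign each LEF connection to an UP server in round-robin order."""
    up_servers = [s for s in servers if is_up(s)]
    if not up_servers:
        raise RuntimeError("no log collector node is UP")
    rotation = cycle(up_servers)
    return {conn: next(rotation) for conn in connections}


tenants = ["Tenant1", "Tenant2", "Tenant3"]
nodes = ["collector-node-1", "collector-node-2"]  # hypothetical node names
print(map_connections(tenants, nodes, lambda s: True))
```

With both nodes UP, Tenant1 and Tenant3 land on the first node and Tenant2 on the second; if one node is DOWN, all three connections map to the remaining node.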

The ADC service on the Controller node includes an ADC server for each Analytics log collector, and an ADC server monitor to check the UP or DOWN state of the log collector nodes. The monitor can use either the ICMP or TCP protocol to monitor the nodes.

If you configure an ADC monitor to use TCP, it creates a temporary connection to the local collector connection pool. By default, the monitor uses the IP address and port number of its associated ADC server to create these connections, and the local collector is set to accept a maximum of 512 connections. To avoid exceeding the maximum, it is recommended that you configure an additional (dummy) local collector using an alternate port number on each log collector node. Then, use the alternate port number when configuring the monitor. For information about adding a local collector, see Modify or Add a Local Collector in Configure Log Collectors and Log Exporter Rules. For information about configuring an ADC, see Configure an Application Delivery Controller.

The following diagram illustrates an ADC service with two ADC server monitors. ADC Monitor 1 is associated with ADC Server 1 and probes the dummy collector configured at 192.45.13.1, port 1235 on Log Collector Node 1. A second ADC monitor probes the health of Log Collector Node 2 using 192.45.13.2, port 1235.
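A TCP health probe of the kind described above amounts to opening and immediately closing a connection. The sketch below shows the general idea using Python's standard socket module; it is not the ADC monitor's implementation, and the function name and timeout are assumptions.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened (node is UP)."""
    try:
        # The connection is closed as soon as the with-block exits, so the
        # probe occupies a slot in the collector's connection pool only briefly.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example call, using the dummy collector address and port from the diagram:
#   tcp_probe("192.45.13.1", 1235)
```

Pointing the probe at the dummy collector's port (1235 in the example) keeps these temporary connections out of the pool that serves real LEF connections.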

Advanced Logging Service

You can forward Analytics logs to the Versa Advanced Logging Service (ALS) from an ADC service on a Controller node. For more information, see Configure the Versa Advanced Logging Service.

Collector Groups

You can configure LEF to treat a set of collectors as a group. Collector groups are configured per organization. The organization can be a tenant organization or a provider organization. When using a collector group, LEF still sends logs to a single destination, but it has multiple destinations to choose from. LEF checks the reachability of the destination for the first log collector in the collector group. If reachable, the VOS device sends the log to that destination. If not reachable, the VOS device checks the reachability of the next log collector's destination. It tries each log collector destination for the group in turn to determine where to send the log.

You can use collector groups to create redundancy in your topology. The example below shows three organizations (Tenant1, Tenant2, and Tenant3), each with two log collectors and a collector group. The first log collector for each collector group uses the ADC service tuple of 10.10.10.1 TCP port 1234 on Controller 1 as its destination. The second uses an ADC service tuple of 10.10.10.2 TCP port 1234 on Controller 2 as its destination. If one of the Controller nodes fails, the VOS device can continue sending logs using the other Controller node.

Collector Group Lists

For Releases 21.2.1 and later.

You can configure LEF to send logs to multiple log collector destinations. You do this by placing two or more collector groups in a collector group list. Collector group lists are defined as part of a LEF profile configuration. Logs are sent to the active collector of each of the collector groups in the list.
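The difference between a collector group (one destination chosen from several) and a collector group list (one destination per group) can be sketched as follows. The function names are hypothetical and reachability is supplied by the caller. The second collector in each group is an assumed value for illustration.

```python
from typing import Callable, List, Optional, Sequence


def active_collector(
    group: Sequence[str], is_reachable: Callable[[str], bool]
) -> Optional[str]:
    """Within one group, the active collector is the first reachable one."""
    return next((c for c in group if is_reachable(c)), None)


def destinations(
    group_list: Sequence[Sequence[str]], is_reachable: Callable[[str], bool]
) -> List[str]:
    """A group list sends each log to the active collector of every group."""
    picks = (active_collector(g, is_reachable) for g in group_list)
    return [c for c in picks if c is not None]


group1 = ["10.10.10.1:1234", "10.10.10.2:1234"]  # second entry is assumed
group2 = ["10.10.10.1:1235", "10.10.10.2:1235"]  # second entry is assumed
down = {"10.10.10.1:1234"}
print(destinations([group1, group2], lambda c: c not in down))
# prints ['10.10.10.2:1234', '10.10.10.1:1235']
```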

LEF Profiles

After configuring LEF collectors and LEF collector groups, you associate them with a LEF profile. A LEF profile configuration includes a LEF template indicating whether to export logs in IPFIX or syslog format and the names of LEF collectors and LEF collector groups. You can define a collector group list as part of the LEF profile configuration and you can assign one LEF profile to be the default for an organization. To apply log export functionality to a service or feature on a VOS device, you explicitly associate a LEF profile in the service or feature configuration except those features and services that automatically send logs to the default LEF profile. To send logs for specific types of traffic, such as specific applications, you associate the LEF profile with a traffic monitoring policy. The VOS device sends logs for the feature or service to the active collector of the LEF profile. For more information about configuring logging for specific features and services, see Apply Log Export Functionality.

Secondary Analytics Clusters

In addition to configuring a primary Analytics cluster, you can configure a secondary Analytics cluster acting either in active-backup or active-active mode with the primary Analytics cluster. In active-active mode, both clusters contain a current copy of logs and datastores, and you can switch to the secondary cluster at any time. For active-backup mode, you can switch to the secondary (backup) cluster only when the primary cluster goes down. You can configure the backup cluster to process logs into its datastore nodes or to only perform the log collection function.

For active-backup mode, you configure a primary and secondary (backup) cluster, and you configure an ADC service with a default pool and a backup pool of log collector nodes. The default pool contains the nodes in the primary cluster and the backup pool contains the nodes in the backup cluster. You configure the ADC service to map LEF connections to the backup cluster when all nodes in the primary cluster become unreachable. When the primary cluster becomes reachable again, the ADC service maps the connections back to the primary cluster.

The following diagram shows a simplified topology that has one branch VOS device with one organization, named Tenant1. The organization has one collector called log collector 1. Log collector 1 uses the ADC service tuple of an ADC service configured on Controller 1 as its destination. The ADC service on Controller 1 has a default pool containing the nodes in the primary Analytics cluster and a backup pool containing the nodes in the secondary Analytics cluster. The ADC service maps LEF connections to nodes in the default pool under normal operations. If all the nodes in the primary cluster fail, the ADC service maps LEF connections to the nodes in the secondary Analytics cluster instead.
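The default and backup pool behavior in active-backup mode reduces to a single decision, sketched below. The function and node names are hypothetical; the rule itself (use the backup pool only when every node in the default pool is unreachable, and return to the default pool when it recovers) comes from the description above.

```python
from typing import Callable, Sequence


def select_pool(
    default_pool: Sequence[str],
    backup_pool: Sequence[str],
    is_reachable: Callable[[str], bool],
) -> str:
    """Return which pool should receive LEF connections right now."""
    if any(is_reachable(node) for node in default_pool):
        return "default"
    return "backup"


primary = ["primary-node-1", "primary-node-2"]      # hypothetical names
secondary = ["secondary-node-1", "secondary-node-2"]

print(select_pool(primary, secondary, lambda n: n != "primary-node-1"))  # prints default
print(select_pool(primary, secondary, lambda n: n in secondary))         # prints backup
```

Because the decision is re-evaluated from current reachability, the sketch switches back to the default pool as soon as any primary node is reachable again.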


For active-active mode for Releases 21.2.1 and later, you configure a primary and secondary cluster, and you configure LEF to send logs to both clusters at the same time using a collector group list. The logs are then always synchronized on both clusters, and each cluster can process the logs and maintain an up-to-date copy of its datastores. Active-active mode does not use ADC backup pools.

The following diagram shows a simplified topology that has one branch VOS device with one organization, Tenant1. Tenant1 is configured with a collector group list. The group list contains two collector groups: collector group 1 and collector group 2. Each collector group contains two collectors. The first log collector in collector group 1 uses the ADC service tuple of 10.10.10.1 TCP port 1234 as its destination. The first log collector in collector group 2 uses an ADC service tuple of 10.10.10.1 TCP port 1235 as its destination. The ADC service listening at ADC service tuple 10.10.10.1 TCP port 1234 maps LEF connections to the primary Analytics cluster. A second ADC service, listening at 10.10.10.1 TCP port 1235, maps LEF connections to the secondary Analytics cluster. Because collector group lists send logs to the active collector in each of their collector groups, both the primary and secondary Analytics clusters receive copies of logs from Tenant1. For simplicity, the configuration for the second log collector in each group is not shown. You normally configure the second log collector in each collector group to send logs to a second Controller configured in a manner similar to the Controller displayed in the diagram.


For active-active mode for releases prior to Release 21.2.1, LEF for an organization can send logs to only one cluster at a time. In this case, you configure an ADC service as described for active-backup mode. The ADC service sends logs to the primary cluster during normal operations. If the clusters are Versa Analytics clusters, you can use cron scripts to copy logs from the primary Analytics cluster to the /var/tmp/log directory on the Analytics log collector nodes in the secondary cluster. The Versa Analytics driver on the secondary cluster automatically processes the logs into the datastores on the secondary cluster.

For both active-active and active-backup configurations using Versa Analytics clusters, you can select an Analytics node from a specific cluster in the Director GUI to view the Analytics information and administer the nodes on that cluster. For more information about configuring a secondary cluster, see Configure a Secondary Cluster for Log Collection.

Supported Software Information

Releases 20.2 and later support all content described in this article, except:

- Release 21.2.1 adds support for collector group lists.

- Release 22.1.1 adds support for Analytics aggregator nodes.

Additional Information

Apply Log Export Functionality

Configure a Secondary Cluster for Log Collection

Configure an Application Delivery Controller

Configure Analytics Aggregator Nodes

Configure Log Collectors and Log Exporter Rules

Configure Log Export Functionality

Configure the Versa Advanced Logging Service

Expand Disk Storage on Analytics Nodes

Manage Versa Analytics Log Archives

Perform Initial Software Configuration

SD-WAN Headend Design Guidelines

Versa Analytics Scaling Recommendations