Configure TCP Optimizations

For supported software information, click here.

TCP optimizations mitigate the effects of high latency and packet loss on the performance of TCP-based applications. The optimizations are based on a TCP proxy architecture, in which one or more Versa Operating System™ (VOS™) devices in a network path between a client and server split the TCP connection into two. One connection faces the client and the other one faces the server, and the VOS devices act as TCP proxies for each of the split network segments. The optimizations can be done in dual-ended mode, in which the TCP connection is split between two peer VOS devices in the network path that discover each other, or in single-ended mode, in which one or more VOS devices can independently have single-ended TCP optimization configured without being aware of or coordinating with the other VOS devices in the network path.

TCP Optimization Overview

TCP is a connection-oriented protocol with traffic management capabilities that focus on reliably delivering traffic to its destination. However, a number of factors can negatively impact the performance of TCP connections, including:

- Latency—Latency is the delay between the time that a device sends TCP packets and the time that it receives an acknowledgment that the packets were received. Latency increases as network distances increase. When latency is high, the sending device spends more time idling, which reduces throughput and translates to a poor application experience.

- TCP slow-start—When a device begins sending TCP traffic, TCP slow-start probes network bandwidth and gradually increases the amount of data transmitted until it finds the network's maximum carrying capacity. On a high-latency path, it can take time to determine the maximum carrying capacity and to react to changes in bandwidth. TCP optimization reduces the time to reach the maximum available bandwidth.

- Last-mile network problems—Last-mile networks can suffer from a number of problems, such as random packet loss and buffer bloat. For example, an LTE network may experience random packet loss because of poor signal coverage or access-point handovers. In addition, the large shaping buffers that are often present in last-mile networks can fill up, causing correspondingly large end-to-end latencies.

- Packet reordering—Packet reordering can cause a TCP sender to mistakenly detect packet loss and retransmit data needlessly.

- Burst losses—Shallow buffers and packet-transmission strategies on wireless networks can cause burst losses, in which the idle time between bursts of traffic can be misinterpreted as signs of congestion, causing unnecessary TCP backoffs.

- End-host TCP limitations—TCP performance is affected by limitations in the TCP implementation on the client and server. For example, the client or server may not enable the selective acknowledgment (SACK), window scaling, and timestamp options; or, the client or server may limit the size of TCP send and receive buffers to a value that is insufficient to accommodate the bandwidth delay product (BDP) of a high-latency network.

The TCP optimizations mitigate the effects of these problems. Because the optimizations are performed at the transport level, they work even if the end-to-end connection is encrypted.
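As a rough illustration of the bandwidth delay product mentioned in the list above, the following sketch computes the BDP of a path and checks whether a given buffer can cover it. The function names and the sample path (100 Mbps, 200 ms round-trip time) are illustrative assumptions, not values taken from VOS.

```python
def bdp_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bandwidth delay product: bytes that must be in flight to keep the path full."""
    return bandwidth_bps * rtt_seconds / 8


def buffer_covers_bdp(buffer_bytes: int, bandwidth_bps: float, rtt_seconds: float) -> bool:
    """True if the buffer is at least as large as the path's BDP."""
    return buffer_bytes >= bdp_bytes(bandwidth_bps, rtt_seconds)


# Hypothetical path: 100 Mbps with a 200 ms round-trip time.
needed = bdp_bytes(100e6, 0.200)
small_ok = buffer_covers_bdp(64 * 1024, 100e6, 0.200)         # 64 KB end-host buffer
large_ok = buffer_covers_bdp(16 * 1024 * 1024, 100e6, 0.200)  # 16 MB buffer
print(f"BDP: {needed:.0f} bytes, 64 KB sufficient: {small_ok}, 16 MB sufficient: {large_ok}")
```

On this assumed path the BDP is about 2.5 MB, so a 64 KB end-host buffer cannot keep the link full, while a 16 MB buffer can.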

The following TCP optimization features are available:

- Latency splitting—TCP proxies are enabled at one or more points in the path between the client and server, splitting the end-to-end latency into smaller segments. Each segment performs loss recovery independently, significantly improving both slow-start convergence and loss recovery times.

- Congestion control—TCP optimization implements bottleneck bandwidth and round-trip propagation time (BBR), a congestion-control algorithm based on the published IETF specification. BBR measures both the largest amount of recent bandwidth available to a connection and the connection's smallest recent round-trip delay. BBR then uses these metrics to control how fast it sends data and how much data it allows to be sent at any given time.

- Loss detection and recovery—Versa software implements the recent acknowledgment (RACK) loss-detection algorithm and the supplemental tail loss probe (TLP) algorithm based on the published IETF specification. RACK and TLP help solve the problems caused by lost retransmissions, tail drops, and packet reordering, which are problems that standard TCP loss-detection mechanisms do not mitigate well.

- High-performance TCP options—In environments where performance is limited by the end hosts instead of the network (for example, the end hosts have small buffers or do not support SACK, window scaling, timestamp options, and so forth), Versa software enables these options on their behalf.

- HTTP/HTTPS proxy—Versa HTTP/HTTPS proxy can leverage TCP optimizations to further improve performance in certain use cases, such as selective local internet breakout for applications normally accessed through a proxy and SSL decryption.
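The two measurements that BBR relies on, as described in the congestion control item above, can be sketched as a small path model. This is a simplified illustration only: it is not the VOS implementation, it omits the BBR state machine, and the class name, the sample-count window, and the gain values are assumptions made for the example.

```python
from collections import deque


class BbrPathModel:
    """Simplified BBR-style model: windowed max bandwidth and windowed min RTT."""

    def __init__(self, window: int = 10):
        # Keep only the most recent `window` samples of each metric.
        self.bw_samples = deque(maxlen=window)
        self.rtt_samples = deque(maxlen=window)

    def on_ack(self, delivered_bytes: int, interval_s: float, rtt_s: float) -> None:
        """Record one delivery-rate sample (bytes per second) and one RTT sample."""
        self.bw_samples.append(delivered_bytes / interval_s)
        self.rtt_samples.append(rtt_s)

    def bottleneck_bw(self) -> float:
        """Largest recent delivery rate, in bytes per second."""
        return max(self.bw_samples)

    def min_rtt(self) -> float:
        """Smallest recent round-trip time, in seconds."""
        return min(self.rtt_samples)

    def pacing_rate(self, gain: float = 1.0) -> float:
        """How fast to send: a gain applied to the bandwidth estimate."""
        return gain * self.bottleneck_bw()

    def inflight_cap(self, gain: float = 2.0) -> float:
        """How much data may be in flight: a gain applied to the estimated BDP."""
        return gain * self.bottleneck_bw() * self.min_rtt()


model = BbrPathModel()
model.on_ack(delivered_bytes=125_000, interval_s=0.010, rtt_s=0.050)
model.on_ack(delivered_bytes=100_000, interval_s=0.010, rtt_s=0.040)
print(model.pacing_rate(), model.inflight_cap())
```

Because the sending rate follows measured bandwidth and delay rather than packet loss, a model of this kind does not back off on random loss the way loss-based algorithms do.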

TCP Optimization Modes

You can implement TCP optimization in one of the following modes, depending on the kind of traffic being transmitted:

- Auto mode—Auto mode is the simplest way to configure TCP optimization. You use auto mode when there are two or more VOS devices in the network (dual-ended mode). Auto mode can detect which VOS device is closest to the client and which is closest to the server (peer discovery). It then splits the connection between these two devices. You must configure auto mode on all VOS devices in the network path so they can participate in peer discovery. The VOS devices on the WAN side of a TCP segment automatically enable, on the end hosts, any options that are needed for better performance but that are not advertised by the end hosts. Auto mode is useful when the traffic is sometimes upload-heavy and sometimes download-heavy.

- Splice mode—Splice mode locally splits the TCP connection after an application or URL category is identified or when the VOS device receives the first data packet after the three-way handshake completes. Similar to peer discovery, the three-way handshake completes end to end. However, if the end hosts did not advertise high-performance TCP options, such as SACK or timestamps, these options cannot be enabled during the split. This mode is useful when you want to enable only single-sided optimization, but cannot do a proxy or peer discovery because not enough information is available in the first synchronization (SYN) packet to match the correct rule. Splice mode is mainly useful for optimizing traffic that is destined to a web proxy at a hub location. On the hub, because all application traffic is destined to the web proxy address, the SYN packet alone is not sufficient to determine the application and match the correct rule.

- Proxy mode—In proxy mode, you configure a single VOS device to be a proxy for the TCP connection instead of doing end-to-end peer discovery. The first SYN packet does not travel end to end, but is terminated locally by the VOS device. Proxy mode is similar to splice mode, but you cannot use proxy mode on a hub when the proxy is located behind the hub.

- Forward proxy—Forward proxy optimizes data being sent from clients to servers. You configure it on the VOS device closer to the client.

- Reverse proxy—Reverse proxy optimizes data being sent from servers to clients. You configure it on the VOS device closer to the server.

Note that TCP optimizations are mainly optimizations on the transmission side: they improve performance for bulk data transfer across high-latency and lossy WANs. In these cases, auto mode is often the simplest and best configuration, because a TCP proxy is created close to the client, to optimize uploads, and a TCP proxy is also created close to the server, to optimize downloads. However, there may be cases in which the overhead of two TCP proxies in the path offsets the benefit of TCP optimization. In these cases, and especially if you understand the nature of the application well, you can use one of the single-ended TCP optimization modes (forward/reverse proxy or splice mode). For example, if you know that a particular application is always download-heavy, it may be sufficient to configure a reverse proxy close to the server. On the other hand, if it is clear that the application is always upload-heavy, it may be sufficient to configure a forward proxy close to the client.

Even though most of the optimizations are on the transmission side, some of them also help the data receiver. For example, consider a client behind a poor last-mile link accessing an application across a high-latency network. Even if the application only downloads large amounts of data from the server, the throughput may be limited by one of the following:

- The client uses only a small receive buffer, so the throughput is limited to one buffer's worth of bytes per round-trip time.

- The client does not support the SACK option, which means that loss recovery is inefficient.

You can address both these problems by using a forward proxy, which can advertise the SACK option to the server and supports a much larger receive buffer.
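The receive-buffer limit described above can be made concrete with a short calculation. The sketch below assumes a 200 ms round-trip time and a client that advertises a 65,535-byte window (the maximum without window scaling). Both figures and the function name are illustrative assumptions. The 16 MB value is the maximum buffer size listed for TCP profiles in this article.

```python
def window_limited_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when at most one window is delivered per RTT."""
    return window_bytes * 8 / rtt_seconds


RTT = 0.200  # assumed end-to-end round-trip time in seconds

# Client with an unscaled 65,535-byte receive window.
client_limit = window_limited_throughput_bps(65_535, RTT)

# Forward proxy advertising a 16 MB receive buffer toward the server.
proxy_limit = window_limited_throughput_bps(16_777_216, RTT)

print(f"client window limit: {client_limit / 1e6:.2f} Mbps")
print(f"proxy window limit:  {proxy_limit / 1e6:.2f} Mbps")
```

Under these assumptions the client's own window caps the download at roughly 2.6 Mbps regardless of link speed, while the proxy's larger buffer moves that ceiling far above typical WAN rates.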

Configure TCP Optimizations

To configure the TCP optimization features, you do the following:

- Configure TCP profiles

- Configure TCP optimization policies

Configure TCP Profiles

In a TCP profile, you configure settings that apply to a proxy or a split connection.

To configure a TCP profile:

- In Director view:

- Select the Configuration tab in the top menu bar.

- Select Templates > Device templates in the horizontal menu bar.

- Select an organization in the left menu bar.

- Select a post-staging template in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Objects & Connectors > Objects > TCP Profile in the left menu bar. The main pane displays a table of TCP profiles.

- Click the Add icon. In the TCP Profile screen, enter information for the following fields.

| Field | Description |
| --- | --- |
| Name (Required) | Enter a name for the TCP profile. |
| Description | Enter a description for the TCP profile. |
| Maximum TCP Send Buffer | Enter the maximum size of the TCP send buffer. Setting the buffer size limits TCP memory consumption. Range: 64 through 16777216 bytes (16 MB). Default: 4096 bytes (4 KB). |
| Maximum TCP Receive Buffer | Enter the maximum size of the TCP receive buffer. Setting the buffer size limits TCP memory consumption. Range: 64 through 16777216 bytes (16 MB). Default: 4096 bytes (4 KB). |
| TCP Congestion Control | Select the congestion control algorithm to use. New Reno: an algorithm that responds to partial acknowledgments. Cubic: an algorithm that uses a cubic function instead of a linear window increase function to improve scalability and stability for fast and long-distance networks. BBR: bottleneck bandwidth and round-trip propagation time (BBR) measures both the largest amount of recent bandwidth available to a connection and the connection's smallest recent round-trip delay, and then uses these metrics to control how fast it sends data and how much data it allows to be sent at any given time. Default: Cubic congestion control algorithm. |
| TCP Loss Detection | Select the method of TCP loss detection to use. Duplicate ACKs: loss detection based on duplicate ACKs. Recent acknowledgment (RACK). Default: Duplicate ACKs. |
| TCP Loss Recovery | Select the method of TCP loss recovery to use. |
| TCP Hybrid Slow Start | Click to enable TCP hybrid slow start. By default, hybrid slow start is disabled. |
| Rate Pacing | Click to enable rate pacing. Rate pacing injects packets smoothly into the network, thereby avoiding transmission bursts, which could lead to packet loss. By default, rate pacing is disabled. |

- Click OK.
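Before entering values in the TCP Profile screen, it can help to check them against the documented ranges. The following is a minimal sketch of such a check. The `TcpProfile` class, its attribute names, and the string labels for the algorithms are hypothetical and are not part of any Versa API. Only the ranges and defaults come from the field descriptions above.

```python
from dataclasses import dataclass

# Limits and defaults taken from the TCP profile field descriptions; the
# string labels are hypothetical identifiers chosen for this sketch.
MIN_BUFFER_BYTES = 64
MAX_BUFFER_BYTES = 16_777_216
CONGESTION_CONTROL = {"new-reno", "cubic", "bbr"}
LOSS_DETECTION = {"duplicate-ack", "rack"}


@dataclass
class TcpProfile:
    name: str
    max_send_buffer: int = 4096
    max_receive_buffer: int = 4096
    congestion_control: str = "cubic"
    loss_detection: str = "duplicate-ack"
    hybrid_slow_start: bool = False
    rate_pacing: bool = False

    def validate(self) -> list:
        """Return a list of problems; an empty list means the profile is valid."""
        errors = []
        if not self.name:
            errors.append("name is required")
        buffers = {
            "max_send_buffer": self.max_send_buffer,
            "max_receive_buffer": self.max_receive_buffer,
        }
        for field_name, value in buffers.items():
            if not MIN_BUFFER_BYTES <= value <= MAX_BUFFER_BYTES:
                errors.append(f"{field_name} out of range: {value}")
        if self.congestion_control not in CONGESTION_CONTROL:
            errors.append(f"unknown congestion control: {self.congestion_control}")
        if self.loss_detection not in LOSS_DETECTION:
            errors.append(f"unknown loss detection: {self.loss_detection}")
        return errors


wan = TcpProfile("wan-profile", max_send_buffer=8_388_608,
                 max_receive_buffer=8_388_608,
                 congestion_control="bbr", loss_detection="rack")
too_big = TcpProfile("too-big", max_send_buffer=32 * 1024 * 1024)
print(wan.validate())
print(too_big.validate())
```

The first profile passes with no errors. The second is rejected because a 32 MB send buffer exceeds the documented 16 MB maximum.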

Configure TCP Optimization Policies

TCP optimization policies use standard policy-match language to determine which sessions to optimize and how to optimize them.

To configure a TCP optimization policy:

- In Director view:

- Select the Configuration tab in the top menu bar.

- Select Templates > Device templates in the horizontal menu bar.

- Select an organization in the left menu bar.

- Select a post-staging template in the main pane. The view changes to Appliance view.

- Select the Configuration tab in the top menu bar.

- Select Services > SD-WAN > Policies in the left menu bar. The main pane displays a table of policies.

- Select Rules in the horizontal menu bar.

- Click the Add icon. In the TCP Profile screen, click the Enforce tab and enter information for the following fields.

| Field | Description |
| --- | --- |
| TCP Optimization (Group of Fields) | |
| Bypass Latency Threshold | Enter how much latency must be measured before TCP optimizations begin. Range: 0 through 60000 milliseconds. Default: 10 milliseconds. |
| Mode | Select a TCP optimization mode: Auto, Bypass (disables TCP optimizations), Forward proxy, Proxy, Reverse proxy, or Splice. |
| LAN Profile | Select a LAN profile. For proxy mode, you must configure a TCP profile. If you do not select TCP profiles, a system default LAN profile is applied that uses the cubic congestion control algorithm and duplicate ACK loss detection. |
| WAN Profile | Select a WAN profile. For proxy mode, you must configure a TCP profile. If you do not select TCP profiles, a system default WAN profile is applied that uses the BBR congestion control algorithm and RACK loss detection. |

- Click OK.
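The interaction between the mode, the bypass latency threshold, and the default profiles can be sketched as follows. This is an illustrative model of the behavior described in the field descriptions, not VOS code. The function names and string labels are hypothetical, and the treatment of a latency exactly equal to the threshold is an assumption.

```python
# Default profiles described in the field descriptions above. The dictionary
# keys and string labels are hypothetical names chosen for this sketch.
DEFAULT_PROFILES = {
    "lan": {"congestion_control": "cubic", "loss_detection": "duplicate-ack"},
    "wan": {"congestion_control": "bbr", "loss_detection": "rack"},
}


def is_bypassed(mode: str, measured_latency_ms: float,
                bypass_threshold_ms: float = 10) -> bool:
    """Return True if the session would skip TCP optimization."""
    if mode == "bypass":
        return True  # optimization explicitly disabled by the rule
    # Assumption: latency strictly below the threshold triggers a bypass.
    return measured_latency_ms < bypass_threshold_ms


def effective_profile(side: str, configured=None) -> dict:
    """Use the configured profile if present, otherwise the system default."""
    return configured if configured is not None else DEFAULT_PROFILES[side]


print(is_bypassed("auto", measured_latency_ms=4))    # below the 10 ms default
print(is_bypassed("auto", measured_latency_ms=120))  # high-latency path
print(effective_profile("wan"))
```

With the default 10 ms threshold, a session measured at 4 ms would be bypassed and counted under bypass-latency, while a 120 ms session would be optimized.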

Use Case Scenarios

This section describes examples of how to use TCP proxies in different scenarios.

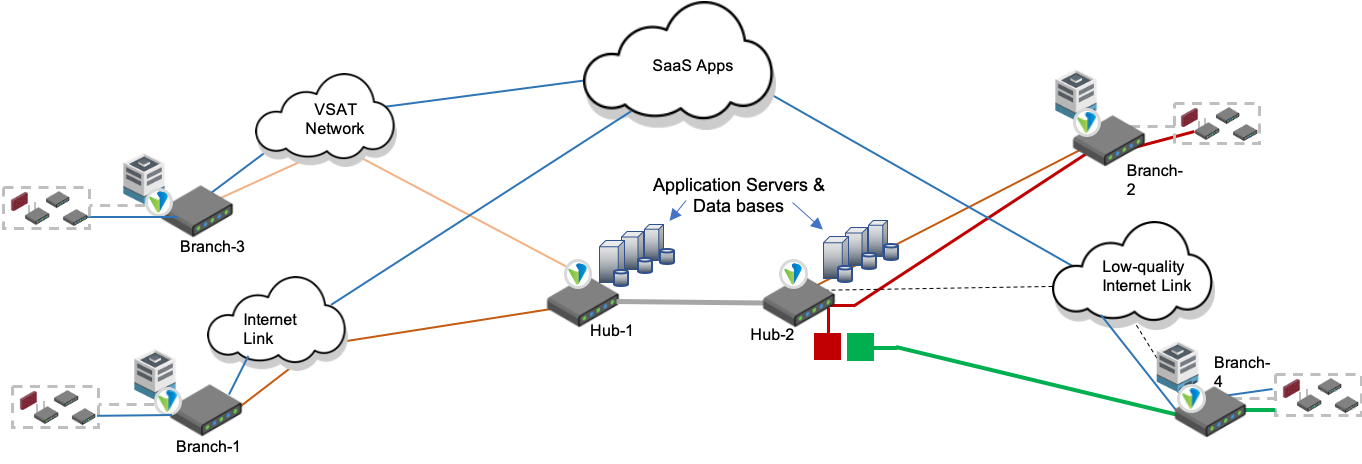

Scenario 1: Client Traversing Two Hubs To Access an Application

In this scenario, an application behind Branch-1 is communicating with an application behind Branch-2. The resulting TCP connections experience large round-trip times, and the number of hops increases the chances of random drops in the network, which reduces the effective rate at which TCP can communicate.

With TCP optimization, peer discovery places TCP proxies in Branch-1 and Branch-2, which splits the TCP connection between the client and server into three independent TCP connections: client to Branch-1, Branch-1 to Branch-2, and Branch-2 to server. TCP optimizations, including BBR congestion control and RACK loss detection, are used on the Branch-1 to Branch-2 connection to improve throughput.

Scenario 2: Client Using a Low-Quality Internet Link To Access an Application Hosted in Another Branch

In this scenario, the Branch-4 link is a low-quality internet link that is likely to drop packets. When a user behind Branch-4 tries to download a file from an application server in Branch-2, the goodput is low because of frequent packet drops, resulting in a poor user experience. This problem is solved by implementing proxy mode optimization on Hub-2. Proxy mode optimization splits the end-to-end latency between Branch-4 and Branch-2 at the hub, improving loss recovery and goodput. Alternatively, you can configure a reverse proxy at Branch-2, where BBR and RACK loss detection improve goodput without latency splitting.

Scenario 3: Client Using a VSAT Link To Access a Hub-Hosted Application

In this scenario, Branch-3 is connected to the rest of the network over a very small aperture terminal (VSAT) link, which inherently has long delays, in the range of 500 to 1000 milliseconds, and experiences random losses. A user connecting to an application behind Hub-1 experiences a slow connection. TCP optimization in auto mode splits the end-to-end connection into three segments, and the TCP connection between Branch-3 and Hub-1 uses BBR and RACK loss detection to improve throughput.

Scenario 4: Client Using a Low-Quality Internet Link To Access a Cloud-Based Application

TCP optimization is useful when users behind a lossy link try to access cloud-based applications. When a local breakout is used, the resulting user experience is degraded because of losses introduced on the access link.

In this scenario, it is important for the TCP connection to have a short round-trip time so that it can recover more quickly from the lost packets. Splitting the TCP connection between Branch-4 and Hub-2 (short round-trip time) and between Hub-2 and the cloud-based SaaS applications (longer round-trip time) allows the losses on the lossy internet connection to be recovered faster and improves the end-to-end user experience.
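A back-of-the-envelope comparison shows why the split helps. The sketch below assumes that a lost packet is recovered in about one round-trip time of the TCP connection on which the loss occurs, and it ignores proxy processing delay and timeout-based recovery. The segment round-trip times (20 ms and 150 ms) and the function name are illustrative assumptions.

```python
def loss_recovery_time_ms(segment_rtts_ms, lossy_segment: int, split: bool) -> float:
    """Approximate time to recover one lost packet.

    Assumes recovery takes about one RTT of the TCP connection that
    carries the lossy segment.
    """
    if split:
        # Each segment is its own TCP connection and recovers independently.
        return segment_rtts_ms[lossy_segment]
    # A single end-to-end connection recovers over the full path RTT.
    return sum(segment_rtts_ms)


# Hypothetical RTTs: Branch-4 to Hub-2 (lossy access link), Hub-2 to SaaS.
rtts = [20, 150]

unsplit = loss_recovery_time_ms(rtts, lossy_segment=0, split=False)
with_split = loss_recovery_time_ms(rtts, lossy_segment=0, split=True)
print(f"end-to-end recovery: ~{unsplit} ms, split recovery: ~{with_split} ms")
```

Under these assumptions, each loss on the access link costs about 20 ms instead of about 170 ms.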

Verify TCP Optimization

You can verify TCP optimization using show commands available through the VOS CLI.

View TCP-Optimized Sessions

When TCP optimization is enabled, you can view a list of sessions for which TCP optimization is in effect.

To view optimized TCP sessions, issue the show orgs org org-name sessions tcp-terminated brief CLI command. The command output should be similar to the example below, which shows TCP optimization is in effect for the session with ID 1809.

admin@SDWAN-Branch1-cli> show orgs org Versa-org sessions tcp-terminated brief

VSN VSN SESS DESTINATION SOURCE DESTINATION TCP

ID VID ID SOURCE IP IP PORT PORT PROTOCOL NATTED SDWAN APPLICATION OPTIMIZED STATE STATE

------------------------------------------------------------------------------------------------------------------------------------------------

0 2 1809 10.70.76.100 10.70.73.100 53502 23 6 No Yes telnet/(predef) Yes Established Established

View Details for TCP Sessions and Connections

You can view details for each TCP-optimized session and check the parameters of TCP connections, such as congestion control, loss detection algorithms, and send or receive buffer sizes.

To view session details, issue the show orgs org org-name sessions tcp-terminated detail CLI command. The command output should be similar to the example below.

admin@SDWAN-Branch1-cli> show orgs org Versa-org sessions tcp-terminated detail
sessions tcp-terminated detail 0 2 1809
source-ip 10.70.76.100
destination-ip 10.70.73.100
source-port 53502
destination-port 23
protocol 6
natted No
sdwan Yes
application telnet/(predef)
tcp-optimized Yes
client state Established
client send-queue-count 0
client retransmit-queue-count 0
client max-send-buffer 8388608
client send-window 3737600
client send-window-scale-factor 0
client send-space 32768
client max-receive-buffer 8388608
client receive-window 65559
client receive-window-scale-factor 7
client advertised-window 65535
client send-maximum-segment-size 520
client recovery-algorithm pipe
client loss-detection duplicate-ack
client congestion-algorithm cubic
client congestion-state open
client slow-start-threshold 2097120
client congestion-window 11680
client retransmission-timeout 1000
client smoothed-rtt 0
client rtt-variance 0
client send-high-water-mark 32768
client receive-high-water-mark 65535
client reordering 3
client number-rtos 0
client fast-recoveries 0
client peer-id 0
client tcp-options "SACK|TStamp|WndScale"
server state Established
server send-queue-count 0
server retransmit-queue-count 0
server max-send-buffer 8388608
server send-window 65280
server send-window-scale-factor 8
server send-space 32768
server max-receive-buffer 8388608
server receive-window 65535
server receive-window-scale-factor 8
server advertised-window 65535
server send-maximum-segment-size 532
server recovery-algorithm pipe
server loss-detection rack
server congestion-algorithm cubic
server congestion-state open
server slow-start-threshold 2097120
server congestion-window 1088
server retransmission-timeout 201
server smoothed-rtt 1
server rtt-variance 50
server send-high-water-mark 32768
server receive-high-water-mark 65535
server reordering 3
server number-rtos 0
server fast-recoveries 0
server peer-id 113
server tcp-options "SACK|TStamp|WndScale|PeerDisc"
(END)
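If you capture the detail output to a text file, a small script can make the client-side and server-side parameters easier to compare. The following is a minimal sketch that assumes the CLI prints one field per line, with connection parameters prefixed by client or server. The function name and the output layout assumption are not from Versa documentation. The sample lines are taken from the example output above.

```python
def parse_session_detail(text: str) -> dict:
    """Group 'client <key> <value>' and 'server <key> <value>' lines by side."""
    result = {"session": {}, "client": {}, "server": {}}
    for line in text.splitlines():
        parts = line.split(None, 2)
        if len(parts) == 3 and parts[0] in ("client", "server"):
            side, key, value = parts
            result[side][key] = value.strip().strip('"')
        elif len(parts) >= 2:
            # Session-level field such as 'source-ip 10.70.76.100'.
            key, value = line.split(None, 1)
            result["session"][key] = value.strip()
    return result


sample = """\
source-ip 10.70.76.100
tcp-optimized Yes
client congestion-algorithm cubic
client loss-detection duplicate-ack
client tcp-options "SACK|TStamp|WndScale"
server congestion-algorithm cubic
server loss-detection rack
server tcp-options "SACK|TStamp|WndScale|PeerDisc"
"""

parsed = parse_session_detail(sample)
for key in ("congestion-algorithm", "loss-detection", "tcp-options"):
    print(f"{key}: client={parsed['client'][key]} server={parsed['server'][key]}")
```

In the example session, the comparison shows duplicate-ACK loss detection on the client side and RACK on the server side, and the PeerDisc option only on the server side.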

Troubleshoot TCP Optimization

TCP optimization is applied through SD-WAN policy rules. If you do not see the expected results when you verify TCP optimization, you can troubleshoot the issue using VOS CLI commands to check the SD-WAN policy rules and statistics.

Check the SD-WAN Policy Rules

If the expected TCP session does not show up in the list of TCP-optimized sessions, you should confirm whether the session matches the correct SD-WAN policy rule.

Issue the following CLI command to display session information based on the criteria that you select:

show orgs org org-name sessions extensive | select source-ip src-ip | select destination-ip dst-ip | select destination-port dst-port | match-all | select forward-sdwan-rule-name

For each session listed in the command output, the Forward SD-WAN Rule Name field shows the rule that matches the session. If this field is blank ("-"), or if the rule shown is not the one you expect to match the session, check the match criteria for the SD-WAN policy rule to determine the cause of the mismatch.

For example, the output below shows that the session with ID 1612 matches the rule named TCP-OPT-Rule.

admin@SDWAN-Branch1-cli> show orgs org Versa-org sessions extensive | select source-ip 10.70.76.100 | select destination-ip 10.70.73.100 | select destination-port 22 | match-all | select forward-sdwan-rule-name

FORWARD

VSN VSN SESS SDWAN RULE

ID VID ID NAME

------------------------------

0 2 1612 TCP-OPT-Rule

Check the SD-WAN Policy Rule Statistics

If the session matches the expected SD-WAN rule, but does not show up in the list of optimized TCP sessions, you can check the statistics for the SD-WAN policy rule related to TCP optimization.

Issue the following CLI command to display SD-WAN policy rule statistics:

show orgs org-services org-name sd-wan policies default-policy rules statistics tcp-optimization brief [sd-wan-rule-name]

The command output includes the following parameters to help with troubleshooting:

- sessions-optimized—Number of sessions that matched this SD-WAN rule and were optimized.

- sessions-bypassed—Number of sessions that matched this SD-WAN rule but were bypassed from TCP optimization. Per-reason counters are available for bypassed sessions.

- peer-discovery-success—Number of successful discoveries of remote peers with TCP optimization. In auto mode, this value should be non-zero.

- bypass-no-peer—Number of sessions bypassed because no TCP optimization peer was found (auto mode only). Check whether the remote peer has a corresponding SD-WAN rule with TCP optimization that matches this session. In some setups, this result is expected.

- bypass-non-syn—A growing value may indicate asymmetric routing, in which the TCP session does not flow through this router in both directions.

- bypass-latency—Number of sessions bypassed because the latency was below the threshold configured in the TCP optimization section of the SD-WAN rule.

- bypass-no-resources—Number of sessions bypassed due to insufficient resources (mbuf).

Note that these are cumulative counters, which start counting at the time of a router restart, creation of an SD-WAN rule, or a clearing of statistics.

The example output below shows statistics for the policy rule TCP-OPT-Rule:

admin@SDWAN-Branch1-cli> show orgs org-services Versa-org sd-wan policies Default-Policy rules statistics tcp-optimization brief
rules statistics tcp-optimization brief TCP-OPT-Rule
sessions-optimized 2
sessions-bypassed 20
peer-discovery-success 1
splice-success 0
bypass-configured 0
bypass-non-tcp 19
bypass-non-syn 0
bypass-tracking-failure 0
bypass-split-failure 0
bypass-already-terminated 0
bypass-no-resources 0
bypass-no-peer 0
bypass-latency 1
bypass-error 0
bypass-inv-peer-id 0
bypass-non-syn-ack 0
bypass-inv-syn-ack 0
bypass-inv-last-ack 0
client fast-recoveries 0
client average-fast-recovery-time 0
client rto-recoveries 0
client average-rto-recovery-time 0
client tlp-recoveries 0
server fast-recoveries 0
server average-fast-recovery-time 0
server rto-recoveries 0
server average-rto-recovery-time 0
server tlp-recoveries 0
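To see at a glance why sessions were bypassed, you can extract the non-zero bypass counters from saved command output. The sketch below assumes that each counter is printed on its own line as a name followed by an integer. The function names are hypothetical, and the sample values are taken from the example output above.

```python
def parse_counters(text: str) -> dict:
    """Map each counter name to its integer value; skip lines without a count."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[-1].isdigit():
            counters[" ".join(parts[:-1])] = int(parts[-1])
    return counters


def bypass_breakdown(counters: dict) -> list:
    """Return the non-zero bypass-* counters, largest first."""
    reasons = [(name, count) for name, count in counters.items()
               if name.startswith("bypass-") and count > 0]
    return sorted(reasons, key=lambda item: item[1], reverse=True)


sample = """\
rules statistics tcp-optimization brief TCP-OPT-Rule
sessions-optimized 2
sessions-bypassed 20
peer-discovery-success 1
bypass-non-tcp 19
bypass-non-syn 0
bypass-no-peer 0
bypass-latency 1
"""

counters = parse_counters(sample)
breakdown = bypass_breakdown(counters)
print(breakdown)
```

For the example rule, the 20 bypassed sessions break down into 19 non-TCP sessions and 1 session below the latency threshold, so none of the bypasses point to a peer discovery or routing problem.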

You can use the remote-branch option to display statistics specific to a remote branch, which is helpful when troubleshooting issues toward a particular branch:

show orgs org-services org-name sd-wan policies default-policy rules statistics tcp-optimization remote-branch [sd-wan-rule-name] [remote-branch-name]

To view the number of TCP-optimized sessions for each policy rule, issue the following CLI command:

show orgs org-services org-name sd-wan policies default-policy rules statistics tcp-optimization brief sessions-optimized

The command output displays cumulative counters of the sessions optimized for each policy rule, in table view. For example:

-cli> show orgs org-services Versa-org sd-wan policies Default-Policy rules statistics tcp-optimization brief sessions-optimized

SESSIONS

NAME OPTIMIZED

---------------------------

TCP-OPT-Rule 4

MATCH-ALL-Rule 0

In Releases 22.1.4 and later, you can use the following command to view the counter for the current TCP-optimized sessions on the device as part of sessions summary statistics for the organization:

show orgs org org-name sessions summary

For example:

-cli> show orgs org Versa-org sessions summary

NAT NAT NAT SESSION TCP UDP ICMP OTHER TCP OPTIMIZED

VSN SESSION SESSION SESSION SESSION SESSION SESSION SESSION COUNT SESSION SESSION SESSION SESSION SESSION

ID COUNT CREATED CLOSED COUNT CREATED CLOSED FAILED MAX COUNT COUNT COUNT COUNT COUNT

----------------------------------------------------------------------------------------------------------------------------

0 1 18647 18646 0 4815 4815 0 100000 1 0 0 0 1

This value is also available through SNMP OID 1.3.6.1.4.1.42359.2.2.1.2.4.1.16.

Supported Software Information

Releases 21.1 and later support all content described in this article, except:

- Release 21.1.1 increases the maximum send and receive buffer sizes from 8 MB to 16 MB and adds support for the forward proxy and reverse proxy TCP optimization modes.